The McGill Intelligent Classroom

Gallery

Background

Information technology promised to empower us and simplify our lives. In reality, most of us can attest that the opposite is true. Modern presentation technology, for example, has made teaching in today’s classrooms increasingly complex and daunting. Electronic classrooms offer instructors a variety of multimedia presentation tools such as the VCR, document camera, and computer inputs, allowing for the display of video clips, transparencies, and computer-generated simulations and animations. Unfortunately, even the most elegant user interfaces still frustrate many would-be users.

Whereas fifty years ago a teacher’s only concern was running out of chalk, faculty now struggle to perform relatively simple tasks, such as connecting their computer to the projector, displaying a videotape, and even turning on the lights. Because of the cognitive effort and time its use requires, the technology tends to be underutilized. Worse still, it often distracts the instructor from the primary pedagogical task. While technology’s capacity to improve the teaching and learning experience is evident to many, its potential has remained largely untapped.

A related concern is the effort required to exploit the technology for novel applications, for example, distance or on-line education. The desire to provide lecture content to students who are unable to attend the class in person, as well as to those who wish to review the material at a later time, has been a driving force behind the development of videoconferencing and web-based course delivery mechanisms. Although a number of universities now offer courses on-line, the cost involved in creating high-quality content is enormous. Both videoconferencing and simple videotaping of the lectures require the assistance of a camera operator, sound engineer, and editor. For asynchronous delivery, lecture material, including slides, video clips, and overheads, must be digitized, formatted, and collated in the correct sequence before being transferred. Adding any material at a later date, for example, the results of follow-up discussion relating to the lecture, is equally complicated. The low-tech solution, which offers the lecture material by videotape alone, still involves considerable effort to produce and suffers further from a lack of modifiability, a single dimension of access (tape position), and a single camera angle. These limitations prevent random access (e.g. skip to the next slide) and view control (e.g. view the instructor and overhead transparency simultaneously at reasonable resolution), thus limiting the value to the students.

Evolution of Project Objectives

Our principal concern in this project was to overcome the complexity and effort associated with the use of electronic classroom technology and the costs of on-line lecture delivery. Accordingly, the initial objectives of this project were to develop, in a classroom setting, computer-augmented presentation technology in order to:

- reduce the complexity of operating electronic classroom presentation technology such that instructors can make use of the LCD projector, VCR, and other devices, without having to worry about “which button to press”

- automatically generate a multimedia (web-based) version of the class, including transparencies, hand-written notes, and the audio-visual of the lecture itself, for later review by instructors and/or students

- provide the means for real-time audience response systems, of particular relevance for large classes, permitting instructors to assess, rapidly, the students’ understanding of a particular topic.

Through early meetings among the design team, undergraduate students, and pedagogical experts from McGill’s Centre for University Teaching and Learning, we soon realized that the third of these goals was heading in the direction of “technology overkill” rather than offering a substantive improvement to the educational experience, and it was therefore dropped.

The project was also motivated by a desire to deploy new technology to support effective teaching, and to help professors enhance their teaching with innovative and powerful tools and approaches. This was particularly relevant in the Engineering faculty, where survey results indicated relatively low student satisfaction with the quality of teaching (In-Touch, 1998). While technology itself certainly cannot make someone a better teacher, we thought that it could be used to offer the tools necessary for improved self-evaluation and for ongoing, qualitative, student feedback regarding both the good and bad points of one’s teaching style. The role of situated feedback was seen as offering the potential for sustained faculty improvement.

Initially, we considered the possibility of instructors reviewing their web-based lectures after class, or asking students to provide on-line critique of the instructors’ teaching style, but these approaches seemed of questionable benefit. Furthermore, combining the functionality of viewing a lecture on-line with instructor critique was considered problematic. However, the discussion led to a new proposal that offered similar functionality but a different purpose, namely to permit students to ask questions on-line during the viewing of a recorded lecture.

The motivation for this proposal came from our observation that electronic lectures tend to be closed systems. Not only is it difficult for instructors to add to or modify existing content, but there is no mechanism for students to offer feedback concerning the lecture or ask questions relating to the material, apart from email or course newsgroups. While such tools provide communication between students and instructor, they lack both permanence and visibility. Questions posed in class become part of the entire class’s experience, whereas, if asked and answered electronically, the exchange is only available to a few participants (email) or to those students who are actively following the discussion thread (newsgroup). The thread eventually disappears or is buried by ensuing discussion on unrelated topics. A further limitation is the lack of context, i.e. exactly what was the student viewing before asking the question? This led to the formulation of a new project objective, namely:

- Previously Asked Questions (PAQ): Students’ questions could be time-stamped based on the position in the lecture where they were asked, and relayed to the instructor and/or TAs for their reply. With the addition of post-processing software, the instructor could be provided with some useful statistics (e.g., 10 students had questions about slide #17). These questions could either be answered electronically or discussed in a subsequent lecture. The software might augment or replace a newsgroup discussion, or the professor could lead off a class by summarizing the feedback and addressing questions raised. This would have the additional impact of demonstrating the instructor’s willingness to listen to the class. Furthermore, these same tools could be used by professors to manage the increased interaction with students created by asynchronous questioning and enable alternative means of student evaluation.
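The post-processing step described above can be illustrated with a minimal sketch. It assumes that each question is stored with its offset into the recorded lecture and that a log of slide start times is available; the names `Question`, `slide_at`, and `questions_per_slide` are hypothetical and do not describe the project's actual software.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    """A student question tagged with its position in the recorded lecture."""
    student: str      # hypothetical student identifier
    offset_s: float   # seconds into the lecture when the question was asked
    text: str


def slide_at(offset_s, slide_starts):
    """Return the 1-based number of the slide on screen at offset_s.

    slide_starts is a sorted list of the times (in seconds) at which each
    slide first appeared; the first entry is assumed to be 0.
    """
    return max(bisect_right(slide_starts, offset_s), 1)


def questions_per_slide(questions, slide_starts):
    """Count the distinct students who asked a question during each slide."""
    askers = {}
    for q in questions:
        slide = slide_at(q.offset_s, slide_starts)
        askers.setdefault(slide, set()).add(q.student)
    return {slide: len(students) for slide, students in sorted(askers.items())}


if __name__ == "__main__":
    # Assumed slide log: slide 1 starts at 0 s, slide 2 at 120 s, slide 3 at 300 s.
    starts = [0.0, 120.0, 300.0]
    asked = [
        Question("s1", 10.0, "What does this term mean?"),
        Question("s2", 130.0, "Can you repeat the derivation?"),
        Question("s3", 150.0, "Where does this constant come from?"),
    ]
    for slide, count in questions_per_slide(asked, starts).items():
        print(f"{count} student(s) had questions about slide #{slide}")
```

Counting distinct students rather than raw questions is one possible design choice; it keeps a single student who asks several questions about the same slide from inflating the statistic shown to the instructor.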

Project Progress

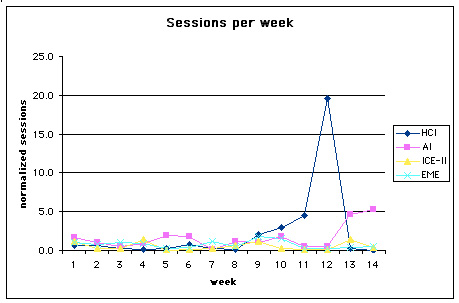

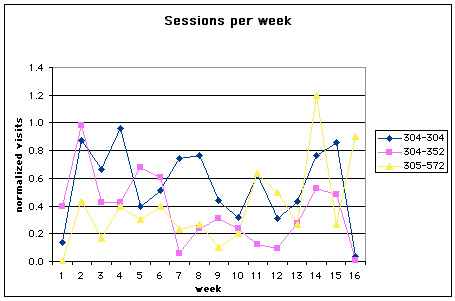

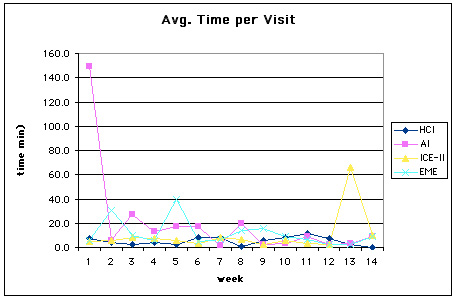

Web Log Analysis

Usage over time

Summary of Student Questionnaire Data

Includes full questionnaire tables and commentary:

- Beginning of term questionnaire results (Table 2)

- Midterm questionnaire results (Table 3)

- End of term questionnaire results (Table 4)

References

[Abowd et al, 1998] Abowd, G., Atkeson, C., Brotherton, J., Enqvist, T., Gulley, P., and Lemon, J., Investigating the capture, integration and access problem of ubiquitous computing in an educational setting. In Proceedings of Human Factors in Computing Systems CHI ’98. ACM Press, New York, pp. 440-447.

[Cooperstock et al, 1997] Cooperstock, J.R., Fels, S.S., Buxton, W. and Smith, K.C. (1997), Reactive Environments: Throwing Away Your Keyboard and Mouse. Communications of the ACM, 40(9), 65-73.

[In-Touch, 1998] Survey of Graduate and Undergraduate Students. In-Touch Survey Systems for the Recruitment and Liaison Office, McGill University, 1997-1998.

[Winer, 2000] Winer, L. Student use of and reaction to MC13 (The “Intelligent Classroom”): January–March 2000, CUTL Internal Report, McGill University, 2000.

[Winer, 2001] Winer, L.R. and Cooperstock, J.R. The “Intelligent Classroom”: Changing teaching and learning with an evolving technological environment. International Conference on Computers and Learning, Coventry, UK, April 2001.

Relevant links

- Instructions for the McGill Intelligent Classroom facilities (now obsolete)

- Instructions for the Mini-Presentation System (now obsolete)

- The future of the electronic classroom

- Cooperstock, J.R. (2001) Classroom of the Future: Enhancing Education through Augmented Reality. HCI International, Conference on Human-Computer Interaction, New Orleans, pp.688-692.

- Winer, L.R. and Cooperstock, J.R. (2002) The “Intelligent Classroom”: Changing teaching and learning with an evolving technological environment. Journal of Computers and Education, 38, 253-266.

- Jie, Y. and Cooperstock, J.R. (2002) Arm Gesture Detection in a Classroom Environment. IEEE Workshop on Applications of Computer Vision, Orlando, pp. 153-157.

- Cooperstock, J.R. (2003) Intelligent Classrooms need Intelligent Interfaces: How to Build a High-Tech Teaching Environment that Teachers can use? American Society for Engineering Education, Nashville, June.