This project is funded by the new Network of Centres of Excellence on Graphics, Animation, and New Media (GRAND). Participants include Jeremy Cooperstock, Stephen Brooks (Dalhousie), Ravin Balakrishnan (Toronto), Sidney Fels (UBC), Abby Goodrum (Ryerson), Carl Gutwin (Saskatoon), Paul Kry (McGill), Gerd Hauck (Waterloo), Sam Fisher (NSCAD), Robert Gardiner (UBC), and Xin Wei Sha (Concordia).


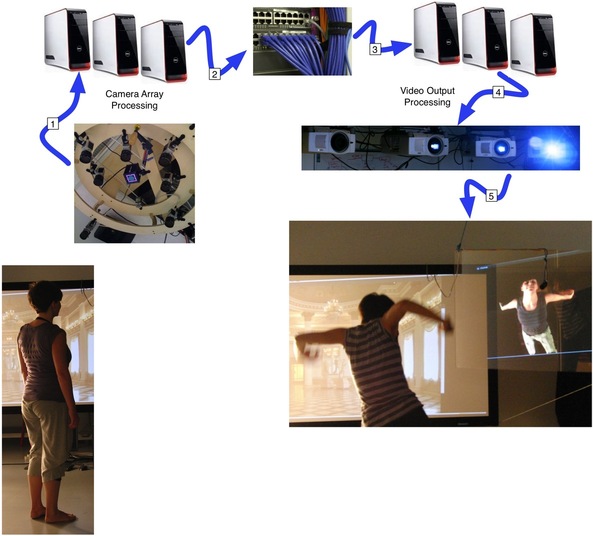

High-level overview of the Virtual Presence pipeline. (1) Video of the performer is acquired by a steerable camera array, which selects one of the cameras or interpolates between several of them to provide the desired viewing perspective, and the performer is segmented from the background. The video is then (2) transported over the network using low-latency protocols to (3) a second set of computers responsible for rendering the scene, possibly combined with background graphics and video from other sites, which (4) assign the resulting graphics content to multiple display devices as appropriate. These devices (5) output the rendered content through suitable display hardware, such as the transparent HoloView screen shown here.
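The five numbered stages in the figure can be read as a simple dataflow. The following is a minimal Python sketch of that flow, not the project's software: every name (`Frame`, `acquire`, `transport`, `render`, `assign`, `output`) is hypothetical, camera interpolation is reduced to picking the camera whose azimuth is nearest the desired view, and segmentation and low-latency networking are stubbed out.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Frame:
    """Hypothetical unit of content moving through the pipeline."""
    source_site: str
    camera_id: int
    segmented: bool = False
    layers: List[str] = field(default_factory=list)


def angular_distance(a: float, b: float) -> float:
    # Smallest difference between two azimuths in degrees, handling wrap-around.
    return abs((a - b + 180.0) % 360.0 - 180.0)


def acquire(site: str, cameras: Dict[int, float], desired_deg: float) -> Frame:
    # Stage 1 (simplified): choose the camera nearest the desired viewpoint
    # instead of interpolating, and flag the performer as segmented (stub).
    chosen = min(cameras, key=lambda cid: angular_distance(cameras[cid], desired_deg))
    return Frame(source_site=site, camera_id=chosen, segmented=True)


def transport(frame: Frame) -> Frame:
    # Stage 2 (stub): stands in for low-latency network transport.
    return frame


def render(frame: Frame, extra_layers: List[str]) -> Frame:
    # Stage 3: composite the performer with background graphics or remote video.
    frame.layers = [f"performer@{frame.source_site}"] + list(extra_layers)
    return frame


def assign(frame: Frame, displays: List[str]) -> Dict[str, List[str]]:
    # Stage 4: map the composited content onto each display device.
    return {display: list(frame.layers) for display in displays}


def output(assignments: Dict[str, List[str]]) -> List[str]:
    # Stage 5 (stub): describe what each display would show, in display-name order.
    return [f"{d}: {', '.join(layers)}" for d, layers in sorted(assignments.items())]


if __name__ == "__main__":
    frame = acquire("site-A", {0: 0.0, 1: 90.0, 2: 180.0}, desired_deg=100.0)
    frame = render(transport(frame), ["background", "video@site-B"])
    for line in output(assign(frame, ["HoloView"])):
        print(line)
```

Keeping each stage a separate function mirrors the figure's split between acquisition machines and a second set of rendering machines: in a real deployment the boundary at `transport` would be a network hop rather than a function call.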

Videoconferencing suffers from limitations of fidelity and delay, and often proves inadequate for supporting group discussion or highly collaborative activity, especially among more than two sites. Moreover, existing technologies tend to prohibit or constrain the mobility of participants. Similarly, the integration of remote avatars or virtual actors into shared spaces often imposes constraints on the realism, believability, and artistic control of rendered content, while real-time data acquired during interaction and performance is not fully exploited in current post-production systems.

This project will enhance the next generation of virtual presence and live performance technologies in a manner that supports the task-specific demands of communication, interaction, and production. The goals are to: improve the functionality, usability, and richness of the experience; support use by multiple people, possibly at multiple locations, engaged in work, artistic performance, or social activities; and avoid inducing greater fatigue than the non-mediated alternative.

Our approach to realizing these objectives entails further development and integration of several enabling technologies, including video acquisition and display architectures, spatially reactive yet controllable lights and cameras, tetherless tracking, video segmentation, multimodal synthesis, latency-reduction techniques, and novel GIS-like production interfaces. Numerous challenges must be overcome, including the seamless integration of video display with the presentation of 3D content that is visible from multiple angles. Spatially appropriate audio is equally important, especially in the context of human-human communication, and the haptic modality (sense of touch) should also be supported. Participants must be able to move freely while continuing to experience the relevant sights, sounds, and ground textures, as they would in a "real", non-mediated environment. Finally, recognizing the importance of minimizing delay in distributed, complex group activity, the project will investigate several mechanisms to reduce the impact of network latency.

Our proposed methodology for tackling these objectives consists of an iterative design-implement-evaluate cycle, in which prototype tools are integrated into applications and deployed in real-world performance and collaborative design activities at an early stage, allowing for meaningful evaluation outside an artificial laboratory environment. Based on this ongoing feedback, the tools will be refined, with validation conducted on each iteration. As a result, work toward the project milestones, listed below, will proceed largely in parallel, with evaluation conducted initially on existing tools developed outside our project to provide a basis for comparison with our subsequent developments.

Last update: 24 May 2010