Project team: J. Cooperstock (McGill ECE), T. Arbel (McGill ECE), L. Collins (McGill MNI), R. Balakrishnan (Toronto CS), R. Del Maestro (McGill Neurology and Neurosurgery), B. Goulet (McGill MUHC); funded as an NSERC Strategic Project


This project deals with the challenges of medical image visualization, in particular within the domain of neurosurgery. We wish to provide an effective means of visualizing and interacting with the patient's brain data, in a manner that is natural to surgeons, for training, planning, and surgical tasks. This entails three fundamental objectives: advanced scientific visualization; robust recognition of an easily learned and usable set of input gestures for navigation and control; and real-time communication of the data among multiple participants to permit effective understanding and interpretation of its contents. The expertise required to accomplish these tasks spans neurosurgery, human-computer interaction, image processing, visualization, and network communications.

Technologies that support efficient, contact-free manipulation, coupled with visualization approaches that combine multiple data sources, would significantly enhance the quality of information available during surgery. Moreover, the ability to share and discuss such visualizations at a distance would offer tremendous opportunities for medical education, as well as for expert training and peer coaching in complex procedures.

Existing visualization tools for neurosurgery typically display 2D slices of data, along with 3D renderings that give some idea of positional relationships. However detailed in resolution, the 2D views offer poor spatial context and impose a significant burden on the surgeon's cognitive resources to process and interpret the displayed information. The 3D visualizations are limited to surface renderings of structures of interest. Although parameter manipulation is supported in a pseudo-interactive manner, it makes a dramatic difference to the user when lighting, specularity, opacity, and viewpoint can be changed rapidly and the results seen in near real time. The improvement in experience is analogous to the difference between viewing a static ultrasound frame and watching live ultrasound video.

State-of-the-art systems integrate additional data sources, for example intra-operative ultrasound or stereo microscopy, with the magnetic resonance imaging (MRI) model. However, these remain restricted to simple 3D overlays, with little or no computational intelligence devoted to helping surgeons make sense of the data in real time. Furthermore, navigation through what is inherently a three-dimensional volume is performed inefficiently through a series of 2D gestures, usually with a computer mouse. Geographically distributed interaction with the data, in collaboration with other medical professionals, is currently impractical with the available tools.

These observations motivate the development of new techniques and tools for the visualization and navigation of neurological data. Because time constraints on expert surgeons preclude their traveling to assist colleagues or train students in unfamiliar operations, it is equally imperative to support effective communication between geographically distributed users while they interact with the data.

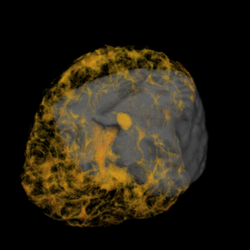

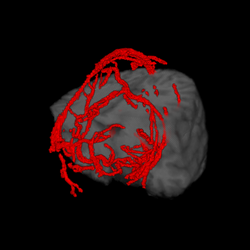

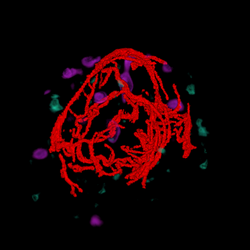

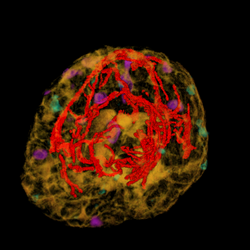

A major task is to implement the abstraction layer for rendering synthetic data, independent of display technology. This may involve using the MNI's VTK-based IGNS system (IBIS) or building on our own rendering architecture, with low-level rendering engines for each display type and input taken from existing neuro-visualization tools. In either case, the goal is to integrate 3D rendering of MRI, fMRI, PET, DTI, and intra-operative ultrasound data, possibly in conjunction with the tracked positions of surgical tools, and to add modules for additional processing (e.g., feature highlighting, density control, and interactive manipulation/deformation). Rendering strategies must take into account the importance of merging volumetric and surface rendering techniques when dealing with the multiple data sets available.

a) Cutting plane + MRI

b) Cutting plane + blood vessels

c) Blood vessels + fMRI + PET

d) Blood vessels + fMRI + PET + MRI
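One common way to merge volumetric and surface rendering along a single viewing ray is front-to-back alpha compositing, where a sample lying on an opaque segmented surface (such as a vessel mesh) terminates the ray. The sketch below is our own illustration, not the project's renderer (which may be built on IBIS/VTK): the `Sample` structure, the `density_scale` parameter standing in for "density control", and the early-termination threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

RGB = Tuple[float, float, float]


@dataclass
class Sample:
    """One sample along a viewing ray; samples are ordered front (eye) to back."""
    color: RGB                # color assigned by the modality's transfer function
    alpha: float              # opacity in [0, 1] from the transfer function
    is_surface: bool = False  # True where the ray meets an opaque surface, e.g. a vessel mesh


def composite_ray(samples: Iterable[Sample], density_scale: float = 1.0,
                  termination: float = 0.99) -> Tuple[RGB, float]:
    """Front-to-back compositing of volumetric samples, stopped by an opaque surface.

    Returns the accumulated (r, g, b) color and the accumulated opacity.
    """
    acc = [0.0, 0.0, 0.0]
    acc_alpha = 0.0
    for s in samples:
        if s.is_surface:
            a = 1.0  # an opaque surface absorbs all remaining transmittance
        else:
            # Hypothetical "density control": scale volumetric opacity, clamp to [0, 1].
            a = min(max(s.alpha * density_scale, 0.0), 1.0)
        weight = (1.0 - acc_alpha) * a  # share of light not yet absorbed in front
        for i in range(3):
            acc[i] += weight * s.color[i]
        acc_alpha += weight
        if s.is_surface or acc_alpha >= termination:
            break  # early ray termination: nothing behind can contribute noticeably
    return (acc[0], acc[1], acc[2]), acc_alpha


samples = [
    Sample((1.0, 0.0, 0.0), 0.5),                   # semi-transparent fMRI activation
    Sample((0.0, 0.0, 1.0), 0.0, is_surface=True),  # opaque vessel surface
    Sample((0.0, 1.0, 0.0), 1.0),                   # hidden behind the vessel
]
print(composite_ray(samples))  # ((0.5, 0.0, 0.5), 1.0)
```

Because opacity only accumulates front to back, changing `density_scale` re-weights every volumetric contribution without re-segmenting the surfaces, which is one reason this ordering suits interactive parameter changes.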

Several studies will be conducted to compare the effectiveness of prototype candidates (combinations of display technologies, visualization tools, and interaction paradigms), each in a training scenario involving medical students and an instructor. Evaluation is intended to involve analysis of improvements (in positional accuracy, speed of performance, or other relevant metrics) for surgical tasks such as:

Additional technologies will need to be integrated as the project advances, in particular free-hand tracking (possibly of a hand wearing a surgical glove). Other possibilities being considered include projecting data onto the patient's head as an augmented reality overlay, and combining "peel-away" visualization tools that simulate cutting away a layer of tissue in order to investigate what lies immediately below.

Another area of experimentation involves different interface techniques and feedback modalities related to the acquisition of control points and the manipulation of a 3D model. These studies will include basic tasks of object or target selection, investigating the comparative performance benefits of different rendering technologies and modes of feedback. We will compare the capabilities of different display technologies, and the changing requirements as one moves between 2D (conventional screen), immersive (multi-screen), stereoscopic (3D), autostereoscopic, and volumetric displays. Related studies will address the effectiveness of display systems for multiple concurrent users.
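One way to keep the rendering layer independent of display technology, as the project intends, is to let each display backend decide only how many views to render and from which eye positions, while the scene itself is shared. The sketch below is a hypothetical illustration: the class names, the 65 mm interocular default, and the even spacing of lenticular views across a baseline are our assumptions, not the project's design.

```python
from abc import ABC, abstractmethod
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


class DisplayBackend(ABC):
    """Hypothetical display-specific layer: it only chooses the eye positions to render."""

    @abstractmethod
    def eye_positions(self, head: Vec3) -> List[Vec3]:
        ...

    def render(self, scene: Callable[[Vec3], object], head: Vec3) -> List[object]:
        # The display-independent scene produces one image per requested eye position.
        return [scene(eye) for eye in self.eye_positions(head)]


class MonoscopicScreen(DisplayBackend):
    def eye_positions(self, head: Vec3) -> List[Vec3]:
        return [head]


class StereoDisplay(DisplayBackend):
    """Two views (e.g. HMD or polarized projection) separated by the interocular distance."""

    def __init__(self, ipd: float = 0.065):  # metres; an assumed typical adult value
        self.ipd = ipd

    def eye_positions(self, head: Vec3) -> List[Vec3]:
        x, y, z = head
        half = self.ipd / 2
        return [(x - half, y, z), (x + half, y, z)]


class MultiviewLenticular(DisplayBackend):
    """n views spaced evenly along a horizontal baseline (an assumed layout)."""

    def __init__(self, n_views: int = 8, baseline: float = 0.3):
        if n_views < 2:
            raise ValueError("a multiview display needs at least two views")
        self.n_views = n_views
        self.baseline = baseline

    def eye_positions(self, head: Vec3) -> List[Vec3]:
        x, y, z = head
        step = self.baseline / (self.n_views - 1)
        start = x - self.baseline / 2
        return [(start + i * step, y, z) for i in range(self.n_views)]


def scene(eye: Vec3) -> str:
    # Stand-in for the real renderer: it just reports the viewpoint.
    return f"view from x={eye[0]:+.4f}"


for display in (MonoscopicScreen(), StereoDisplay(), MultiviewLenticular(n_views=4)):
    print(type(display).__name__, display.render(scene, (0.0, 0.0, 0.6)))
```

Under this split, adding a new display type (for example a volumetric display) means writing one backend, while visualization modules stay untouched.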

The project will also integrate a notion of registration accuracy or uncertainty into the visualization, so that the surgeon can know where to trust the image and where additional data is required. In addition, the tools will provide the surgeon with a means of translating and rotating the ultrasound data, using simple gestures, to better align it within the MRI volume. This form of interaction would be much preferred as an assistive tool for generating such aligned displays. As the automated tools evolve in capability, the same process could serve as an additional initialization or correction step. These techniques would permit more accurate surgical procedures, with the hoped-for result of improved surgical outcomes and patient care.
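Gesture-driven alignment ultimately amounts to editing a rigid-body transform (a rotation plus a translation) that maps ultrasound coordinates into the MRI volume. The minimal sketch below assumes that gestures have already been interpreted as incremental rotations and translations (in millimetres); the class and function names are hypothetical, and the composition order (each increment applied in MRI coordinates, after the current transform) is our design choice.

```python
import math
from typing import List, Optional, Sequence, Tuple

Matrix = List[List[float]]  # 4x4 homogeneous transform


def identity() -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(dx: float, dy: float, dz: float) -> Matrix:
    m = identity()
    m[0][3], m[1][3], m[2][3] = dx, dy, dz
    return m


def rotation_z(angle_deg: float) -> Matrix:
    # Rotation about the z axis; rotations about x and y are built analogously.
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    m = identity()
    m[0][0], m[0][1], m[1][0], m[1][1] = c, -s, s, c
    return m


def apply(m: Matrix, p: Sequence[float]) -> Tuple[float, float, float]:
    v = (p[0], p[1], p[2], 1.0)
    x, y, z = (sum(m[i][k] * v[k] for k in range(4)) for i in range(3))
    return (x, y, z)


class UltrasoundAlignment:
    """Accumulates gesture increments into an ultrasound-to-MRI rigid transform."""

    def __init__(self, initial: Optional[Matrix] = None):
        # `initial` could come from an automated registration, if one is available.
        self.us_to_mri = initial if initial is not None else identity()

    def nudge(self, increment: Matrix) -> None:
        # Pre-multiplying applies the increment in MRI coordinates, after the current transform.
        self.us_to_mri = matmul(increment, self.us_to_mri)

    def to_mri(self, p: Sequence[float]) -> Tuple[float, float, float]:
        return apply(self.us_to_mri, p)


align = UltrasoundAlignment()
align.nudge(rotation_z(90))           # e.g. a twisting gesture
align.nudge(translation(10.0, 0, 0))  # e.g. a dragging gesture, in millimetres
print(align.to_mri((1.0, 0.0, 0.0)))  # approximately (10.0, 1.0, 0.0)
```

As written, rotations pivot about the MRI origin; an interface would more likely rotate about the centre of the ultrasound volume, which amounts to wrapping the rotation between a translation to that centre and its inverse.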

While improved display of volumetric brain data is likely to aid understanding of the 3D content, little investigation appears to have been made into how different display technologies and interaction paradigms affect perception and understanding of such data. To address this need, we are performing comparative studies of different display technologies (conventional 2D screen, head-mounted display, stereoscopic projection with polarizing filters, and autostereoscopic display) intended to evaluate their efficacy in communicating three-dimensional content, specifically in the context of a neurosurgical planning task. While we hypothesize that stereoscopic display offers benefits over a monoscopic equivalent for understanding volumetric content and its spatial relationships, our study is intended to quantify the resulting differences in task performance among these displays and relative to a traditional monoscopic display. We conducted two experiments: virtual biopsy and volume carving.

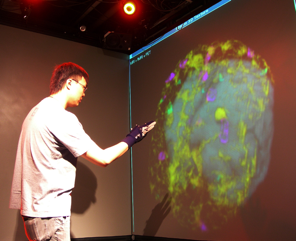

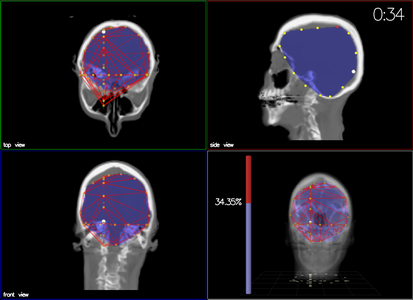

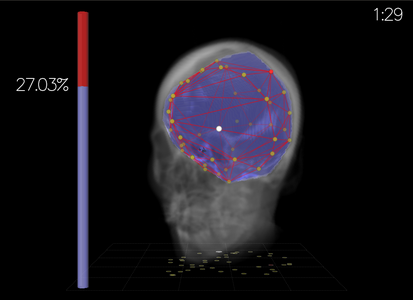

a) Sample view of the experiment

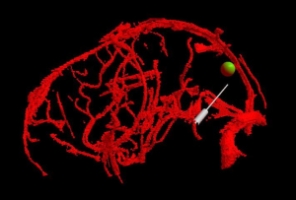


b) Experimental setup


The experimental task was to define a straight, vessel-free path from the cortical surface to the targeted tumor using a pen-like probe. Because of the complex anatomical structure of the human brain and the topology of key blood vessels, defining such a path for the insertion of probes and tools can be challenging in practice. For this task, we visualized the segmented brain vasculature with a simulated tumor at positions of varying difficulty to reach, as shown in panel a. Subjects were provided with hand-coupled motion cues as they manipulated the volume and the probe, as shown in panel b. Two tangible objects were used as input devices: a small plastic skull to manipulate the orientation and proximity (zoom) of the vasculature, and a replica of a biopsy probe used to locate the tumor. Once the subject was satisfied with the probe position, a foot step, sensed by an electronic game-controller dance pad, ended the trial. This avoided the risk of introducing jitter into the probe position, which might otherwise have resulted had a manual button press been required.
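The page does not say how path validity was scored. One straightforward check, sketched here purely as an assumption, samples points along the straight segment from the cortical entry point to the tumor target and tests the nearest voxel of each sample against a binary vessel mask, optionally with a safety margin. The voxel-set representation, the `step` length, and the `margin` parameter are hypothetical.

```python
import math
from typing import Set, Tuple

Voxel = Tuple[int, int, int]
Point = Tuple[float, float, float]


def path_hits_vessel(entry: Point, target: Point, vessels: Set[Voxel],
                     step: float = 0.25, margin: int = 0) -> bool:
    """True if the straight segment entry -> target passes through, or within
    `margin` voxels of, any vessel voxel. Coordinates are in voxel units."""
    length = math.dist(entry, target)
    n = max(1, math.ceil(length / step))  # number of sampling intervals
    for i in range(n + 1):
        t = i / n
        p = tuple(e + t * (g - e) for e, g in zip(entry, target))
        cx, cy, cz = (math.floor(c + 0.5) for c in p)  # nearest voxel to the sample
        for dx in range(-margin, margin + 1):
            for dy in range(-margin, margin + 1):
                for dz in range(-margin, margin + 1):
                    if (cx + dx, cy + dy, cz + dz) in vessels:
                        return True
    return False


vessels = {(5, 5, 5), (5, 6, 5)}  # toy vessel voxels
print(path_hits_vessel((0, 5, 5), (10, 5, 5), vessels))            # True
print(path_hits_vessel((0, 3, 5), (10, 3, 5), vessels))            # False
print(path_hits_vessel((0, 3, 5), (10, 3, 5), vessels, margin=2))  # True
```

Point sampling can miss a vessel that only clips the corner of a voxel between samples; a smaller `step`, a nonzero `margin`, or an exact voxel traversal would tighten the check.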

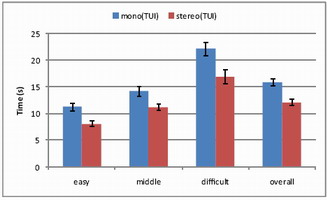

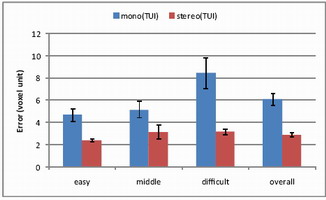

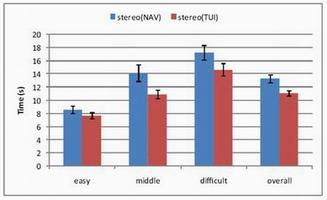

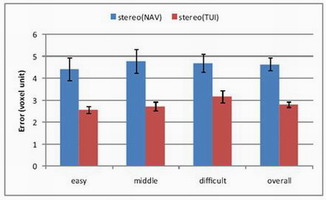

This experiment, comparing stereoscopic and monoscopic display, was conducted with 12 participants (8 male and 4 female, aged 22 to 37). As expected, performance improved in the stereo display condition: completion time was reduced (p<0.0001) and errors decreased (p<0.0001).

a) Completion time

b) Error

In this experiment, we compared the tangible user interface (TUI) with a typical 3D mouse (3Dconnexion SpaceNavigator). The experiment was conducted with 12 participants (6 male and 6 female, aged 22 to 57).

a) Completion time

b) Error

a) Head-mounted display

b) Polarized projection

c) Multiview lenticular display

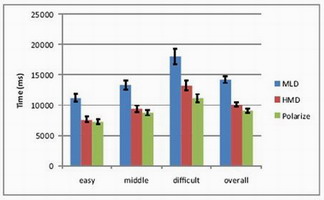

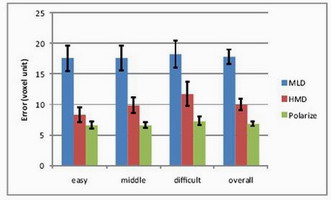

We conducted a study of different stereoscopic display modalities: head-mounted display (HMD), polarized projection, and multiview lenticular display (MLD). Twelve participants took part in the experiment, 6 male and 6 female, aged 23 to 36. Overall, the results indicate that polarized projection outperformed the other two display modalities, yielding both the fastest completion time (p<0.05) and the smallest error (p<0.01).

a) Completion time

b) Error

a) Traditional orthographic views

b) Perspective view

The task required participants to generate a convex hull encompassing the target sub-volume, indicated in blue, by placing a set of 3D points that defined the mesh, as illustrated in the figure above. Participants were given five minutes to minimize the error, calculated dynamically as the sum of brain volume incorrectly excluded from the mesh and non-brain volume incorrectly included in it.
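On a voxel grid, the error described above is the volume of the symmetric difference between the target and the region enclosed by the participant's mesh. The sketch assumes the hull is available as a set of half-spaces n·c ≤ d (a convex region can always be written this way) and that voxel centres sit at integer coordinates; computing the hull planes from the placed points is left out, and all names and the voxel volume are hypothetical.

```python
from typing import Iterable, Set, Tuple

Voxel = Tuple[int, int, int]
Plane = Tuple[Tuple[float, float, float], float]  # (normal n, offset d): inside if n . c <= d


def voxelize_halfspaces(planes: Iterable[Plane], shape: Tuple[int, int, int]) -> Set[Voxel]:
    """Voxels whose centres satisfy every half-space of a convex region."""
    planes = list(planes)
    nx, ny, nz = shape
    inside = set()
    for x in range(nx):
        for y in range(ny):
            for z in range(nz):
                if all(n[0] * x + n[1] * y + n[2] * z <= d for n, d in planes):
                    inside.add((x, y, z))
    return inside


def carving_error(target: Set[Voxel], hull: Set[Voxel], voxel_volume: float = 1.0) -> float:
    """Target volume excluded from the hull plus non-target volume included in it."""
    missed = len(target - hull)  # incorrectly excluded
    extra = len(hull - target)   # incorrectly included
    return (missed + extra) * voxel_volume


target = {(x, y, z) for x in range(2) for y in range(4) for z in range(4)}  # 32 voxels
hull = voxelize_halfspaces([((1.0, 0.0, 0.0), 2.0)], (4, 4, 4))            # x <= 2: 48 voxels
print(carving_error(target, hull))  # 16.0
```

Because both terms are counted, a participant cannot lower the error simply by enlarging the hull, which matches the trade-off the task imposes.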

For output, two obvious candidates are a 3D perspective view or three orthographic views. The 3D perspective view is displayed stereoscopically by a polarized projection system.
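To make the distinction between the two output options concrete: each orthographic view simply discards one coordinate, so depth along that axis is visible only in the other views, whereas a perspective view divides by distance from the eye, and rendering it for two horizontally offset eyes (as a stereoscopic projection does) yields a depth-dependent disparity. This is a minimal pinhole model under our own assumptions: RAS-like patient axes for the orthographic views, and separate camera coordinates (eye looking along +z, focal length `f`) for the perspective views.

```python
from typing import Dict, Tuple

Point = Tuple[float, float, float]


def orthographic_views(p: Point) -> Dict[str, Tuple[float, float]]:
    """Assumed RAS-like axes (x: left-right, y: posterior-anterior, z: inferior-superior).
    Each view discards the coordinate along its own viewing axis."""
    x, y, z = p
    return {"axial": (x, y), "coronal": (x, z), "sagittal": (y, z)}


def perspective(p: Point, f: float = 1.0, eye_x: float = 0.0) -> Tuple[float, float]:
    """Pinhole projection in camera coordinates: eye at (eye_x, 0, 0) looking along +z."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point must lie in front of the eye (z > 0)")
    return (f * (x - eye_x) / z, f * y / z)


def disparity(p: Point, ipd: float, f: float = 1.0) -> float:
    """Horizontal offset between left- and right-eye images; equals f * ipd / z."""
    left = perspective(p, f, eye_x=-ipd / 2)
    right = perspective(p, f, eye_x=+ipd / 2)
    return left[0] - right[0]


print(orthographic_views((10.0, 20.0, 30.0)))  # depth along each viewing axis is lost
print(perspective((1.0, 1.0, 2.0)))             # (0.5, 0.5): farther points shrink
print(disparity((0.0, 0.0, 2.0), ipd=0.065), disparity((0.0, 0.0, 4.0), ipd=0.065))
```

Disparity halves when distance doubles, so nearby structures are separated in depth more distinctly than distant ones.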

a) Mouse

b) SpaceNavigator

c) Rockin' Mouse

d) Depth Slider

e) Free space (Wii Remote)

f) Phantom

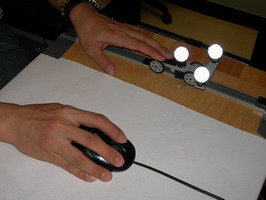

For the volume carving task, we compare the regular mouse with a set of 3D input devices. Our selection of 3D devices was driven by the results of our previous 3D placement experiment. The set includes a Depth Slider, a Rockin’ Mouse, a free-space device in either absolute or relative mode, and a Phantom®. We considered the inclusion of isotonic or elastic devices.

The Depth Slider is a physical slider manipulated by the non-dominant hand and used in cooperation with a regular mouse in the dominant hand; the slider adds depth control to the mouse.

We re-implemented the original Rockin’ Mouse design of Balakrishnan et al., modifying a regular mouse by adding a curved foam base with a flat bottom for tilting, and attaching a number of retro-reflective markers so that its position and tilt could be tracked by a Vicon™ motion capture system.

The free-space device consists of a Nintendo Wii Remote™ equipped with infrared reflectors, likewise tracked by a Vicon™ motion capture system.

The Phantom® consists of a stylus attached to an articulated arm; the 3D cursor is placed at the tip of the stylus.
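These devices differ mainly in how they produce the three cursor coordinates. The sketch below contrasts three such mappings: the Depth Slider combining mouse x/y with slider depth, and a tracked free-space device in absolute mode (device position maps directly to cursor position) versus relative mode (device displacement moves the cursor, with a clutch so the hand can be repositioned). The page does not describe the actual transfer functions, so the names, gains, depth range, and clutch mechanism here are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def depth_slider_cursor(mouse_xy: Tuple[float, float], slider: float,
                        depth_range: Tuple[float, float] = (0.0, 100.0)) -> Vec3:
    """Mouse gives x and y; the slider position in [0, 1] maps linearly onto depth."""
    s = min(max(slider, 0.0), 1.0)
    near, far = depth_range
    return (mouse_xy[0], mouse_xy[1], near + s * (far - near))


def absolute_cursor(device_pos: Vec3, scale: float = 1.0,
                    origin: Vec3 = (0.0, 0.0, 0.0)) -> Vec3:
    """Absolute mode: the cursor mirrors the (scaled) tracked device position."""
    return tuple(o + scale * d for o, d in zip(origin, device_pos))


@dataclass
class RelativeCursor:
    """Relative mode: device displacements move the cursor while the clutch is engaged."""
    gain: float = 1.0
    cursor: Vec3 = (0.0, 0.0, 0.0)
    _last: Optional[Vec3] = None

    def update(self, device_pos: Vec3, clutch_engaged: bool) -> Vec3:
        if clutch_engaged and self._last is not None:
            self.cursor = tuple(c + self.gain * (p - q)
                                for c, p, q in zip(self.cursor, device_pos, self._last))
        # Forgetting the last position on release avoids a jump when re-engaging.
        self._last = device_pos if clutch_engaged else None
        return self.cursor


demo = RelativeCursor(gain=2.0)
demo.update((0.0, 0.0, 0.0), True)
print(demo.update((1.0, 0.0, 0.0), True))        # moved by gain * displacement
print(depth_slider_cursor((10.0, 20.0), 0.5))   # mid-slider depth
```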

The experiment used a balanced within-subjects repeated-measures design, in which the order of device presentation was counterbalanced across subjects as much as possible. Six subjects (two female, four male), aged 23 to 38 and all right-handed, took part in the study.
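A standard way to counterbalance presentation order is a balanced Latin square; the page does not say which scheme was used, so the sketch below is only one possibility. With an even number of conditions, n consecutive participants see every condition in every position once, and every condition immediately follows every other exactly once; with an odd number, odd-numbered participants receive the reversed row, so full balance needs 2n participants. With six subjects and more device conditions than that, full balance is impossible, consistent with "as much as possible". The device labels in the usage lines are hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def balanced_latin_order(conditions: Sequence[T], participant: int) -> List[T]:
    """Presentation order for one participant (0-based) from a balanced Latin square."""
    n = len(conditions)
    order: List[T] = []
    j = h = 0
    for i in range(n):
        # First row follows the pattern 0, 1, n-1, 2, n-2, 3, ...
        if i < 2 or i % 2 == 1:
            val = j
            j += 1
        else:
            val = n - h - 1
            h += 1
        order.append(conditions[(val + participant) % n])  # shift row per participant
    if n % 2 == 1 and participant % 2 == 1:
        order.reverse()  # odd n: mirrored rows restore first-order carry-over balance
    return order


devices = ["mouse (ortho)", "mouse (perspective)", "Depth Slider", "Rockin' Mouse",
           "free-space (absolute)", "free-space (relative)", "Phantom"]  # hypothetical labels
for subject in range(6):
    print(subject, balanced_latin_order(devices, subject))
```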

The volume carving task places a strong emphasis on accuracy and permits continual refinement through the addition of strategically placed mesh points around the target volume. Here, speed itself is not the objective; rather, the goal is to minimize error within the allotted five minutes. As can be seen in the figure above, the mouse in perspective view and the Depth Slider compete closely for most of the five-minute trial. Ultimately, the mouse in orthographic views achieves a slightly lower error, but it reaches that value more slowly, because participants have limited awareness of gaps in the mesh under that viewing condition. As we expected, the Depth Slider proves to be a useful mechanism for this particular task. These results confirm the suitability of the mouse as an accurate positioning device and demonstrate the advantages of bimanual manipulation, in which the non-dominant hand can be used effectively to select depth.

Last update: 23 September 2009