Recording Studio that Spans a Continent

Recording engineers in Los Angeles mixed 12 high quality audio channels streamed live over the Internet from Montreal.

This project forms a component of the McGill Advanced Learnware Network project, funded by Canarie Inc. and Cisco Systems. The demonstration was prepared by the Audio Engineering Society’s Technical Committee on Network Audio Systems.

On Saturday, September 23, 2000, a jazz group performed in a concert hall at McGill University in Montreal. The recording engineers mixing the 12 channels of audio during the performance were not in a booth at the back of the hall, but in a theatre at the University of Southern California in Los Angeles.

The demonstration was part of the 109th Audio Engineering Society Convention in Los Angeles. The McGill University research project, which is developing techniques to stream high quality multichannel audio over the Internet, is funded primarily by Canarie Inc. and Cisco Systems. Hardware was provided by dCS Ltd., Mytek Digital, and RME, and software by the Fraunhofer IIS-A and the Advanced Linux Sound Architecture (ALSA) project. The assistance of these companies is gratefully acknowledged. Special thanks are also due to Paul Barton-Davis of ALSA for his incredible efforts rewriting pieces of the sound driver at the last minute. This demonstration would not have taken place without his help.

World renowned recording engineers Brant Biles and Bob Margouleff mixed the 12 audio channels for an audience in the Norris Theatre at the USC School of Cinema and Television. The audience saw the McGill Jazz Orchestra, Gordon Foote conducting, projected on a large screen in MPEG-2 video. The high quality video was transmitted over the same Internet link as the audio. The demo used the new high speed Internet networks CA*net3 in Canada and Internet2 in the U.S.

Participants

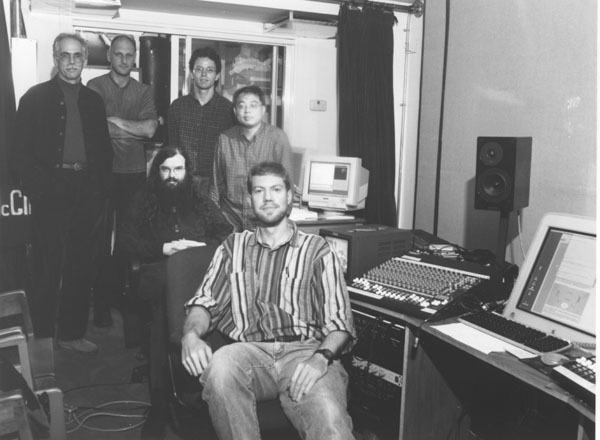

(right) In Redpath Hall at McGill, (back row from left) John Roston, Andrew Brouse, Jason Corey, Quan Nguyen, (center) Stephen Spackman, (front) Michael Fleming.

McGill Centre for Intelligent Machines: Stephen Spackman and Jeremy Cooperstock

McGill Multichannel Audio Research Laboratory: Jason Corey, Michael Fleming, Andrew Brouse and Wieslaw Woszczyk

McGill Computing Centre: Quan Nguyen

McGill Instructional Communications Centre: John Roston, Daniel Schwob and Stewart McCombie

University of Southern California School of Cinema-Television: Chris Cain and Kevin Perry

University of Southern California IMSC Immersive Audio Laboratory: Chris Kyriakakis

University of Southern California Networking Systems: Christos Papadopoulos

Background

Real-time transmission of audio data over the Internet has become relatively commonplace, but the quality and number of channels have so far been limited by bandwidth constraints. With the ongoing growth of new high speed networks comes the potential for high-fidelity, multichannel audio distribution. In cooperation with the Audio Engineering Society’s Technical Committee for Networked Audio Systems (TCNAS) and Dolby Laboratories, we previously developed protocols for reliable real-time streaming of an AC-3 (encoded Dolby 5.1) audio stream at 448 kbps and provided the world’s first-ever demonstration of this technology during the AES 107th Convention in New York (1999), followed by two later demonstrations at the Canarie 5th Advanced Networks Workshop in Toronto and INET 2000 in Yokohama, Japan.

This demonstration presents the Internet transmission of multichannel music in high-resolution (production quality) 24bit/96kHz PCM between Redpath Hall, McGill University in Montreal, Canada and the University of Southern California, Los Angeles. To our knowledge, this is the first time that live audio of this quality has been streamed over the network. Discussion will focus on technical issues, challenges of latency, quality of service and a review of applications.

Last year, we provided the world’s first demonstration of reliable real-time streaming of AC-3 (encoded Dolby 5.1 at 448 kbps) audio over the Internet during the AES 107th Convention in New York.

Performance Details

The performance began at McGill’s Redpath Hall, where 12 microphones were arranged as illustrated below to capture the audio of the McGill Jazz Orchestra, Gordon Foote conducting.

Analog audio signals from the microphone array were passed through a pre-amp into A/D converters, which provided our computer with a digital data stream, which was then packetized and transmitted.

On the receiving end, the opposite process took place, with data passed through D/A converters and mixed by the engineers locally. The sound system was comprised of:

Brant Biles and Robert Margouleff of Mi Casa Multimedia, a renowned Hollywood company specializing in multichannel audio recording, mixing, and mastering, prepared a live mix in the Norris Theater that many in the audience considered to sound like “being at McGill”. Both Biles and Margouleff are known as pioneers of multichannel music with their 5.1 channel releases of Boyz II Men “II” and Marvin Gaye’s “Forever Yours”, and Margouleff has an impressive history of winning Grammy Awards for Stevie Wonder’s “Innervisions” and “Fulfillingness”.

Biles mixed the 12 source channels into the 5.1-channel sound system of the theater by wrapping the audience with the trombones, trumpets and saxes of the McGill Jazz Orchestra and placing the piano and guitar in the front stereo at the left, and the bass and drums in the front stereo at the right. He skillfully used the on-board compressors and equalizers of the Sony DMX-R100 to bring out the presence and blend of the ensemble, achieving a very impressive overall presentation. Most of McGill’s room reverberation was captured by the individual group microphones (DPA 4011), with a touch added from the dedicated ambience pair of DPA 4006 microphones. Biles switched off the rear loudspeakers to ensure that everyone in the theatre, including the audience at the rear, would hear a wide horizontal image of the band. The sonic result was an exceptional realism and fullness of musical experience.

The mixing desk for the event was the new Sony DMX-R100 Compact Digital Console running at 96kHz locked into the six pairs of Hi-Speed AES/EBU signals supplied by Mytek 96/24 D/A converters that served only to change the bit stream format from lightpipe ADAT to independent Hi-Speed AES/EBU signals. Once mixed digitally into six 96/24 outputs in the Sony console, the six digital signals were converted to analog by an additional Mytek 96/24 D/A converter before being sent to the theatre’s 5.1 monitoring system.

The audience thoroughly enjoyed this impressive demonstration and continued talking about it on a bus ride back to the Convention Center.

Technical Details

For the demonstration, audio data was sampled by 24bit/96kHz analog-to-digital converters and sent through an ADAT lightpipe (4 channels per pipe) to an RME Hammerfall Digi 9652 audio interface, installed on a PIII-733 PC running RedHat Linux. We also planned to compress the data using the MPEG-2 Advanced Audio Coding library developed by the Fraunhofer IIS-A; however, time limitations prevented us from completing this component of the demonstration. Our software managed the reliable transport of this data over the Internet, using special thread scheduling techniques to ensure that data was read from and written to the audio devices at precisely timed intervals.

In parallel, an MPEG-2 video stream was provided using Cisco Systems’ IP/TV system. However, since our audio software had no way to communicate with the video system, the two streams had to be synchronized manually to a three second delay (the limit imposed by IP/TV). The technical presentation discussed the importance of large buffers for long-haul, reliable data transport.

Dealing with audio at the high data rates of 24bit/96kHz requires very precise timing that pushes conventional computer software to its limits. Without large buffers in the D/A hardware, it was necessary to supply these units with blocks of data samples at frequencies approaching that of the Operating System scheduler – 100 Hz under current Linux implementations. As the various threads of our code have to juggle between network I/O, audio hardware I/O, and buffer management within these time constraints, it was critical to provide very tight bounds on these operations and avoid buffer copying as much as possible.
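To give a sense of the block sizes involved, the following is a rough back-of-the-envelope sketch rather than the project's actual code. It assumes tightly packed 24-bit samples (real hardware may pad each sample to a 32-bit container) and one block per scheduler tick; the function and variable names are illustrative only.

```python
# Illustrative arithmetic: how much audio must be moved per scheduler tick.
SAMPLE_RATE_HZ = 96_000   # from the text: 96 kHz sampling
BITS_PER_SAMPLE = 24      # from the text: 24-bit samples (assumed packed, no padding)
CHANNELS = 12             # from the text: 12 microphone channels
SCHEDULER_HZ = 100        # from the text: Linux scheduler rate at the time


def block_size(sample_rate_hz, bits_per_sample, channels, blocks_per_second):
    """Return (frames per block, bytes per block) for one block per tick."""
    frames = sample_rate_hz // blocks_per_second
    bytes_per_frame = channels * bits_per_sample // 8
    return frames, frames * bytes_per_frame


frames_per_block, bytes_per_block = block_size(
    SAMPLE_RATE_HZ, BITS_PER_SAMPLE, CHANNELS, SCHEDULER_HZ
)
period_ms = 1000 / SCHEDULER_HZ

print(f"{frames_per_block} frames ({bytes_per_block} bytes) every {period_ms:.0f} ms")
```

Under these assumptions each 10 ms tick carries 960 sample frames, or 34,560 bytes, which has to be read, buffered and sent before the next tick arrives.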

Challenges and Results

The most serious obstacles we faced during development were on the interface side. For example, our attempts to develop support for the dCS Ltd. 96/24 converters, which communicate with the PC over a Firewire connection, were completely frozen by the lack of isochronous support under the current (experimental) Linux drivers. We are hoping that this issue will soon be resolved. Another attempted approach, to communicate with these units under Windows, also had to be abandoned due to the reliance on a specific Firewire interface vendor’s hardware.

The option which initially appeared more promising was to interface to the Mytek Digital 96/24 converters through the RME Hammerfall Digi 9652 running under RedHat Linux. However, we eventually discovered that the ALSA drivers had never been tested on the Hammerfall at data rates of 24bit/96kHz and in fact, required a significant rewrite to handle the unexpected format and channel rearrangement that the Hammerfall provides under these settings. This problem was finally solved less than one week before the demonstration, leaving us only a few days to complete the rest of the coding and debugging.

As for the Fraunhofer IIS-A AAC library, which works on 16bit/48kHz data, integrating this codec proved very difficult because the software was developed with the assumption that audio data would be read from and written to files rather than network buffers. We eventually overcame this hurdle, but the changes to the ALSA driver needed for the PCM demonstration required replacing the glibc library with a version incompatible with the Fraunhofer software. Unfortunately, there was insufficient time to resolve this issue, but we hope to demonstrate the AAC system with a high number of channels at next year’s AES Convention in New York.

Despite the above issues, numerous additional challenges leading up to the demonstration, several sleepless nights, and some very frightening moments on the day of the demonstration itself, the demonstration of PCM audio at 24bit/96kHz ran perfectly.

In terms of bandwidth issues, we measured the total link capacity between McGill and USC at approximately 70 Mbps just prior to the demonstration. Our transmission of audio data consumed a minimum (with no retransmissions) of 28 Mbps while the video data required an additional 3 Mbps. With moderate competing traffic on the network, our protocol continued to deliver the audio data reliably. During the demonstration, we observed a highly reduced frame rate on the video signal through the IP/TV MPEG-2 display. Although we initially speculated that this was due to bandwidth congestion, the more likely cause was a misconfigured ethernet switch setting on the receive side.
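The 28 Mbps figure is consistent with the raw PCM payload rate for 12 channels at 24bit/96kHz. The short calculation below is an illustrative check using only the numbers quoted above; it ignores packet headers and retransmissions, and the variable names are ours.

```python
# Illustrative check of the bandwidth figures quoted in the text.
CHANNELS = 12
BITS_PER_SAMPLE = 24
SAMPLE_RATE_HZ = 96_000
VIDEO_MBPS = 3.0    # MPEG-2 video stream, as reported
LINK_MBPS = 70.0    # measured McGill-to-USC capacity, as reported


def pcm_bitrate_mbps(channels, bits_per_sample, sample_rate_hz):
    """Raw PCM payload rate in megabits per second (no protocol overhead)."""
    return channels * bits_per_sample * sample_rate_hz / 1e6


audio_mbps = pcm_bitrate_mbps(CHANNELS, BITS_PER_SAMPLE, SAMPLE_RATE_HZ)
total_mbps = audio_mbps + VIDEO_MBPS
link_share = total_mbps / LINK_MBPS

print(f"audio {audio_mbps:.3f} Mbps, audio+video {total_mbps:.3f} Mbps, "
      f"{link_share:.0%} of the measured link")
```

The raw audio payload comes to 27.648 Mbps, so audio and video together used somewhat under half of the measured capacity, leaving headroom for retransmissions and competing traffic.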

We are now exploring automated synchronization of various audio formats with MPEG-2 video and DV streams, for real-time streaming and editing applications.

Software Structure

The protocol is implemented as a widely adaptable high-throughput software system using no specialized or tightly-coupled code structures.

The architecture is highly modular but non-stratified; protocols, local I/O processes and codecs run as peer threads. The central organizing abstraction and the internal communication path is a circular array of frame slots, which modules can address either randomly (subject to windowing constraints) or with self-locking cursors. The circular structure is used not only as a non-copying buffer shuttle but as the main internal temporal abstraction, with synchronous events being launched from an integrated timing wheel.
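As a rough illustration of the circular frame-slot idea, the sketch below shows a fixed ring of preallocated slots addressed by frame number, with a simple window check and zero-copy read access. It is a simplified, single-threaded assumption of how such a structure might look: the class and method names are hypothetical, and the self-locking cursors and timing wheel described above are not modelled.

```python
class FrameRing:
    """Fixed ring of preallocated frame slots, addressed by frame number."""

    def __init__(self, n_slots, frame_bytes):
        # Slots are allocated once and overwritten in place afterwards.
        self.slots = [bytearray(frame_bytes) for _ in range(n_slots)]
        self.held = [None] * n_slots   # frame number currently in each slot
        self.newest = -1               # highest frame number written so far

    def write(self, frame_no, payload):
        """Store a frame; late frames are accepted only inside the window."""
        if frame_no <= self.newest - len(self.slots):
            raise IndexError(f"frame {frame_no} is older than the window")
        idx = frame_no % len(self.slots)
        if len(payload) != len(self.slots[idx]):
            raise ValueError("payload size must match the slot size")
        self.slots[idx][:] = payload   # overwrite in place, no reallocation
        self.held[idx] = frame_no
        self.newest = max(self.newest, frame_no)

    def view(self, frame_no):
        """Return a zero-copy view of a frame, if it is still in the ring."""
        idx = frame_no % len(self.slots)
        if self.held[idx] != frame_no:
            raise KeyError(frame_no)   # never arrived, or already overwritten
        return memoryview(self.slots[idx])


ring = FrameRing(n_slots=4, frame_bytes=3)
ring.write(0, b"abc")
first = bytes(ring.view(0))   # copy out only for demonstration
ring.write(4, b"xyz")         # reuses the slot that held frame 0
```

Because readers receive views onto the slots rather than copies, several modules (for example a network sender and a local audio writer) could in principle consume the same frame without duplicating it.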

The program supports multiple simultaneous reader modules and can be configured as a local format converter, as a server, as a client, or as a minimal-delay relay.

In the current transmission strategy, the client computes an optimal resend schedule based on idealized assumptions of unlimited network bandwidth and statistically independent packet loss; resends are requested at the computed earliest detect times. The resend requests contain the client’s basic reception parameters and performance measurements. The server then processes requests by suppressing out-of-window and back-to-back packet resends (normally resulting from rising server I/O load). Thus the only state presently maintained in the server is the last send time of each frame.
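The server-side filtering described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation: the class name, the window measured in frames, and the `min_gap_s` threshold used to define "back-to-back" are all hypothetical, and the client-side schedule computation is not shown.

```python
class ResendFilter:
    """Decide whether a resend request should be honoured.

    The only state kept is the last send time of each in-window frame.
    """

    def __init__(self, window, min_gap_s):
        self.window = window          # how many recent frames remain resendable
        self.min_gap_s = min_gap_s    # minimum spacing between sends of a frame
        self.newest = -1              # highest frame number sent so far
        self.last_sent = {}           # frame number -> time of last send

    def record_send(self, frame_no, now):
        """Note that a frame was sent and forget frames outside the window."""
        self.last_sent[frame_no] = now
        self.newest = max(self.newest, frame_no)
        cutoff = self.newest - self.window
        for old in [f for f in self.last_sent if f <= cutoff]:
            del self.last_sent[old]

    def should_resend(self, frame_no, now):
        """Return True if the resend should go out, recording it if so."""
        if frame_no > self.newest or frame_no <= self.newest - self.window:
            return False              # out-of-window request
        last = self.last_sent.get(frame_no)
        if last is not None and now - last < self.min_gap_s:
            return False              # back-to-back request
        self.record_send(frame_no, now)
        return True


server = ResendFilter(window=8, min_gap_s=0.05)
for n in range(10):
    server.record_send(n, now=n * 0.01)   # frames 0..9 sent 10 ms apart

results = [
    server.should_resend(5, now=0.20),    # in window, not recently sent
    server.should_resend(5, now=0.21),    # repeated too soon
    server.should_resend(1, now=0.30),    # fell out of the window
    server.should_resend(12, now=0.30),   # not sent yet
]
```

Keeping only per-frame send times means the server needs no per-client bookkeeping, which fits the stated goal of a lightweight relay or server configuration.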

In the coming months we plan to explore techniques for dynamic bandwidth control in a multicast environment, using the same codebase.

Media Material

- Audio Media: December Issue, Multimedia News

- Mix Magazine: January Issue, Internet Audio Supplement

- Widescreen Review Magazine

- A/V Video

- Pro Sound News

- EQ Magazine

- Audio Media, December 2000

- Surround Professional, October 2000

- AES Press Release, October 2000

- CBC Radio, July 20, 2000

- Montreal Gazette, July 19, 2000

- McGill press release, July 18, 2000

- Dolby press release, October 13, 1999

- the NODE: Learning Technologies Network, September 27, 1999

- AES Press Release, September 26, 1999

- AES Press Release, September 24, 1999

- Etown.com article, September 22, 1999

- Project Overview by Elizabeth Cohen

- AES Press Release, September 1999