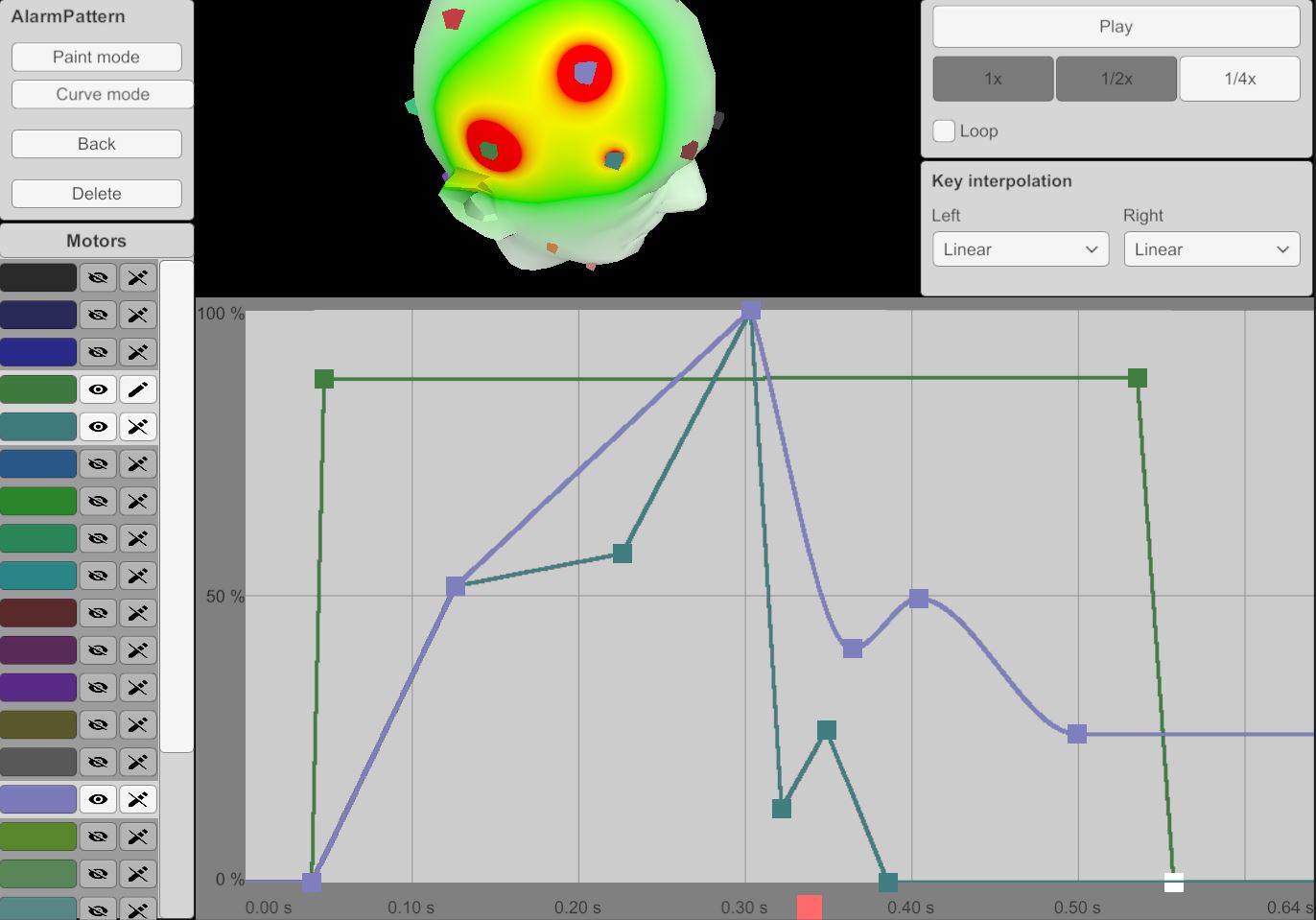

3DTactileDraw

Tool Summary

| Metadata | |
|---|---|
| Release Year (the year a tool was first publicly released or discussed in an academic paper) | 2019 |
| Platform (the OS or software framework needed to run the tool) | Unknown |
| Availability (whether the tool can be obtained by the public) | Unavailable |
| License (the type of license applied to the tool) | Unknown |
| Venue (the venue(s) for publications) | ACM CHI |
| Intended Use Case (the primary purposes for which the tool was developed) | Virtual Reality |

| Hardware Information | |
|---|---|
| Category (the general types of haptic output devices controlled by the tool) | Vibrotactile |
| Abstraction (how broad the type of hardware support is for a tool) | Bespoke |
| Device Names (the hardware supported by the tool; this may be incomplete) | HapticHead |
| Device Template (whether support can be easily extended to new types of devices) | No |
| Body Position (parts of the body where stimuli are felt, if the tool explicitly shows this) | Head |

| Interaction Information | |
|---|---|
| Driving Feature (whether haptic content is controlled over time, by other actions, or both) | Time |
| Effect Localization (how the desired location of stimuli is mapped to the device) | Target-centric |
| Non-Haptic Media (support for non-haptic media in the workspace, even if just to aid in manual synchronization) | None |
| Iterative Playback (whether haptic effects can be played back from the tool to aid in the design process) | Yes |
| Design Approaches (broadly, the methods available to create a desired effect) | Direct, Procedural |
| UI Metaphors (common UI metaphors that define how a user interacts with a tool) | Keyframe, Demonstration |
| Storage (how data is stored for import/export or internally to the software) | Unknown |
| Connectivity (how the tool can be extended to support new data, devices, and software) | None |

Additional Information

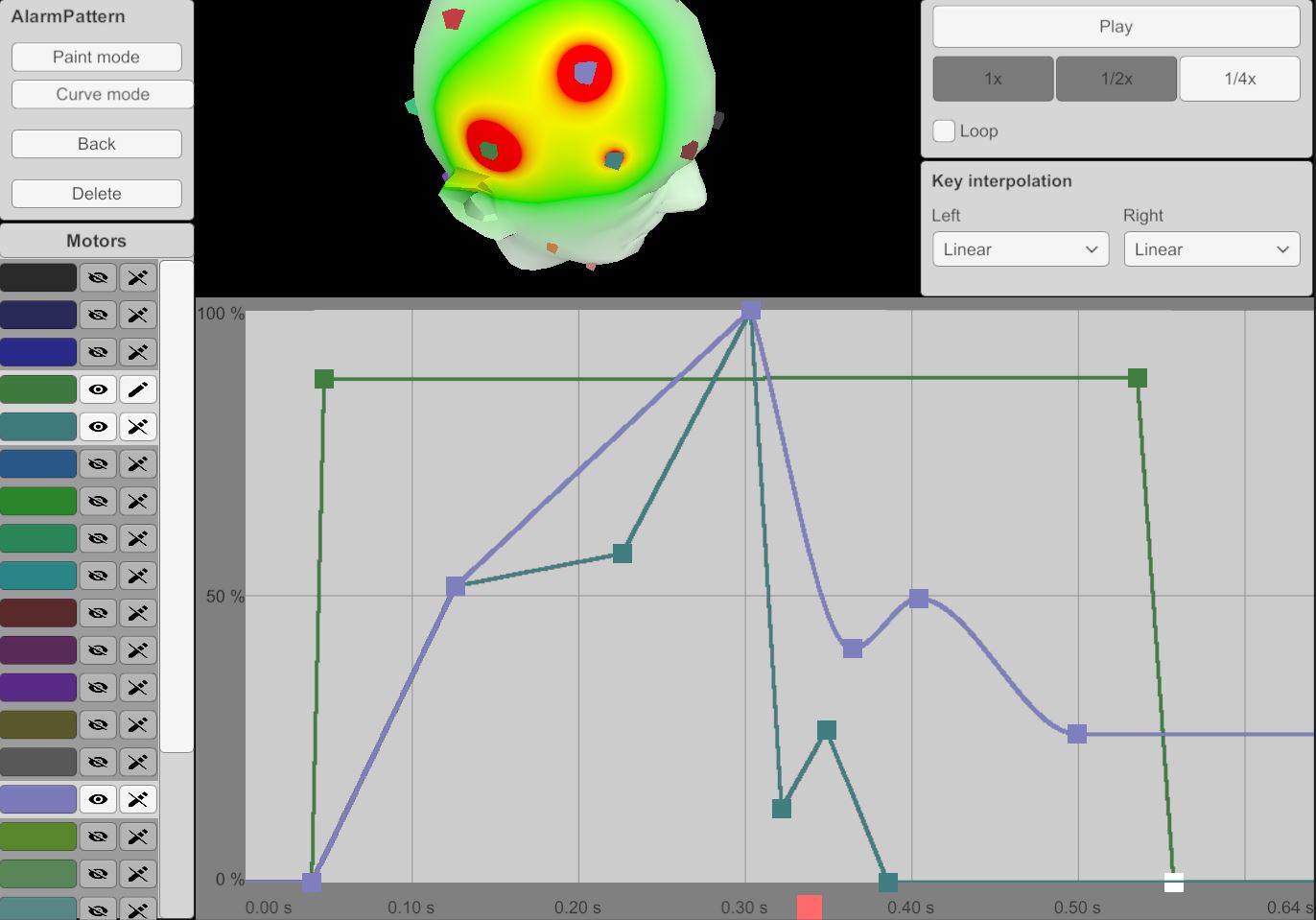

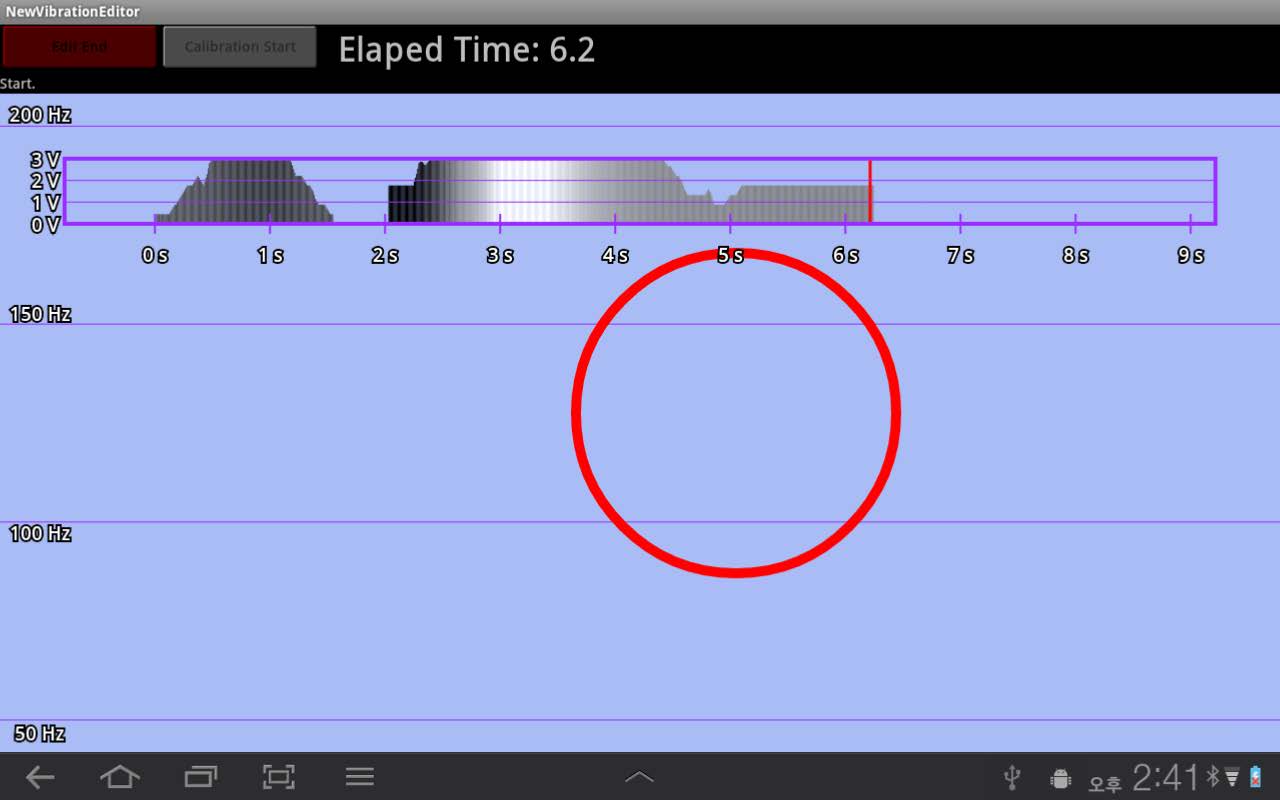

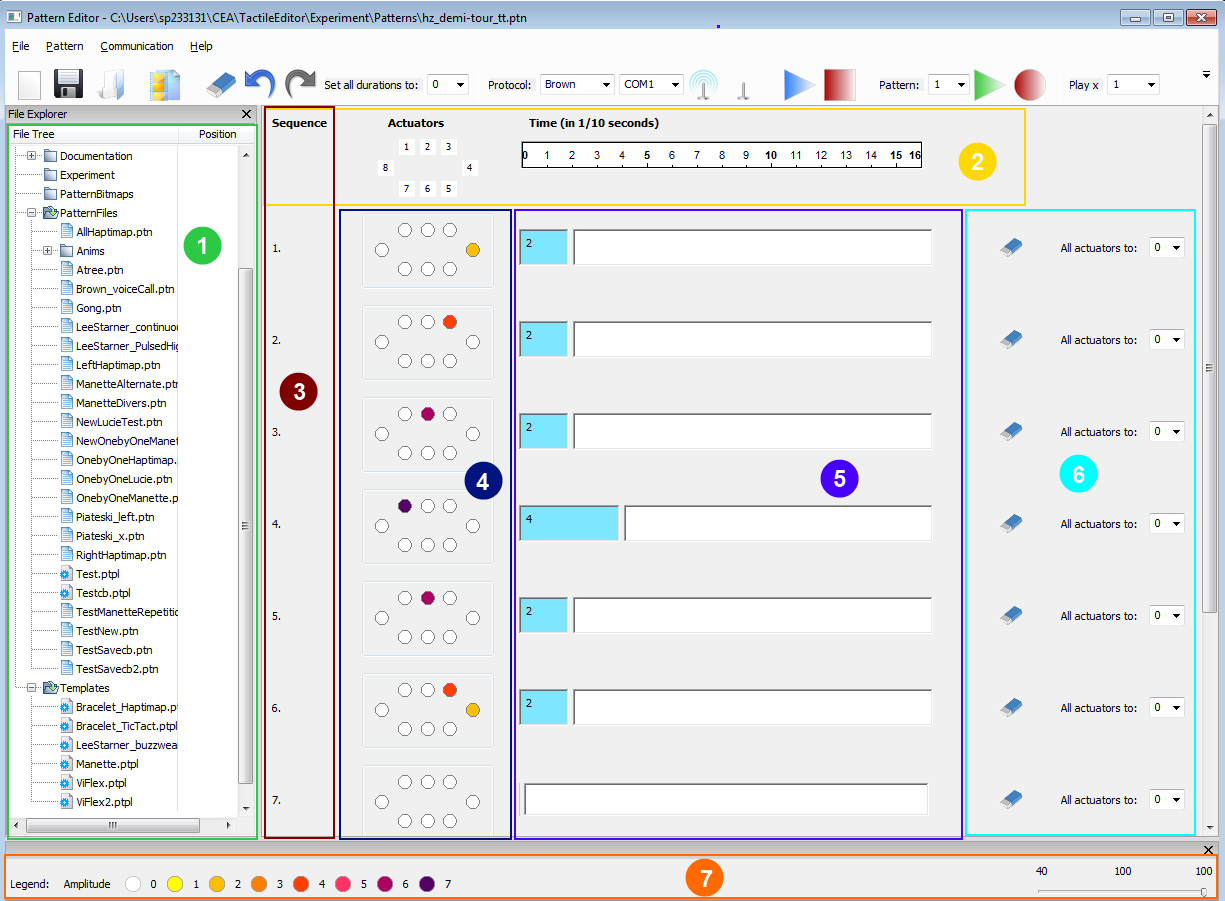

3DTactileDraw supports control of a 24-actuator array using the HapticHead helmet. Two major interfaces are present in the tool: a paint interface that permits drawing desired effects directly on the surface of a 3D head model, and a curve interface that provides per-actuator intensity keyframes on a timeline.
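As an illustration of the curve interface, per-actuator intensity keyframes can be sampled at playback time by interpolating between neighbouring keyframes. The Python sketch below is not taken from 3DTactileDraw: the `timeline` layout, the `sample_intensity` helper, the 0..1 intensity range, and the use of linear interpolation are all assumptions made for illustration.

```python
from bisect import bisect_right

def sample_intensity(keyframes, t):
    """Piecewise-linear intensity at time t from time-sorted (time_s, intensity) keyframes."""
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]          # hold the first value before the timeline starts
    if t >= times[-1]:
        return keyframes[-1][1]         # hold the last value after the timeline ends
    i = bisect_right(times, t)          # index of the first keyframe strictly after t
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

# Hypothetical timeline: actuator index -> keyframes (seconds, intensity in 0..1).
timeline = {
    0: [(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)],
    1: [(0.0, 0.2), (1.0, 0.8)],
}

# One playback frame: the intensity of every actuator at t = 0.25 s.
frame = {actuator: sample_intensity(kfs, 0.25) for actuator, kfs in timeline.items()}
```

A playback loop would call `sample_intensity` for each of the 24 actuators at every update tick and send the resulting frame to the hardware.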

For more information about 3DTactileDraw, consult the CHI 2019 paper.

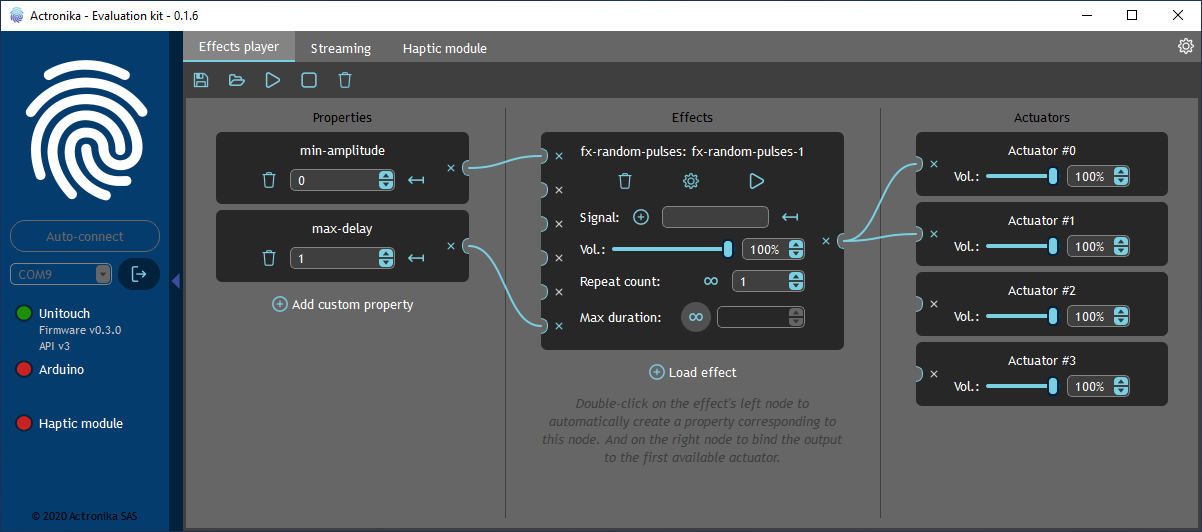

Actronika EvalKit

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2020 |
| Platform | Windows, macOS, Linux |
| Availability | Available |
| License | Open Source (GPL 3) |
| Venue | N/A |
| Intended Use Case | Hardware Control |

| Hardware Information | |
|---|---|
| Category | Vibrotactile |
| Abstraction | Consumer |
| Device Names | Actronika Unitouch |
| Device Template | No |
| Body Position | N/A |

| Interaction Information | |
|---|---|
| Driving Feature | Time |
| Effect Localization | Device-centric |
| Non-Haptic Media | Audio |
| Iterative Playback | Yes |
| Design Approaches | Direct, Procedural, Library |
| UI Metaphors | Dataflow |
| Storage | WAV |
| Connectivity | None |

Additional Information

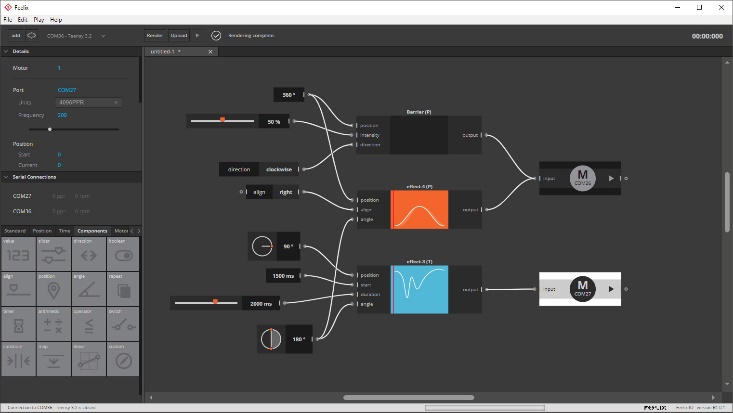

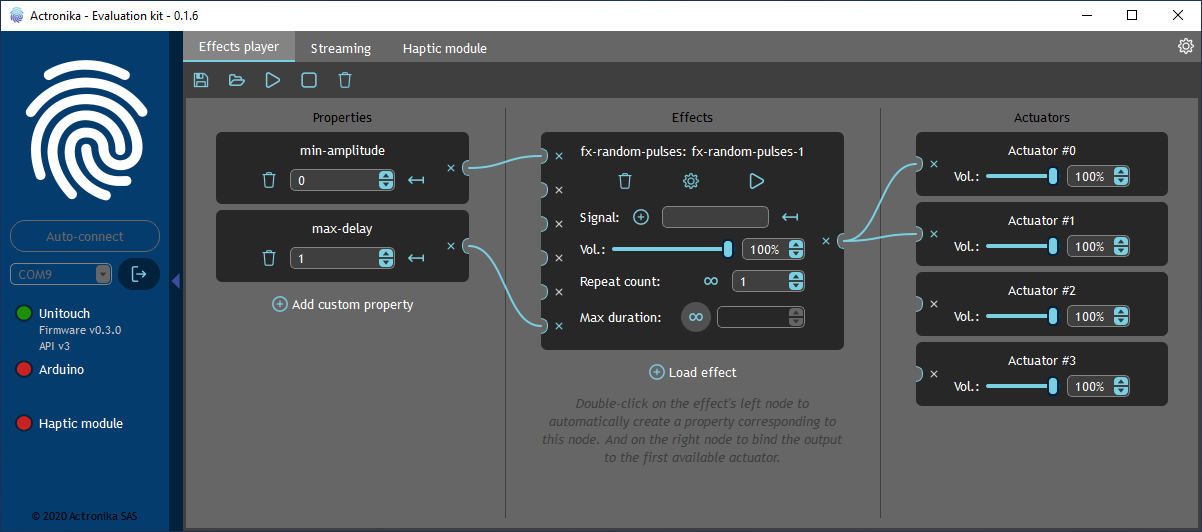

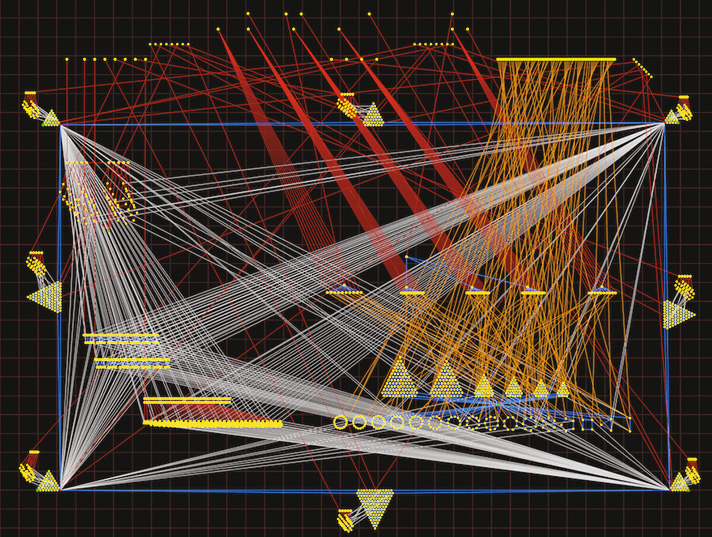

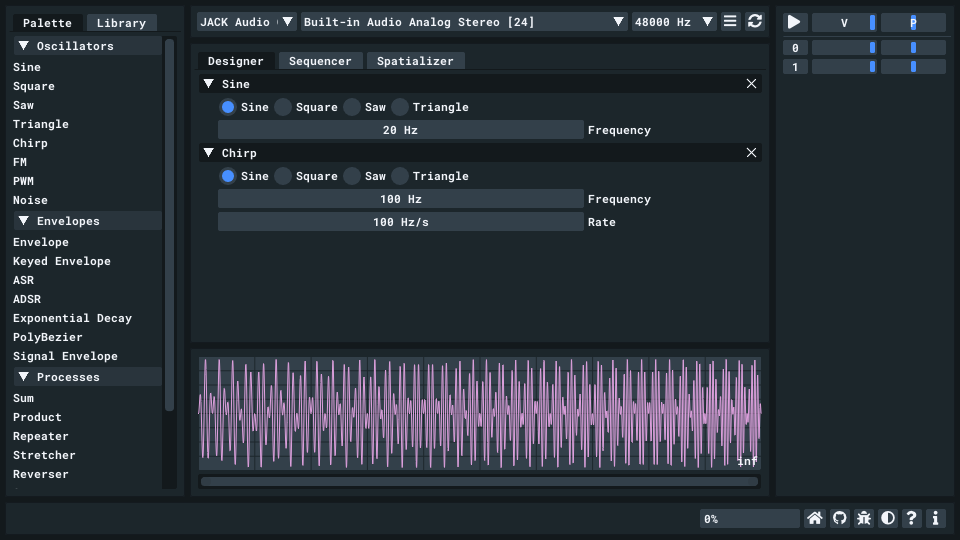

The Actronika EvalKit includes a basic dataflow interface where preset, parametrized effects can be adjusted and their output directed to different actuators. Other modes support directly playing back an audio file and trying other, more complex effects on the included haptic module.

For more information, consult the Actronika website.

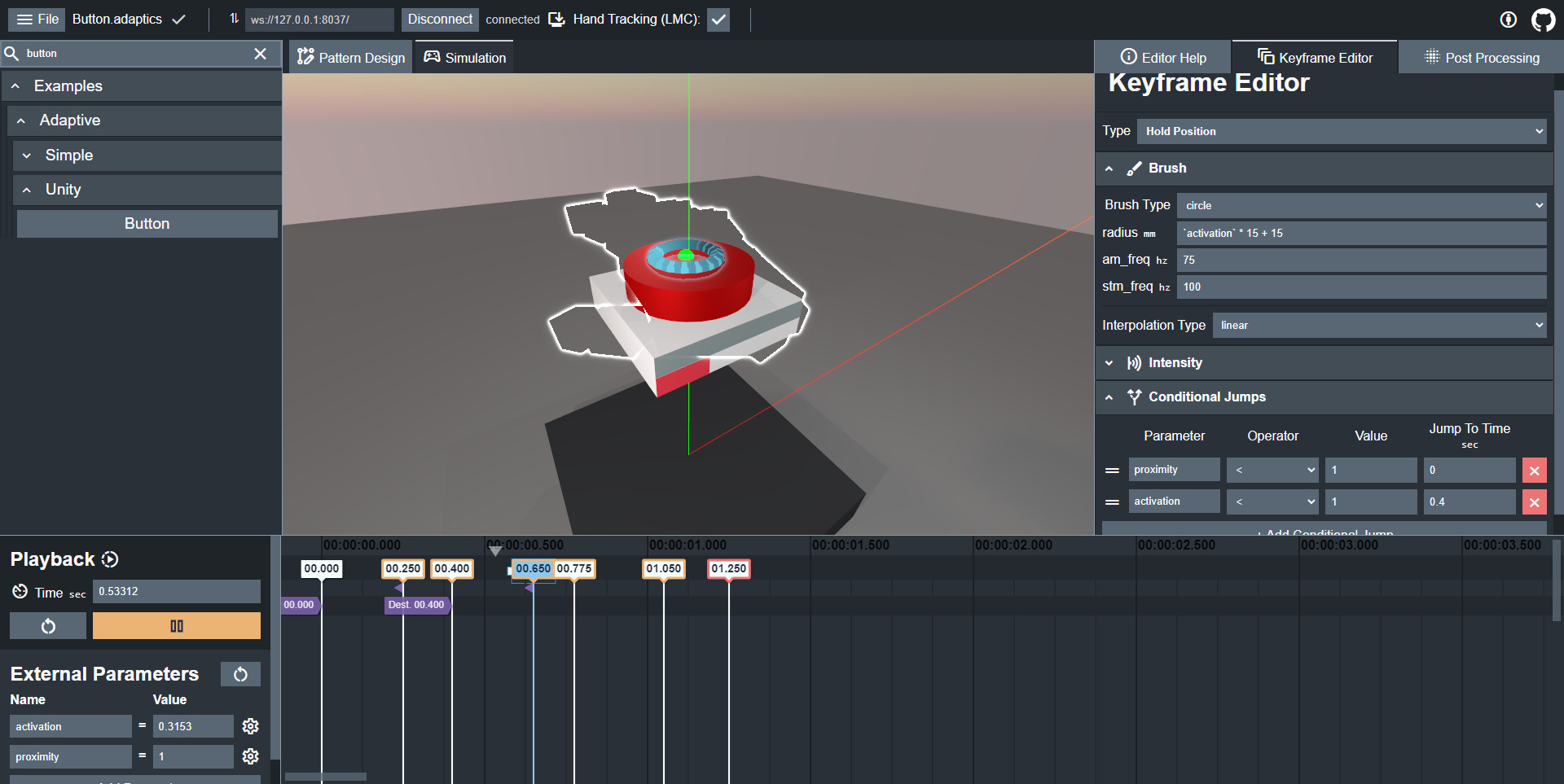

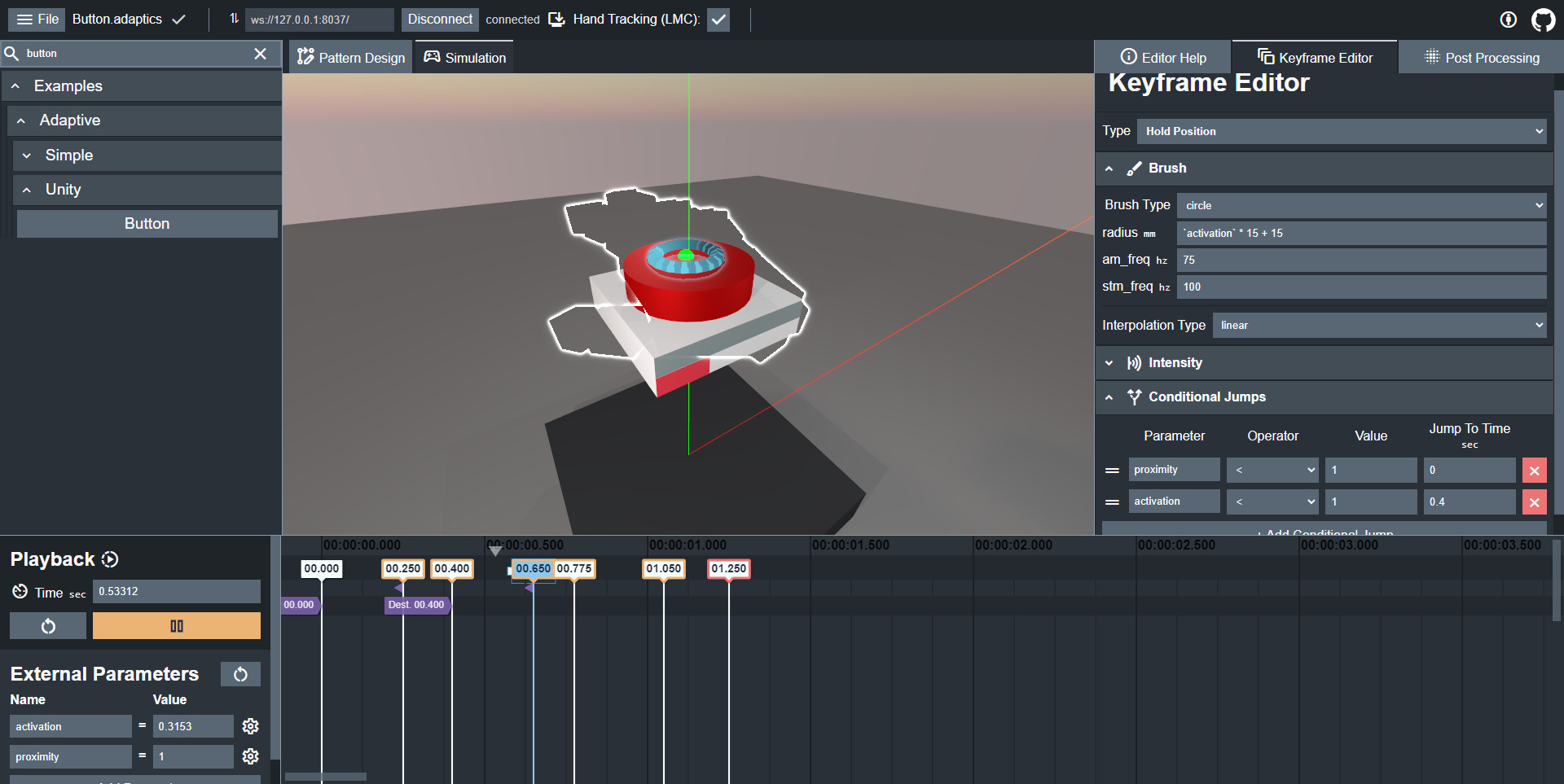

AdapTics

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2024 |
| Platform | Unity, Rust |
| Availability | Available |
| License | Open Source (GPL-3.0 and MPL-2.0) |
| Venue | ACM CHI |
| Intended Use Case | Prototyping, Haptic Augmentation |

| Hardware Information | |
|---|---|
| Category | Vibrotactile |
| Abstraction | Consumer |
| Device Names | Ultraleap STRATOS Explore |
| Device Template | No |
| Body Position | Hand |

| Interaction Information | |
|---|---|
| Driving Feature | Time, Action |
| Effect Localization | Target-centric |
| Non-Haptic Media | None |
| Iterative Playback | Yes |
| Design Approaches | Direct, Procedural, Library |
| UI Metaphors | Keyframe, Demonstration, Track |
| Storage | Custom JSON |
| Connectivity | API, WebSockets |

Additional Information

AdapTics is a toolkit for creating ultrasound tactons whose parameters change in response to other parameters or events. It consists of two components: the AdapTics Engine and the AdapTics Designer. The Designer, built on Unity, allows adaptive tactons to be created using elements commonly found in audio-video editors, particularly adaptive audio editors. Tactons can be created freely or in relation to a simulated hand. The Designer communicates over WebSockets with the Engine, which is responsible for rendering on the connected hardware. While only Ultraleap devices are supported as of writing, the Engine is designed to support future hardware. The Engine can also be used directly through API calls in Rust or C/C++.

To learn more about AdapTics, read the CHI 2024 paper or consult the AdapTics Engine and AdapTics Designer GitHub repositories.

Android API

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2008 |
| Platform | Android |
| Availability | Available |
| License | Open Source (Apache 2.0) |
| Venue | N/A |
| Intended Use Case | Haptic Augmentation |

| Hardware Information | |
|---|---|
| Category | Vibrotactile |
| Abstraction | Consumer |
| Device Names | Android |
| Device Template | No |
| Body Position | N/A |

| Interaction Information | |
|---|---|
| Driving Feature | Time |
| Effect Localization | Device-centric |
| Non-Haptic Media | None |
| Iterative Playback | N/A |
| Design Approaches | Direct, Procedural, Additive, Library |
| UI Metaphors | N/A |
| Storage | None |
| Connectivity | API |

Additional Information

The Android API consists of preset VibrationEffect assets and developer-added compositions of the “click” and “tick” effects. Waveforms can also be created by specifying a sequence of vibration durations, or a sequence of durations with associated amplitudes. Audio-coupled effects can also be generated using the HapticGenerator. There are significant differences in hardware and software support across Android devices and OS versions, even for basic features such as amplitude control.
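The two waveform forms can be pictured as parallel arrays, which is the shape `VibrationEffect.createWaveform` accepts: a durations-only pattern alternates off and on segments starting with off, while the second form pairs each duration with an amplitude from 0 to 255. The following Python sketch only models that data layout and is not Android code. The helper names are hypothetical, and using 255 for "on" segments is an assumption, since real devices apply their own default amplitude.

```python
def on_off_to_amplitudes(timings_ms, on_amplitude=255):
    """Expand a durations-only pattern (off, on, off, ...) into explicit amplitudes.

    on_amplitude=255 is an assumption standing in for the device default.
    """
    return [on_amplitude if i % 2 else 0 for i in range(len(timings_ms))]

def amplitude_at(timings_ms, amplitudes, t_ms):
    """Return the amplitude active at t_ms, or 0 once the waveform has ended."""
    if len(timings_ms) != len(amplitudes):
        raise ValueError("each duration needs exactly one amplitude")
    elapsed = 0
    for duration, amplitude in zip(timings_ms, amplitudes):
        elapsed += duration
        if t_ms < elapsed:
            return amplitude
    return 0

timings = [0, 100, 50, 200]            # ms: off 0, on 100, off 50, on 200
amps = on_off_to_amplitudes(timings)   # explicit amplitudes for the same pattern
total_ms = sum(timings)                # overall length of the waveform
```

On a device without amplitude control, only the on/off structure of such a pattern would be reproduced, which is one of the differences the paragraph above refers to.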

For more information, consult the Android API documentation and Android Open Source Project (AOSP).

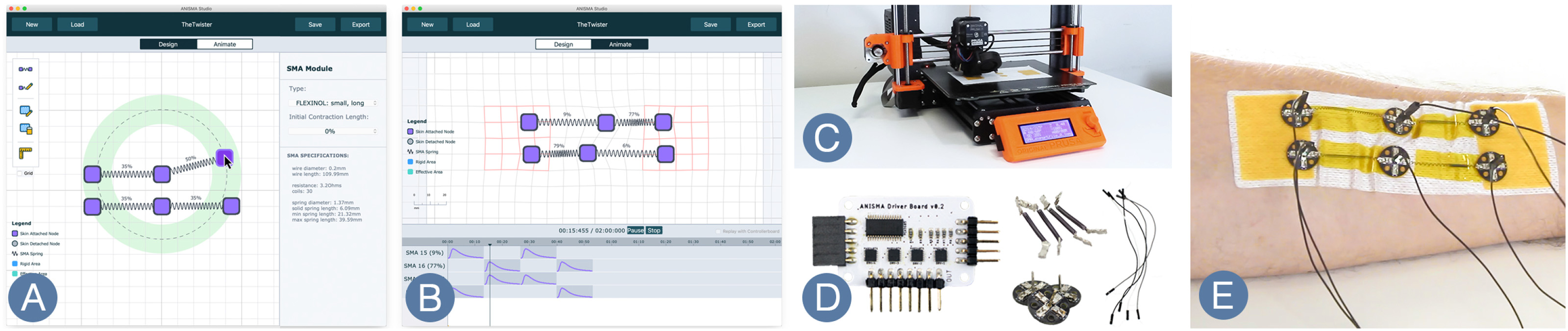

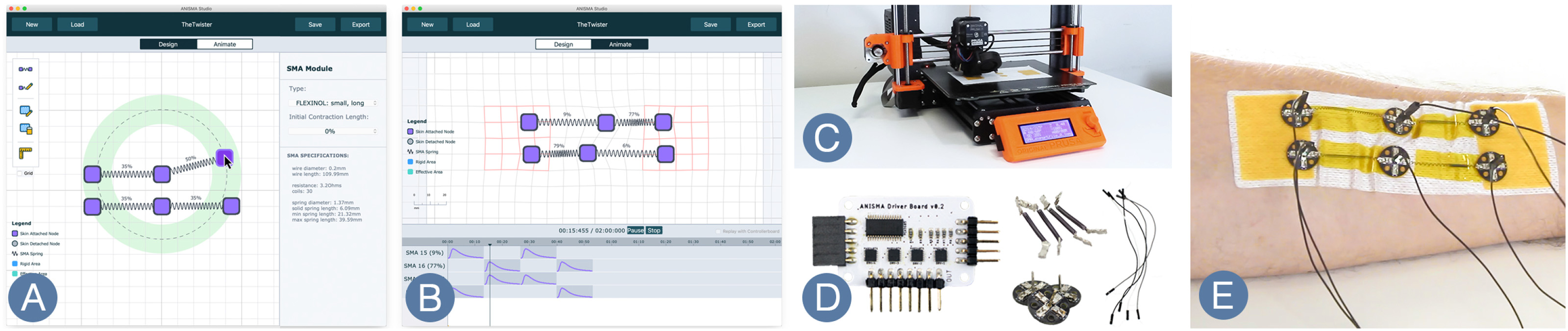

ANISMA

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2022 |
| Platform | Windows, macOS, Linux |
| Availability | Available |
| License | Open Source (GPL 3) |
| Venue | ACM ToCHI |
| Intended Use Case | Hardware Control |

| Hardware Information | |
|---|---|
| Category | Skin Stretch/Compression |
| Abstraction | Class |
| Device Names | Shape-Memory Alloy |
| Device Template | No |
| Body Position | N/A |

| Interaction Information | |
|---|---|
| Driving Feature | Action |
| Effect Localization | Location-aware |
| Non-Haptic Media | None |
| Iterative Playback | Yes |
| Design Approaches | Direct, Procedural, Library |
| UI Metaphors | Track, Keyframe, Demonstration |
| Storage | STL File |
| Connectivity | None |

Additional Information

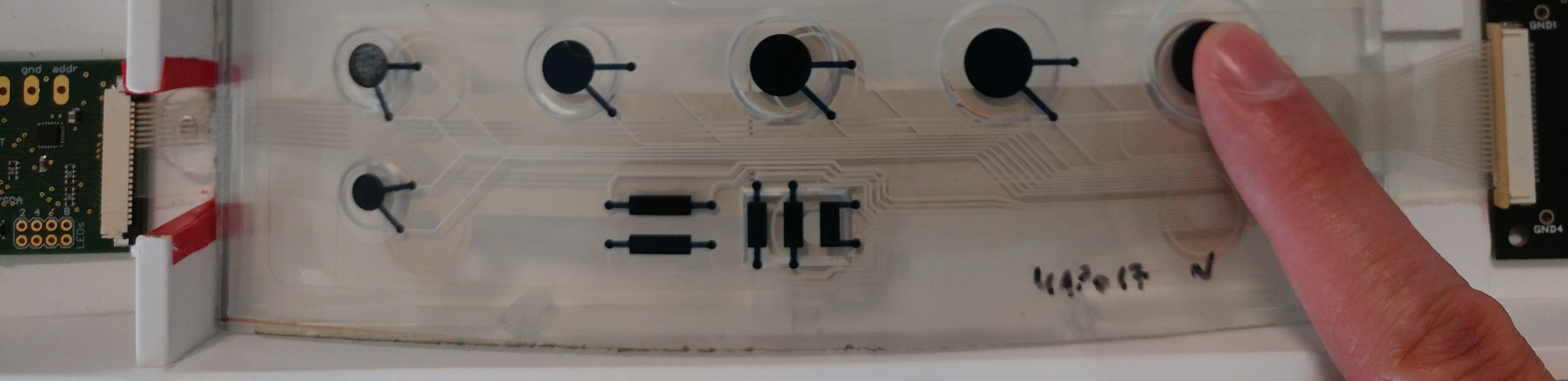

ANISMA is a toolkit to prototype wearable haptic devices using shape-memory alloys (SMAs). Users can position types of SMAs already present in the software between two nodes and simulate how networks of SMAs would behave on the skin during actuation. Nodes to which SMAs are attached can either be simulated as adhered to the skin of the wearer or free-floating. Once the layout is complete, ANISMA can be used to fabricate the design and to play back patterns on the actual hardware.

For more information, please consult the 2022 ACM ToCHI paper and the main GitHub repository.

Apple Notifications

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2013 |
| Platform | iOS |
| Availability | Available |
| License | Proprietary |
| Venue | N/A |
| Intended Use Case | Notifications |

| Hardware Information | |
|---|---|
| Category | Vibrotactile |
| Abstraction | Consumer |
| Device Names | iPhone |
| Device Template | No |
| Body Position | N/A |

| Interaction Information | |
|---|---|
| Driving Feature | Time |
| Effect Localization | Device-centric |
| Non-Haptic Media | None |
| Iterative Playback | Yes |
| Design Approaches | Procedural |
| UI Metaphors | Demonstration |
| Storage | None |
| Connectivity | None |

Additional Information

New vibration patterns for notifications can be added on supported devices through the settings menu. The user taps out the desired pattern on the touchscreen and can then play the recorded pattern back and save it for later use.

For more information, consult the Apple Support page.

Beadbox

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2015 |
| Platform | Windows |
| Availability | Available |
| License | Open Source (MIT) |
| Venue | UAHCI |
| Intended Use Case | Accessibility |

| Hardware Information | |
|---|---|
| Category | Vibrotactile |
| Abstraction | Class |
| Device Names | EmotiChair, Voice Coil |
| Device Template | No |
| Body Position | N/A |

| Interaction Information | |
|---|---|
| Driving Feature | Time |
| Effect Localization | Location-aware |
| Non-Haptic Media | None |
| Iterative Playback | Yes |
| Design Approaches | Direct, Procedural, Additive |
| UI Metaphors | Track, Keyframe, Music Notation |
| Storage | MIDI |
| Connectivity | None |

Additional Information

Beadbox allows users to place and connect beads across different tracks, representing different physical actuators. Each bead provides a visual representation of vibration frequency and intensity. Duration can be controlled by forming a connection across two beads. If actuator, duration, or frequency changes between the beads, Beadbox will move between these values during playback.

For more information about Beadbox, consult the UAHCI 2016 paper and the GitHub repository.

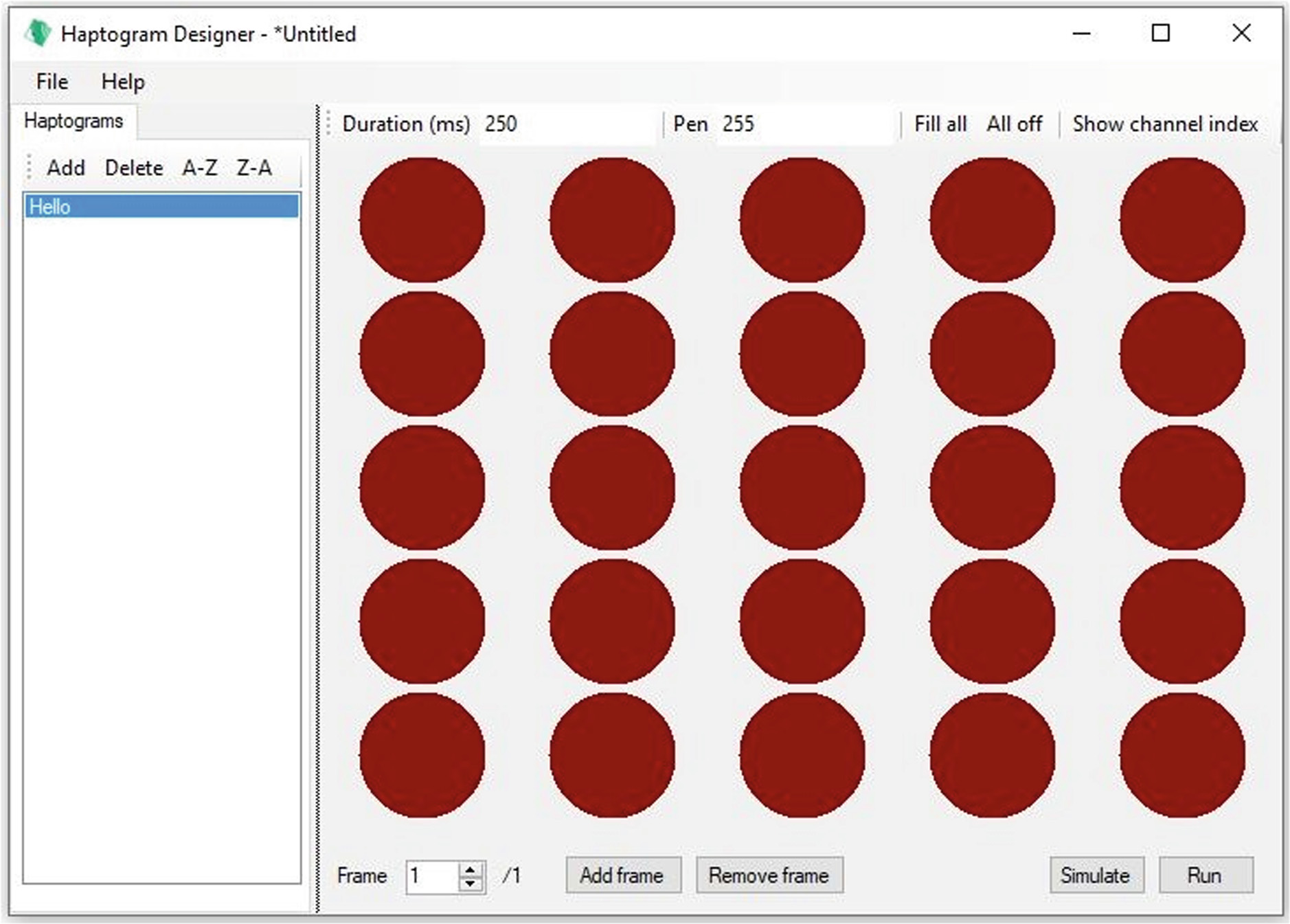

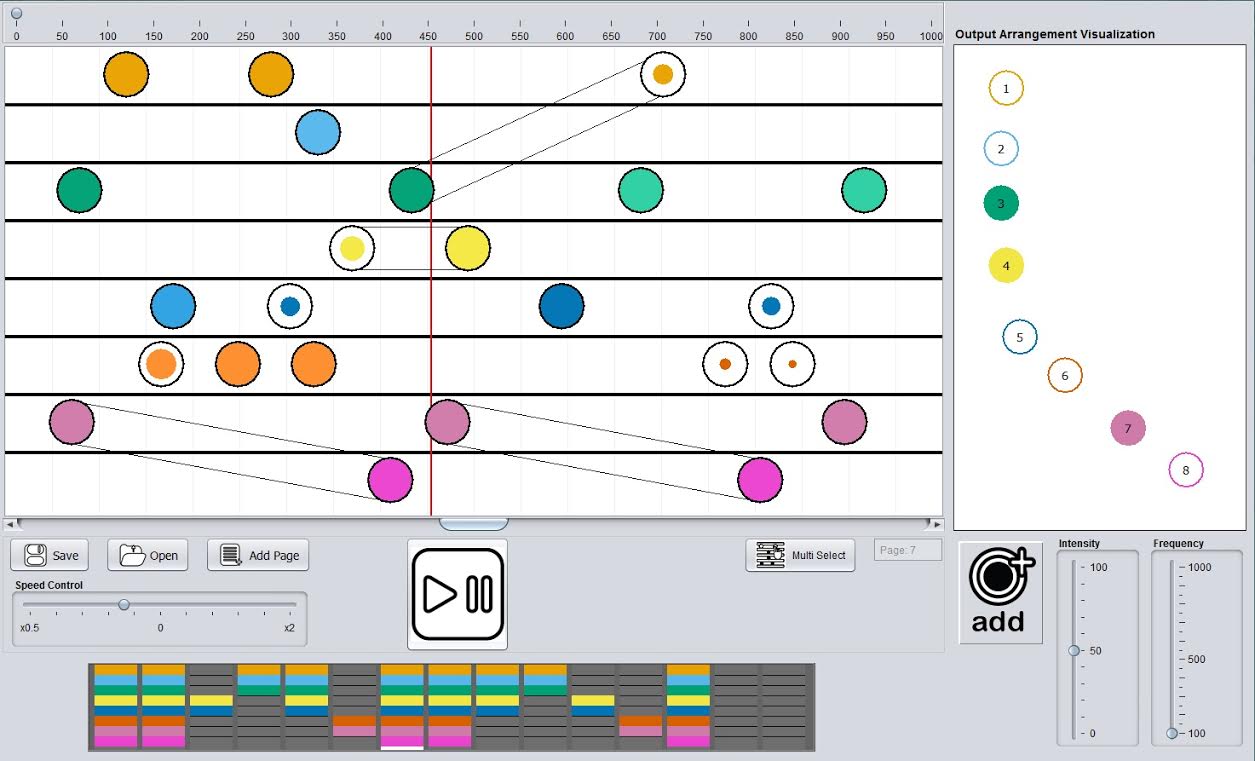

bHaptics Designer

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2016 |
| Platform | Web |
| Availability | Available |
| License | Proprietary |
| Venue | N/A |
| Intended Use Case | Virtual Reality, Gaming |

| Hardware Information | |
|---|---|
| Category | Vibrotactile |
| Abstraction | Consumer |
| Device Names | bHaptics Devices |
| Device Template | No |
| Body Position | Torso, Arm, Head, Hand, Foot |

| Interaction Information | |
|---|---|
| Driving Feature | Time |
| Effect Localization | Location-aware |
| Non-Haptic Media | None |
| Iterative Playback | Yes |
| Design Approaches | Direct, Procedural, Additive, Library |
| UI Metaphors | Track, Keyframe, Demonstration |
| Storage | Internal |
| Connectivity | API |

Additional Information

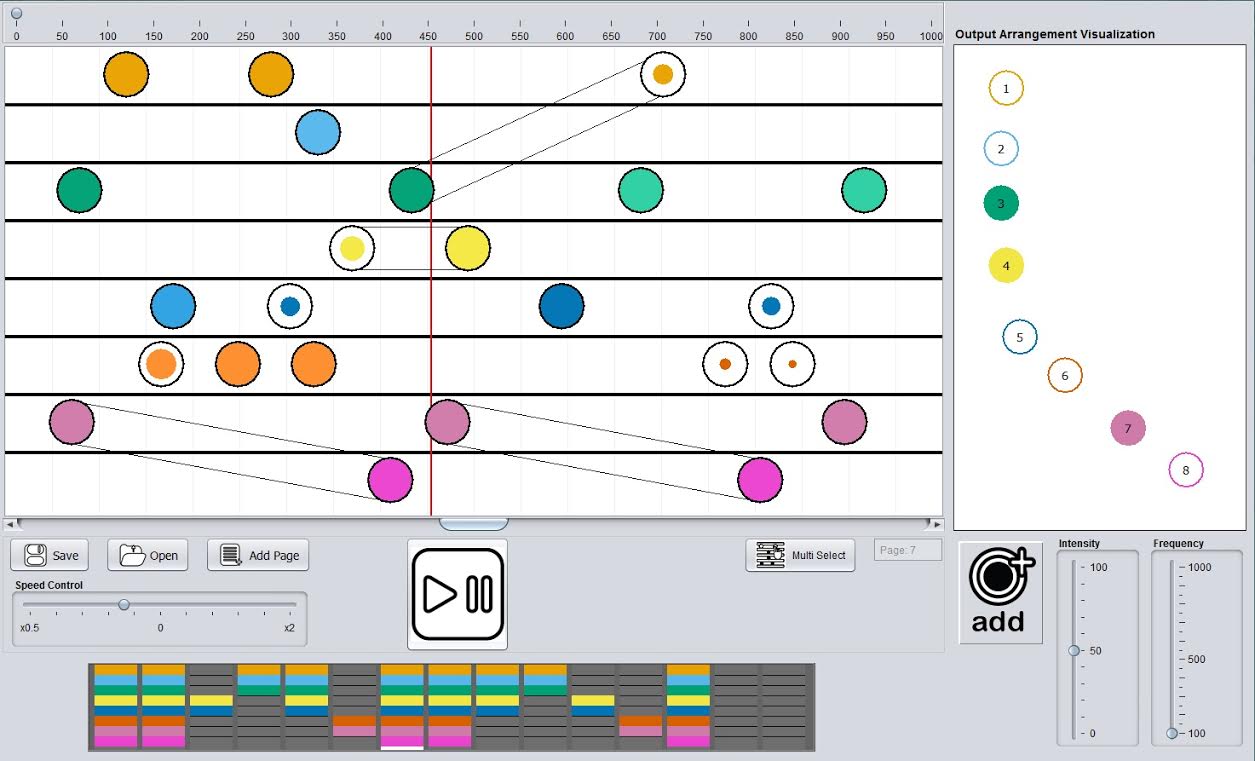

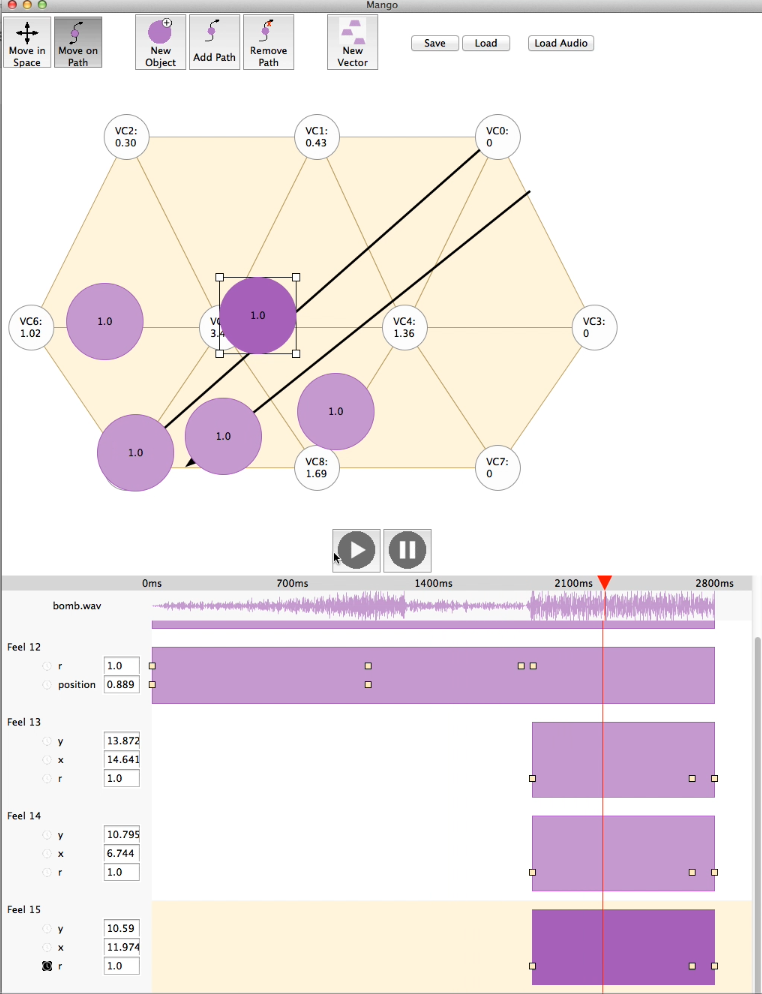

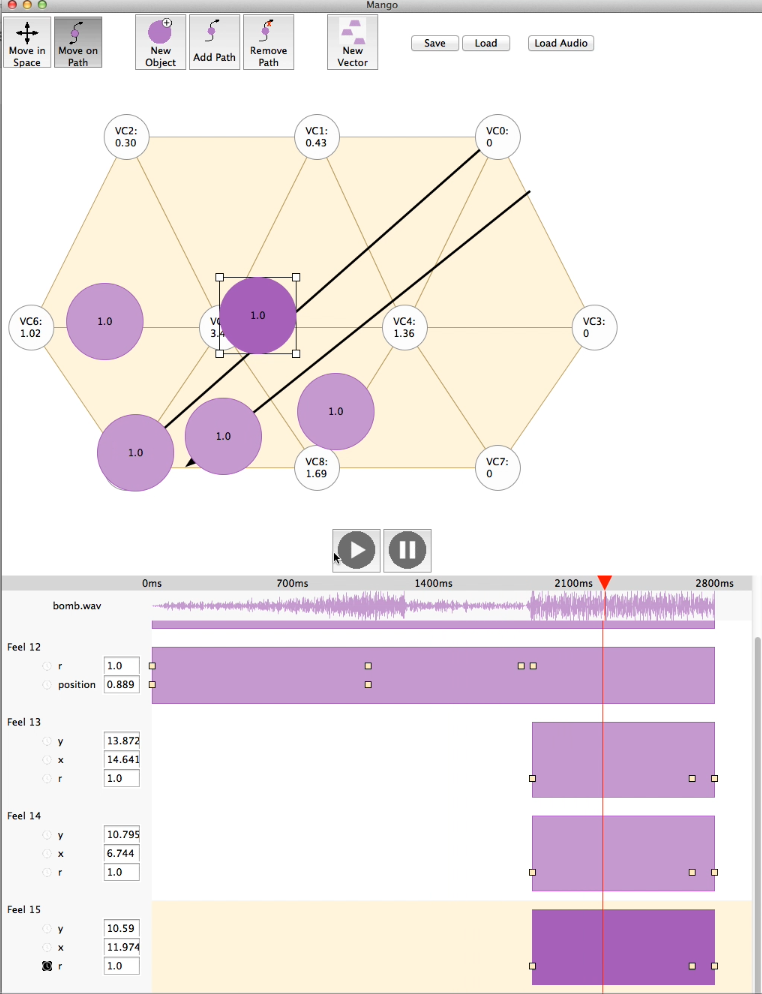

The bHaptics Designer allows for tactile patterns and animations to be created on the various bHaptics products worn on the body. Points of vibration can be set to move across the grid of the selected device with intensity changing from waypoint to waypoint. Individual paths can be placed into tracks on a timeline to create layered, more complex effects.
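A moving point of vibration can be thought of as a path of waypoints that is sampled over time and then snapped onto the device's motor grid. The Python sketch below illustrates that idea only; it does not reflect the bHaptics Designer's internals or file format. The waypoint tuple layout, the normalized coordinates, the 4 x 5 grid, and the nearest-motor mapping are all assumptions.

```python
def point_at(waypoints, t):
    """Interpolate (x, y, intensity) along waypoints given as (time_s, x, y, intensity).

    Waypoints must be sorted by strictly increasing time.
    """
    if t <= waypoints[0][0]:
        return waypoints[0][1:]
    for (t0, *a), (t1, *b) in zip(waypoints, waypoints[1:]):
        if t0 <= t <= t1:
            u = (t - t0) / (t1 - t0)    # fraction of the way from a to b
            return tuple(p + (q - p) * u for p, q in zip(a, b))
    return waypoints[-1][1:]

def nearest_motor(x, y, cols, rows):
    """Map normalized coordinates (0..1) to a (col, row) index on a cols x rows grid."""
    col = min(cols - 1, max(0, round(x * (cols - 1))))
    row = min(rows - 1, max(0, round(y * (rows - 1))))
    return col, row

# Hypothetical path: corner to corner over one second, intensity rising from 0.2 to 1.0.
path = [(0.0, 0.0, 0.0, 0.2), (1.0, 1.0, 1.0, 1.0)]

x, y, intensity = point_at(path, 0.5)
motor = nearest_motor(x, y, cols=4, rows=5)
```

Layering several such paths, as tracks on a timeline do, would amount to evaluating each path at the same time and combining the per-motor intensities.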

For more information, consult the bHaptics Designer website.

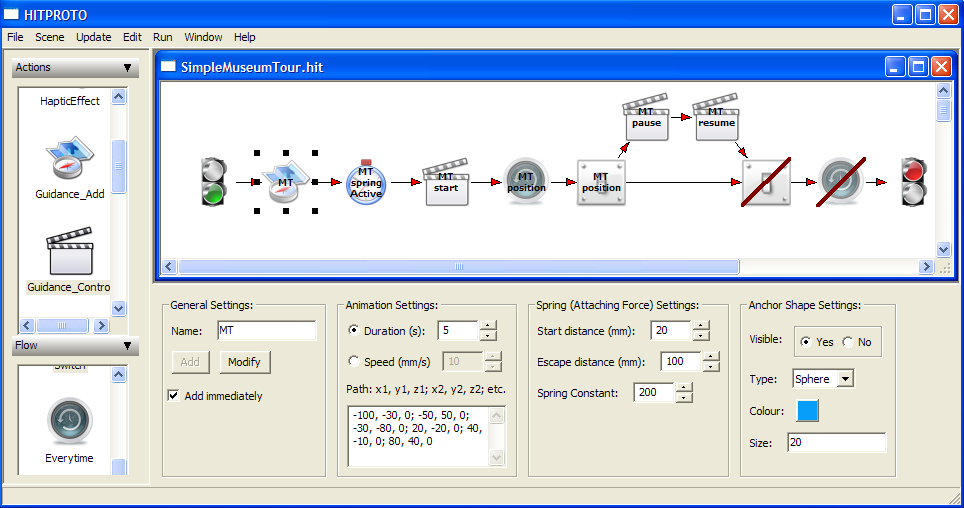

Cha et al. Authoring

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2007 |
| Platform | Windows |
| Availability | Unavailable |
| License | Unknown |
| Venue | IEEE WHC |
| Intended Use Case | Haptic Augmentation |

| Hardware Information | |
|---|---|
| Category | Vibrotactile, Force Feedback |
| Abstraction | Bespoke |
| Device Names | 76-Tactor Glove, Phantom |
| Device Template | No |
| Body Position | Hand |

| Interaction Information | |
|---|---|
| Driving Feature | Time |
| Effect Localization | Location-aware |
| Non-Haptic Media | Visual |
| Iterative Playback | Yes |
| Design Approaches | Direct, Procedural, Additive |
| UI Metaphors | Keyframe, Demonstration |
| Storage | MPEG-4 BIFS |
| Connectivity | None |

Additional Information

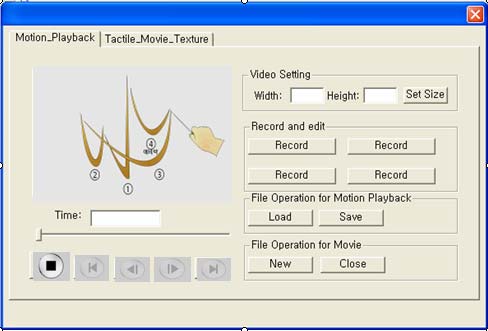

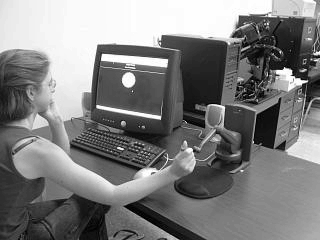

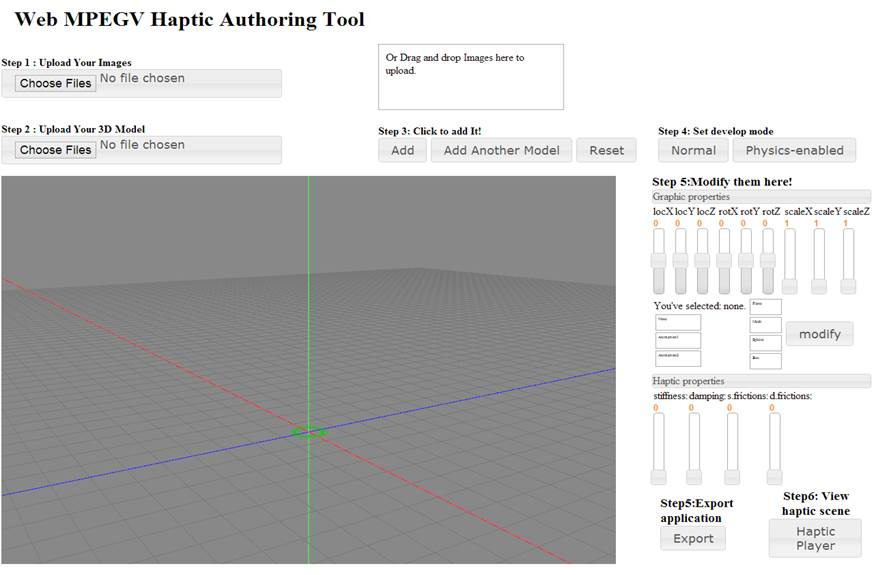

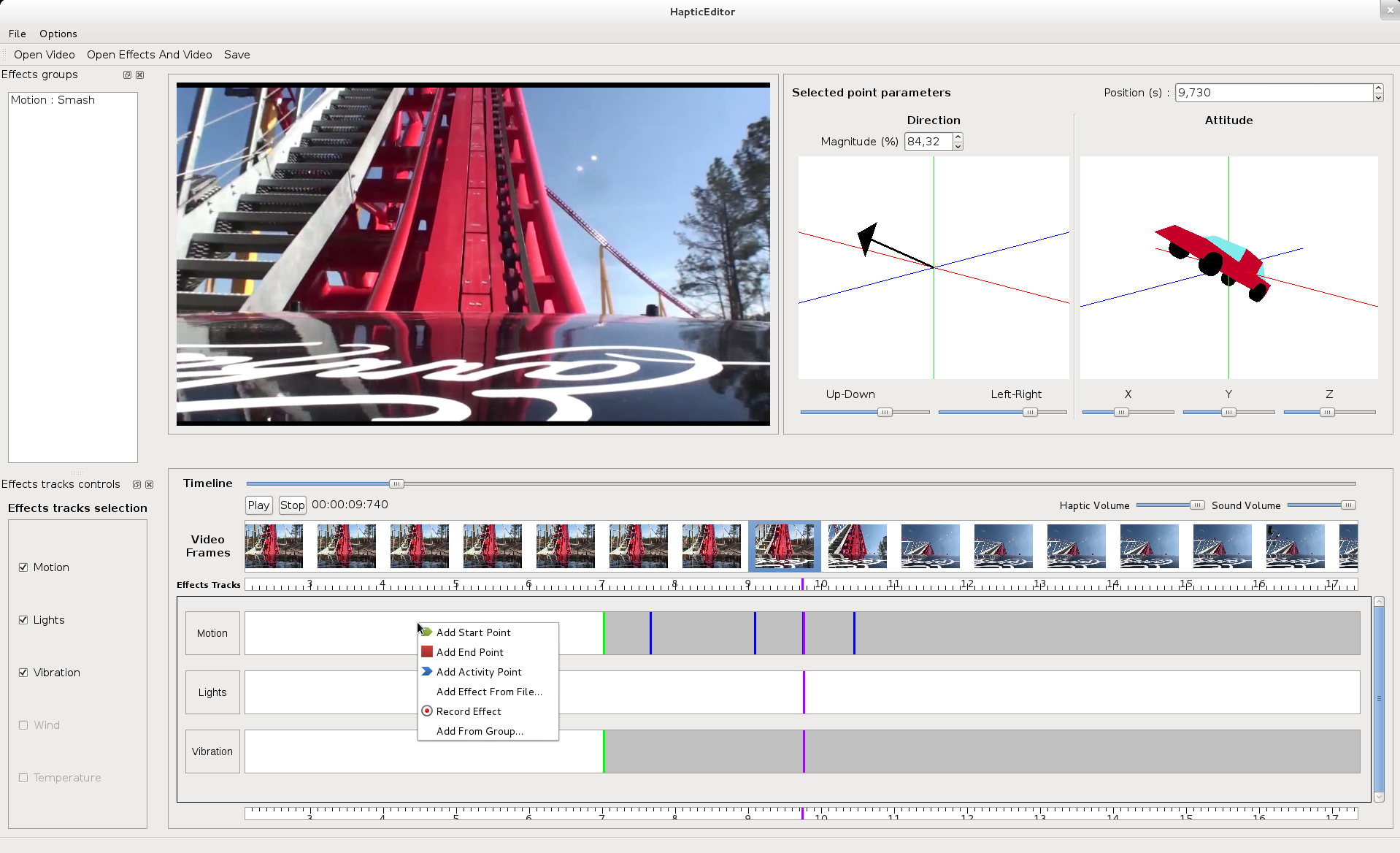

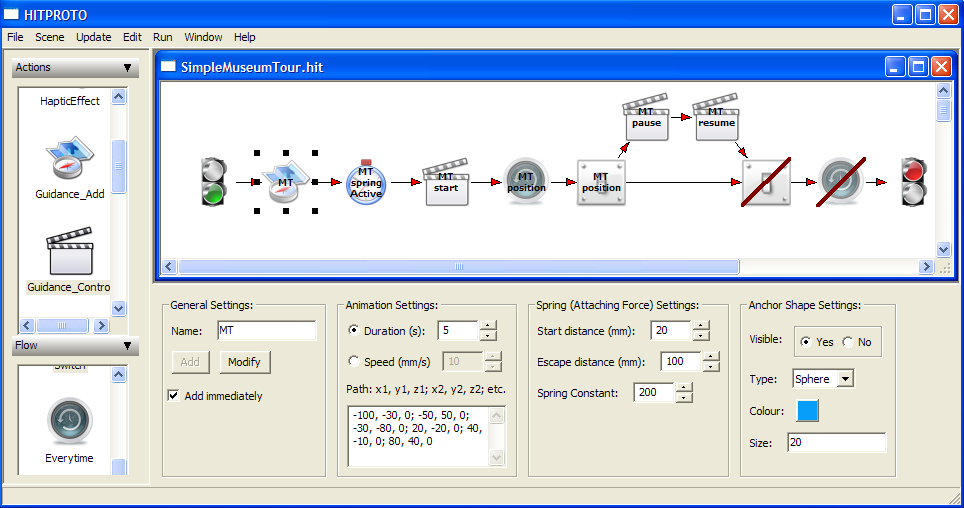

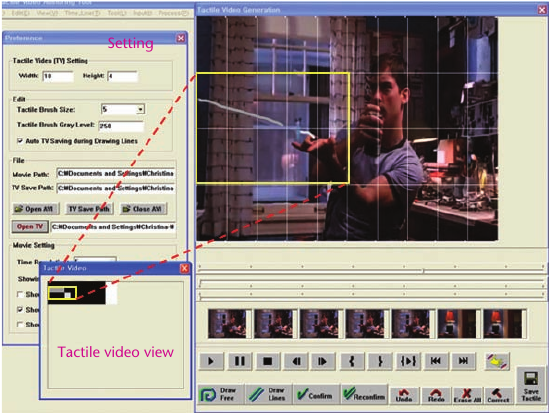

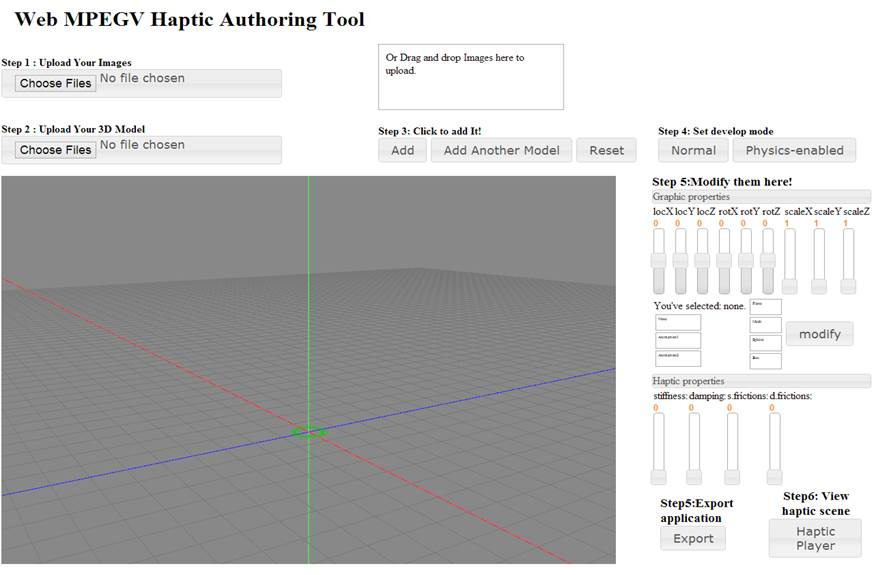

The authoring tool described by Cha et al. is meant to create interactions to be broadcast using MPEG-4 Binary Format for Scenes (BIFS). Haptic effects are represented through different “nodes” that support moving a force-feedback device along a trajectory, guiding a force-feedback device to a specific position, and triggering vibration on a tactile array. The tool itself supports recording motion on a force-feedback device for use in these nodes and provides an interface for creating vibration effects and aligning them with pre-existing video files.

For more information, consult the WHC’07 paper.

CHAI3D

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2003 |
| Platform | Windows, macOS, Linux |
| Availability | Available |
| License | Open Source (BSD 3-Clause) |
| Venue | EuroHaptics |
| Intended Use Case | Simulation |

| Hardware Information | |
|---|---|
| Category | Force Feedback |
| Abstraction | Consumer |
| Device Names | omega.x, delta.x, sigma.x, Phantom, Novint Falcon, Razer Hydra |
| Device Template | Yes |
| Body Position | N/A |

| Interaction Information | |
|---|---|
| Driving Feature | Action |
| Effect Localization | Target-centric |
| Non-Haptic Media | Visual, Audio |
| Iterative Playback | N/A |
| Design Approaches | Direct, Procedural, Library |
| UI Metaphors | N/A |
| Storage | None |
| Connectivity | API |

Additional Information

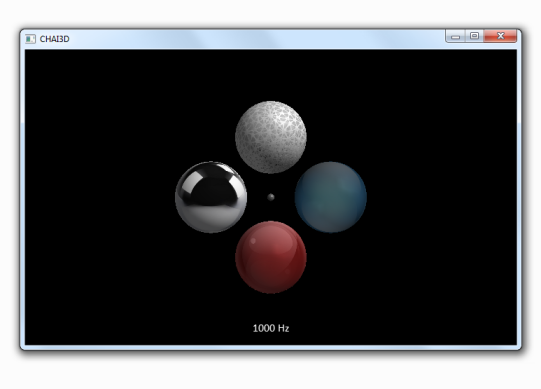

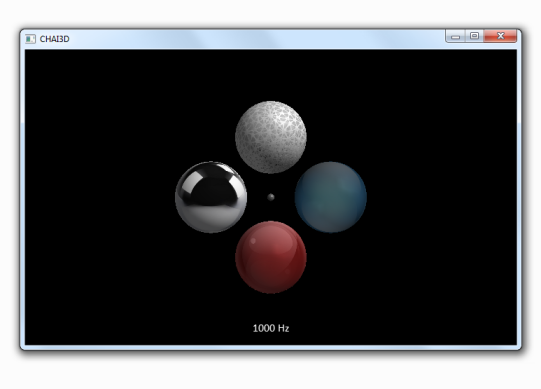

CHAI3D is a C++ framework for 3D haptics. Users can initialize a scene, populate it with virtual objects, and set the properties of those objects using built-in haptic effects, such as “viscosity” and “magnet”. It also uses OpenGL for graphics rendering and OpenAL for audio effects. CHAI3D can be extended to support additional haptic devices using the included device template.
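To give a sense of what effects such as “viscosity” and “magnet” compute, the sketch below uses common textbook formulations: a drag force opposing the tool's velocity and an attraction toward a point that fades with distance. It is written in Python for brevity and is not CHAI3D's C++ implementation. The function names, parameters, units, and the linear falloff of the magnet are assumptions.

```python
import math

def viscosity_force(velocity, damping):
    """Viscous drag that opposes the tool's velocity: F = -b * v."""
    return tuple(-damping * v for v in velocity)

def magnet_force(tool_pos, magnet_pos, max_force, max_distance):
    """Pull toward magnet_pos, fading linearly to zero at max_distance (assumed falloff)."""
    offset = tuple(m - p for m, p in zip(magnet_pos, tool_pos))
    dist = math.sqrt(sum(c * c for c in offset))
    if dist == 0.0 or dist >= max_distance:
        return (0.0, 0.0, 0.0)
    # Magnitude max_force * (1 - dist / max_distance), directed along the unit offset.
    scale = max_force * (1.0 - dist / max_distance) / dist
    return tuple(scale * c for c in offset)

# Effects attached to the same object are summed into one force command.
total = tuple(
    a + b
    for a, b in zip(
        viscosity_force((0.1, 0.0, 0.0), damping=2.0),
        magnet_force((0.0, 0.0, 0.0), (0.0, 0.05, 0.0), max_force=3.0, max_distance=0.1),
    )
)
```

In a haptic loop, a force like `total` would be recomputed at each servo tick from the device's current position and velocity.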

For more information on CHAI3D, please consult the website, the documentation, and the EuroHaptics abstract.

Cobity

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2022 |
| Platform | Unity |
| Availability | Available |
| License | Open Source (MIT) |
| Venue | Mensch und Computer |
| Intended Use Case | Virtual Reality |

| Hardware Information | |
|---|---|
| Category | Force Feedback |
| Abstraction | Consumer |
| Device Names | Kinova Gen3 |
| Device Template | No |
| Body Position | N/A |

| Interaction Information | |
|---|---|
| Driving Feature | Action |
| Effect Localization | Target-centric |
| Non-Haptic Media | None |
| Iterative Playback | Yes |
| Design Approaches | Direct, Procedural |
| UI Metaphors | None |
| Storage | None |
| Connectivity | None |

Additional Information

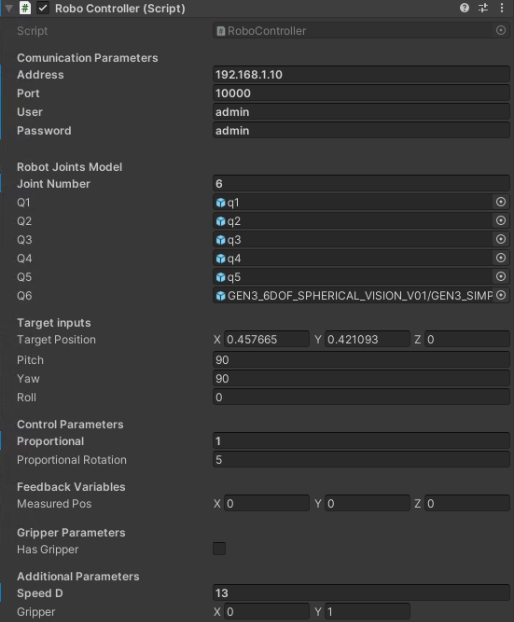

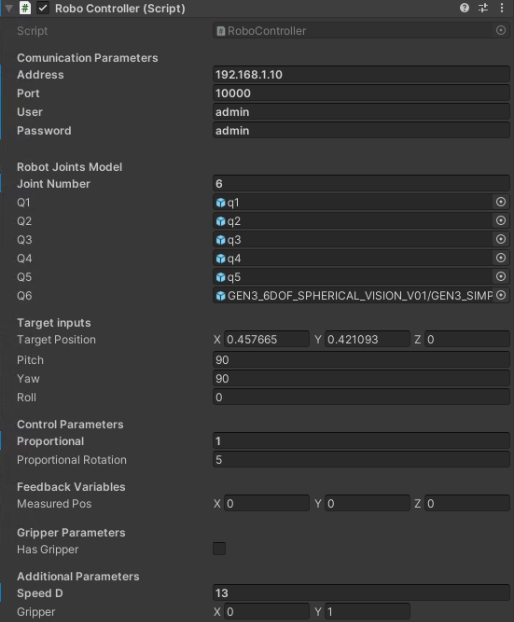

Cobity is a Unity plugin for controlling a cobot in VR. The robot’s end effector and position tracking parameters can be modified within the plugin. The end effector can be moved in response to user movements, e.g., by hand tracking, and can be bound to the position of a virtual object in the scene.

For more information on Cobity, please consult the MuC’22 paper or the GitHub repository.

Component-based Haptic Authoring Tool

Tool Summary

| Metadata | |
|---|---|
| Release Year | 2015 |
| Platform | Unity |
| Availability | Unavailable |
| License | Unknown |
| Venue | GRAPP |
| Intended Use Case | Haptic Augmentation |

| Hardware Information | |
|---|---|
| Category | Force Feedback |
| Abstraction | Consumer |
| Device Names | Novint Falcon |
| Device Template | No |
| Body Position | N/A |

| Interaction Information | |
|---|---|
| Driving Feature | Action |
| Effect Localization | Target-centric |
| Non-Haptic Media | Audio, Visual |
| Iterative Playback | Yes |
| Design Approaches | Library |
| UI Metaphors | None |
| Storage | None |
| Connectivity | None |

Additional Information

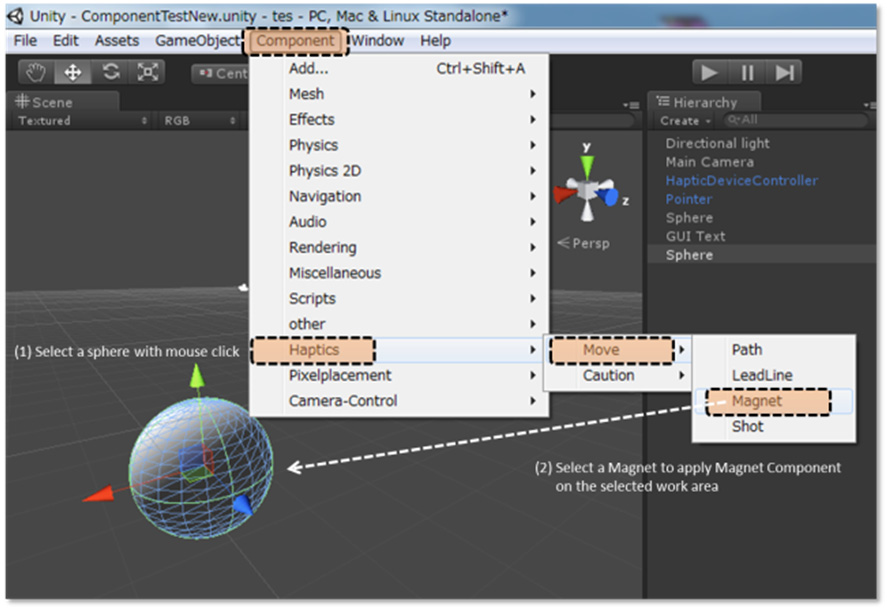

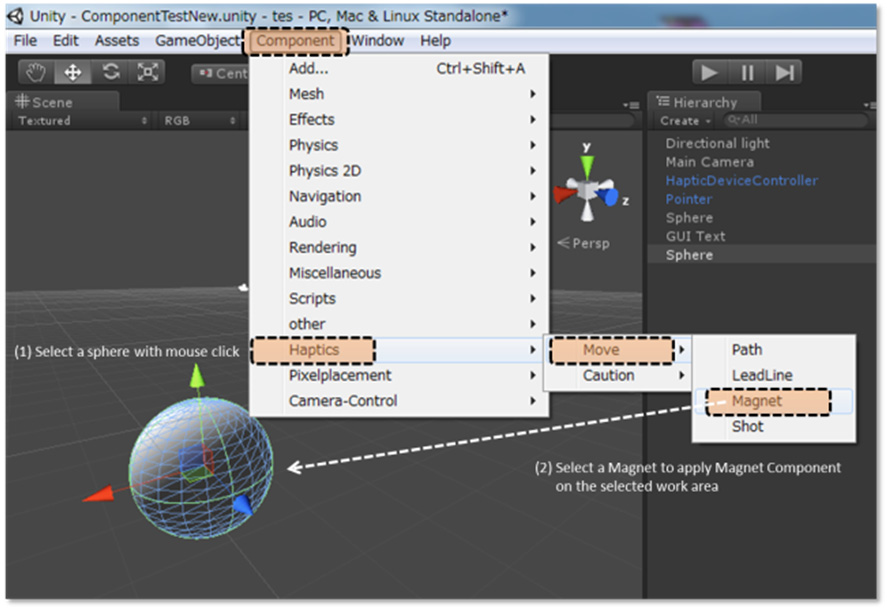

The component-based haptic authoring tool extends Unity to allow for set haptic effects, such as magnetism or viscosity, to be added to elements in the scene. These elements may already have visual or audio properties. The aim of the tool is to decrease the difficulty of adding haptic interaction to an experience.

For more information, consult the 2015 Computer Graphics Theory and Applications paper.

Compressables Haptic Designer

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2021 |

| Platformⓘ The OS or software framework needed to run the tool. | Web |

| Availabilityⓘ If the tool can be obtained by the public. | Available |

| Licenseⓘ Tye type of license applied to the tool. | Open Source (MIT) |

| Venueⓘ The venue(s) for publications. | ACM DIS |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Hardware Control |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Skin Stretch/Compression |

| Abstractionⓘ How broad the type of hardware support is for a tool.

| Bespoke |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Compressables |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | Head, Hand, Arm, Leg, Torso |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time, Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Device-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Demonstration |

| Storageⓘ How data is stored for import/export or internally to the software. | Internal |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | None |

Additional Information

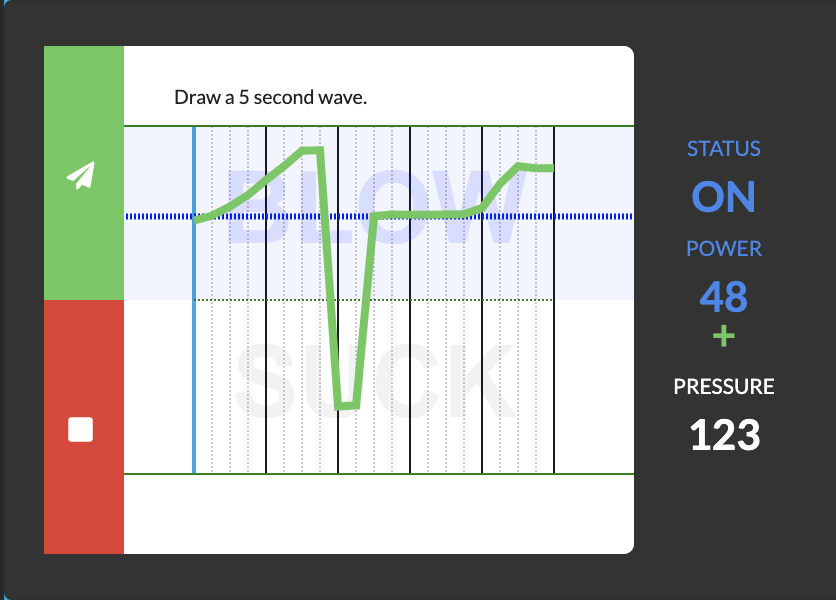

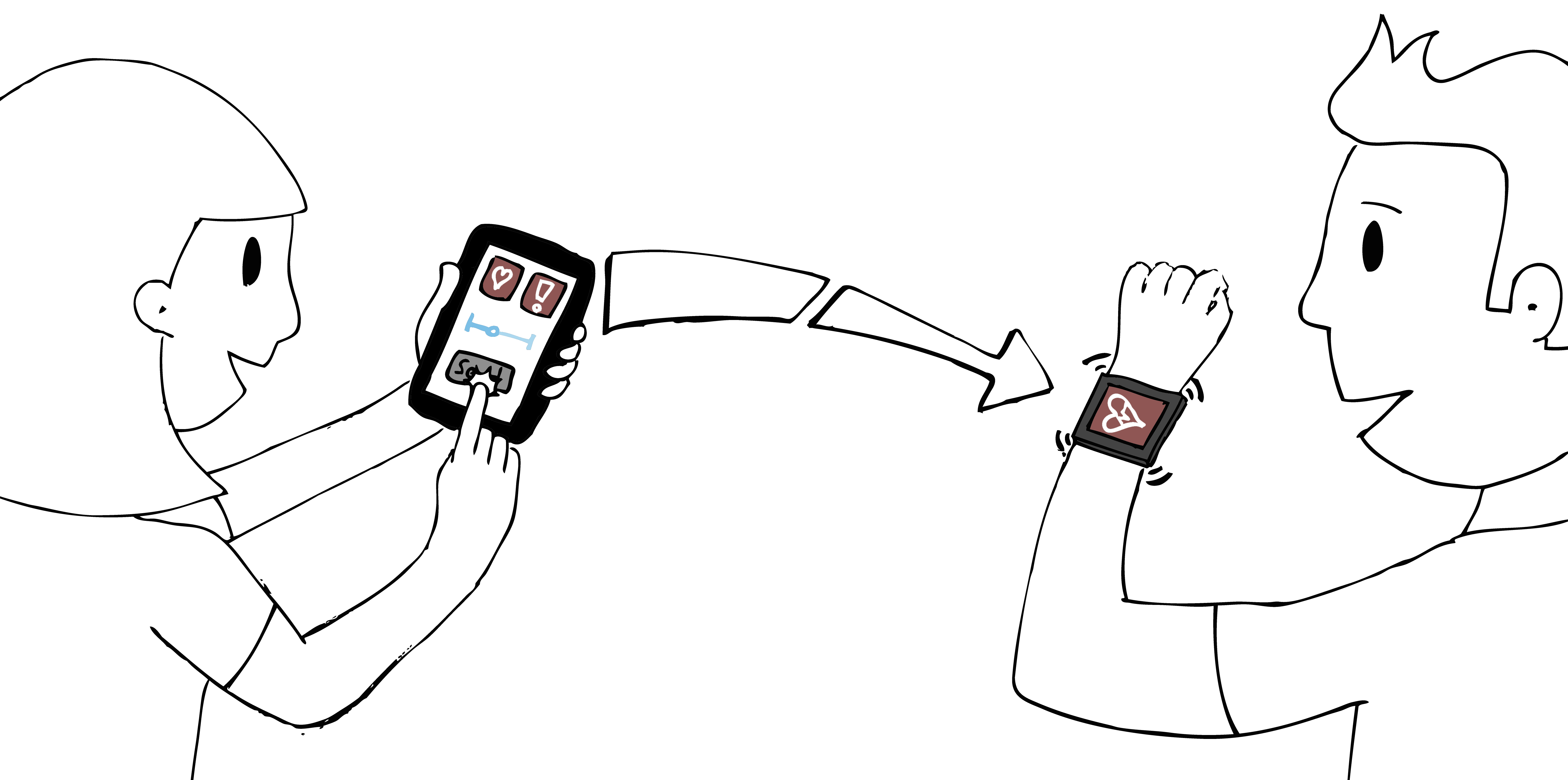

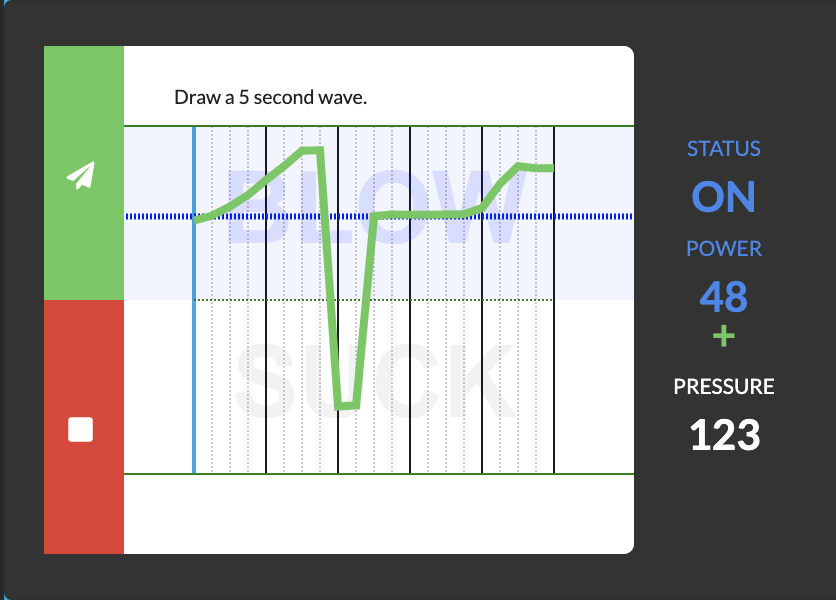

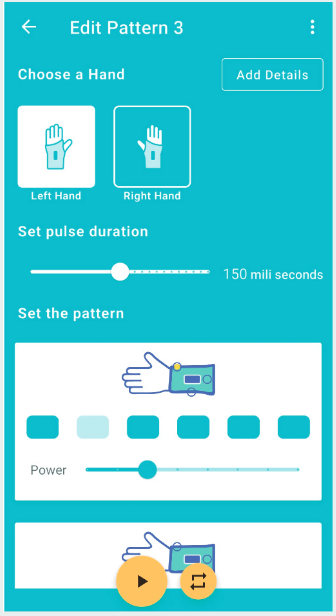

The Compressables Haptic Designer is a web app for controlling the Compressables family of pneumatic wearables. Motor power limits can be set through the app, and gestures in the user interface allow a user to control compression and decompression in real time. Time-based effects can also be created and triggered by certain physical gestures.

For more information on Compressables or the Compressables Haptic Designer, please consult the DIS’21 paper or the GitHub repository. The included graphic by S. Endow, H. Moradi, A. Srivastava, E.G. Noya, and C. Torres is licensed under CC BY 4.0.

D-BOX SDK

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2008 |

| Platformⓘ The OS or software framework needed to run the tool. | Windows |

| Availabilityⓘ If the tool can be obtained by the public. | Available |

| Licenseⓘ The type of license applied to the tool. | Proprietary |

| Venueⓘ The venue(s) for publications. | N/A |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Simulation, Gaming, Haptic Augmentation |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | D-BOX |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Location-aware |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | N/A |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | N/A |

| Storageⓘ How data is stored for import/export or internally to the software. | None |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | API |

Additional Information

The D-BOX LiveMotion SDK is used to create motion effects on a D-BOX chair in response to events, such as those in a simulation or game. Telemetry information concerning the user’s avatar, vehicles, or surrounding environment must be sent when updates occur. These data are used by a custom-built D-BOX Motion System to create haptic effects with limited latency between events occurring in the virtual environment and a response being felt.

For more information, consult the D-BOX website.

DIMPLE

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2007 |

| Platformⓘ The OS or software framework needed to run the tool. | Windows, macOS, Linux |

| Availabilityⓘ If the tool can be obtained by the public. | Available |

| Licenseⓘ The type of license applied to the tool. | Open Source (GPL 2) |

| Venueⓘ The venue(s) for publications. | NIME, Interacting with Computers |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Simulation, Music |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | omega.x, delta.x, sigma.x, Phantom, Novint Falcon, Razer Hydra |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Location-aware |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | Visual, Audio |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | N/A |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | N/A |

| Storageⓘ How data is stored for import/export or internally to the software. | None |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | Open Sound Control |

Additional Information

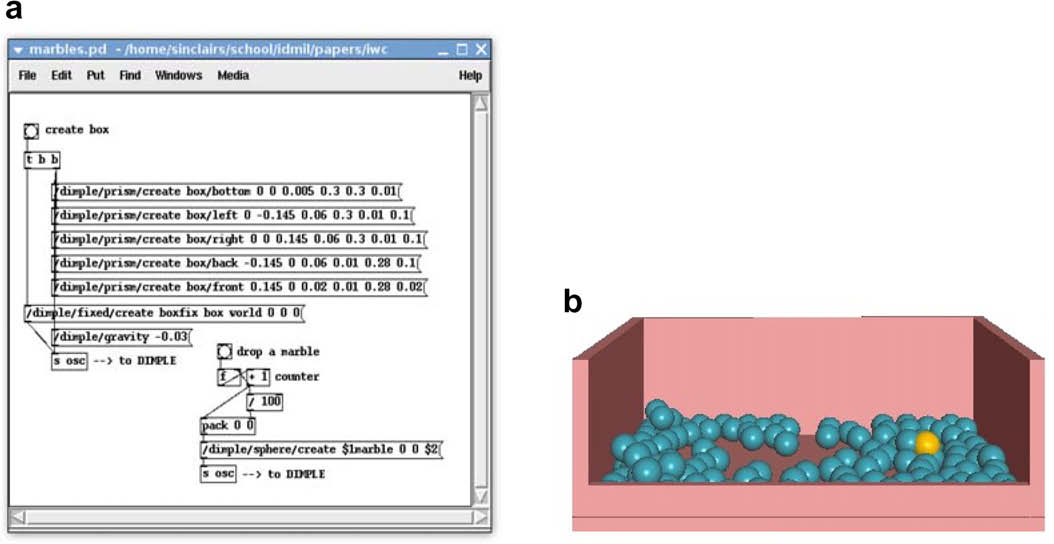

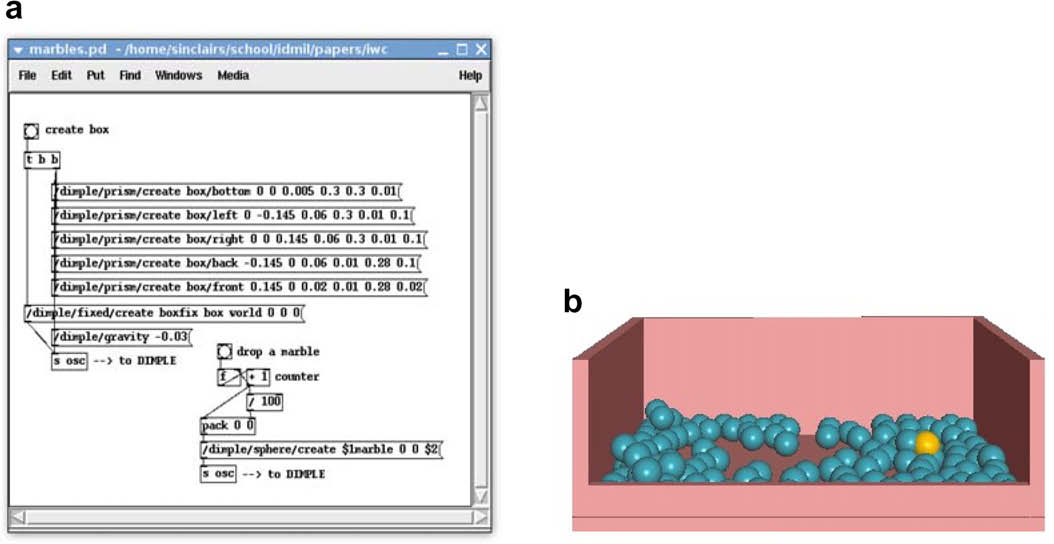

DIMPLE is a framework that connects visual, audio, and haptic simulations of a scene using Open Sound Control (OSC). Haptics support is provided via CHAI3D. A client, such as Pure Data, constructs scenes over OSC, and DIMPLE creates corresponding graphical and haptic representations of them using CHAI3D, ODE, and GLUT. The user can then connect data from events in these scenes (e.g., an object’s motion) to the audio synthesis environment of their choice.
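As a rough illustration of the client side of this exchange, the sketch below encodes an OSC 1.0 message using only the Python standard library and provides a helper to send it over UDP. The address `/example/sphere/create`, the object name, the host, and the port are hypothetical placeholders, not DIMPLE's documented message set; the real addresses should be taken from the DIMPLE documentation.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, *args) -> bytes:
    """Build an OSC 1.0 message from int, float, and str arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, str):
            type_tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(type_tags) + payload


def send_udp(packet: bytes, host: str, port: int) -> None:
    """Send one OSC packet as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# Hypothetical address and arguments: an object name followed by x, y, z.
packet = osc_message("/example/sphere/create", "ball", 0.0, 0.0, 0.1)
# send_udp(packet, "127.0.0.1", 7774)  # host and port are assumptions
```

In practice a client such as Pure Data would build these messages itself; the encoder is shown only to make the wire format concrete.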

For more information on DIMPLE, please consult the NIME’07 paper, the 2009 Interacting with Computers article, and the GitHub repository.

DOLPHIN

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2021 |

| Platformⓘ The OS or software framework needed to run the tool. | Qt |

| Availabilityⓘ If the tool can be obtained by the public. | Available |

| Licenseⓘ The type of license applied to the tool. | Open Source (GPL 3) |

| Venueⓘ The venue(s) for publications. | ACM Symposium on Applied Perception |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Psychophysics |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Vibrotactile |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Ultraleap STRATOS Explore |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | Yes |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | Hand |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Location-aware |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | None |

| Storageⓘ How data is stored for import/export or internally to the software. | CSV |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | None |

Additional Information

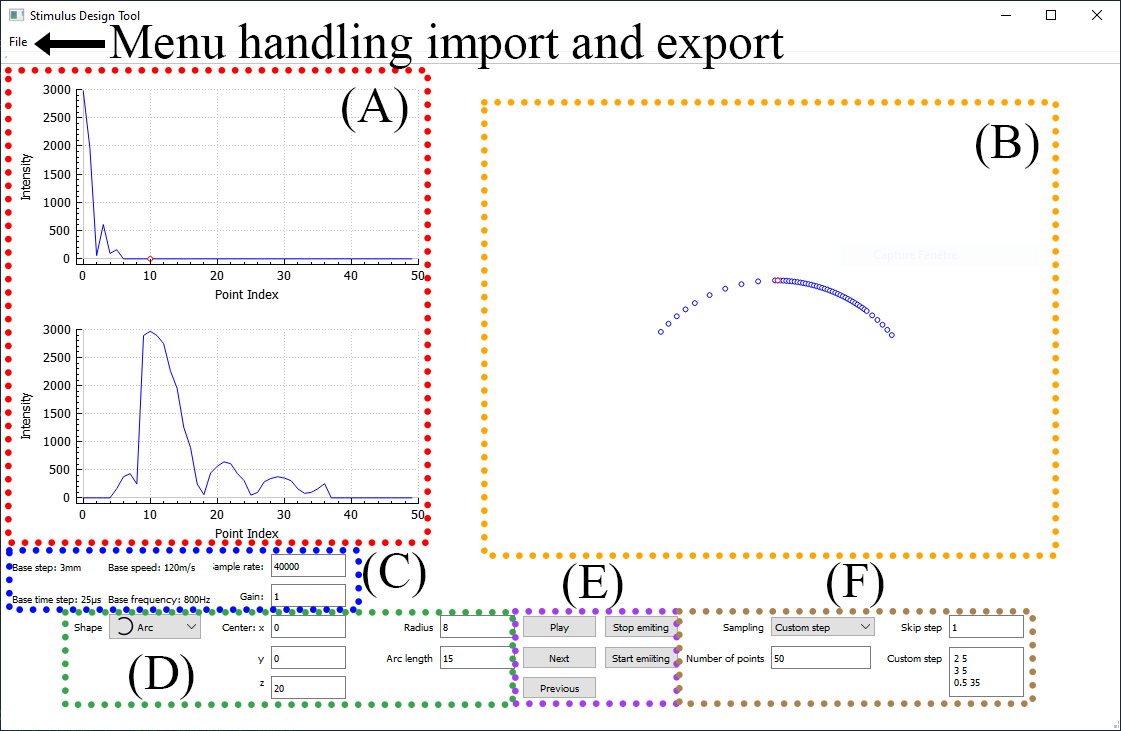

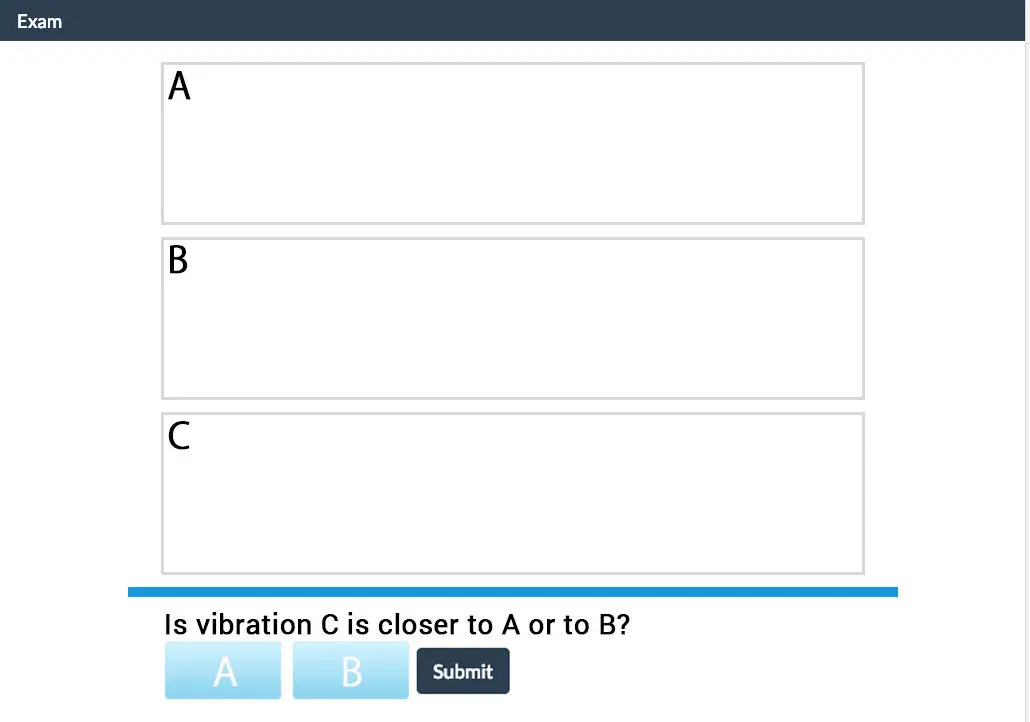

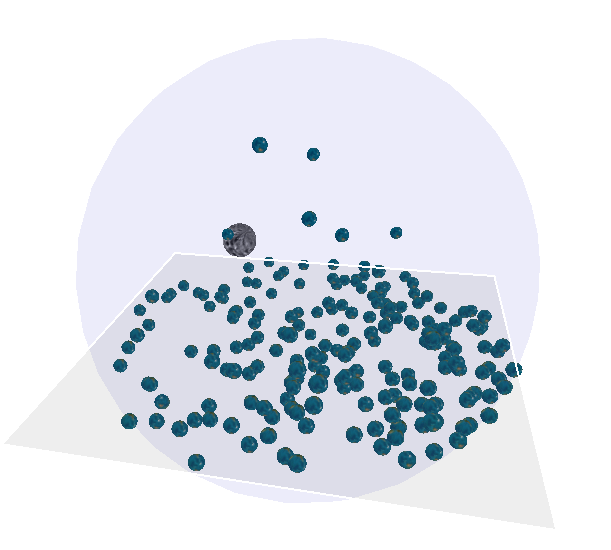

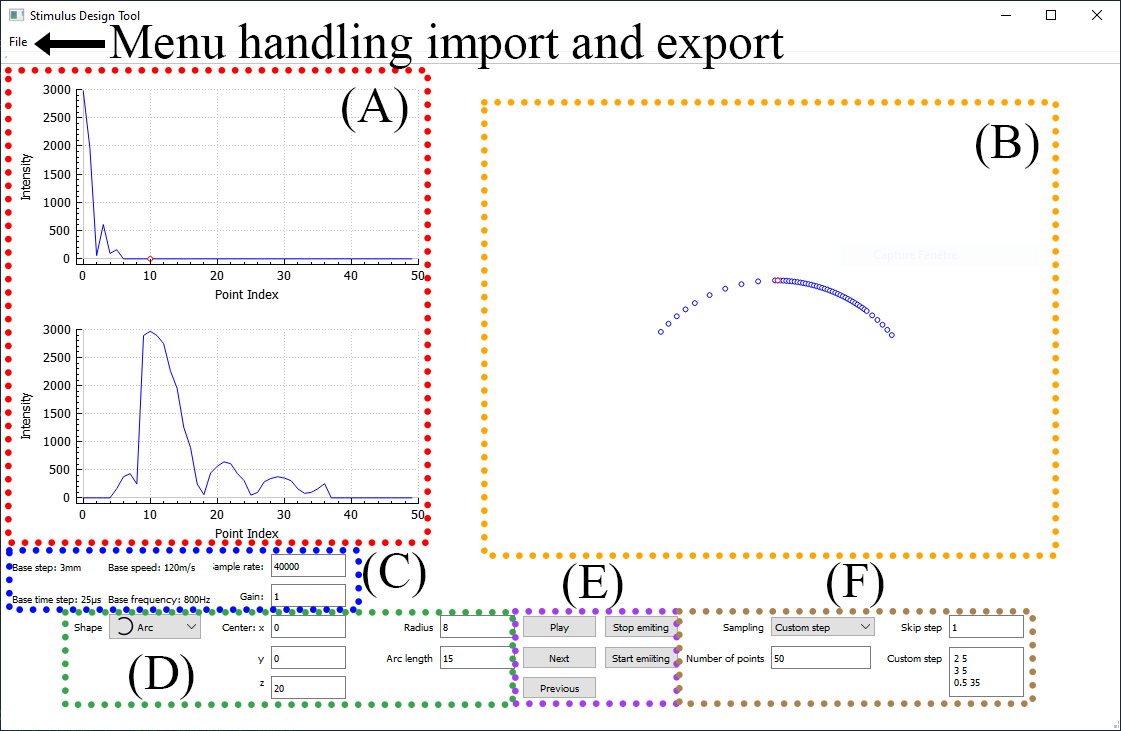

DOLPHIN is a framework with a design tool for creating ultrasound mid-air tactile renderings for perceptual studies. Users can create new classes to represent the geometries of shapes and the sampling strategies used to display them. Parameters of the shape and sampling strategy can be modified in the tool with the help of pressure and position visualizations. DOLPHIN also includes an interface to PsychoPy to aid in studies. While the framework currently only supports the STRATOS, the software is written so that support for new devices can be added in the future. A “reader” module is also available that can be included in other software to play back the renderings designed in DOLPHIN.
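The split between a shape's geometry and the sampling strategy used to display it can be pictured with a small sketch. The names below (`Circle`, `uniform_sampling`) are hypothetical and are not taken from the DOLPHIN code base, which defines its own classes. The sketch only assumes that a geometry maps a normalized parameter to a position and that a sampling strategy decides which parameter values to visit.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Circle:
    """Hypothetical geometry class: a circle centred on the origin."""
    radius_m: float

    def point_at(self, u: float) -> Point:
        """Map a normalized parameter u in [0, 1) to a point on the circle."""
        angle = 2.0 * math.pi * u
        return (self.radius_m * math.cos(angle), self.radius_m * math.sin(angle))


def uniform_sampling(shape: Circle, n_points: int) -> List[Point]:
    """Hypothetical sampling strategy: visit n_points equally spaced parameters."""
    return [shape.point_at(i / n_points) for i in range(n_points)]


# A 2 cm circle rendered as 8 focal-point positions (values chosen arbitrarily).
focal_points = uniform_sampling(Circle(radius_m=0.02), n_points=8)
```

Swapping in a different sampling function, for example one that visits fewer points, changes how the shape is rendered without touching the geometry class.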

For more information, please consult the SAP’21 paper or the GitLab repository.

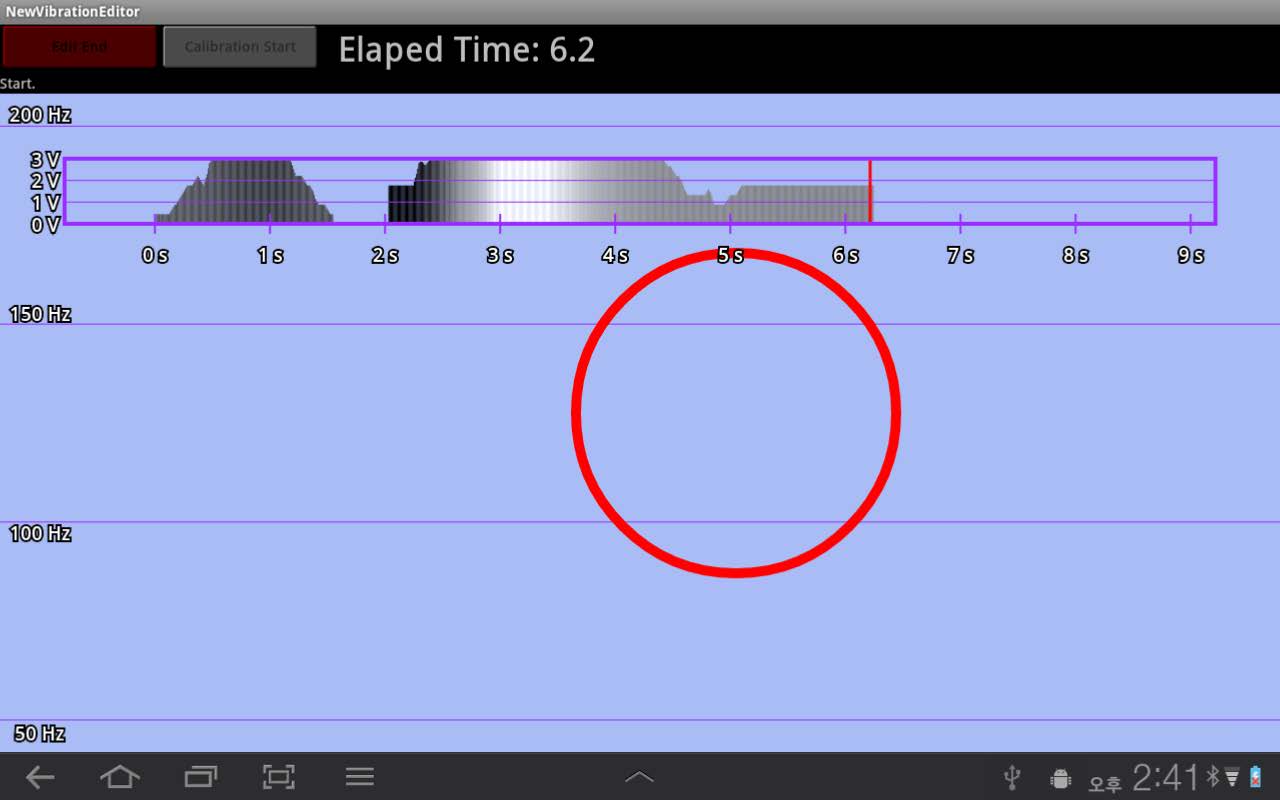

DrawOSC and Pattern Player

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2015 |

| Platformⓘ The OS or software framework needed to run the tool. | iPad |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | ICMC |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Music |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Vibrotactile |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Bespoke |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Ilinx Garment |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | Arm, Leg, Torso |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Target-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Demonstration |

| Storageⓘ How data is stored for import/export or internally to the software. | Unknown |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | Open Sound Control |

Additional Information

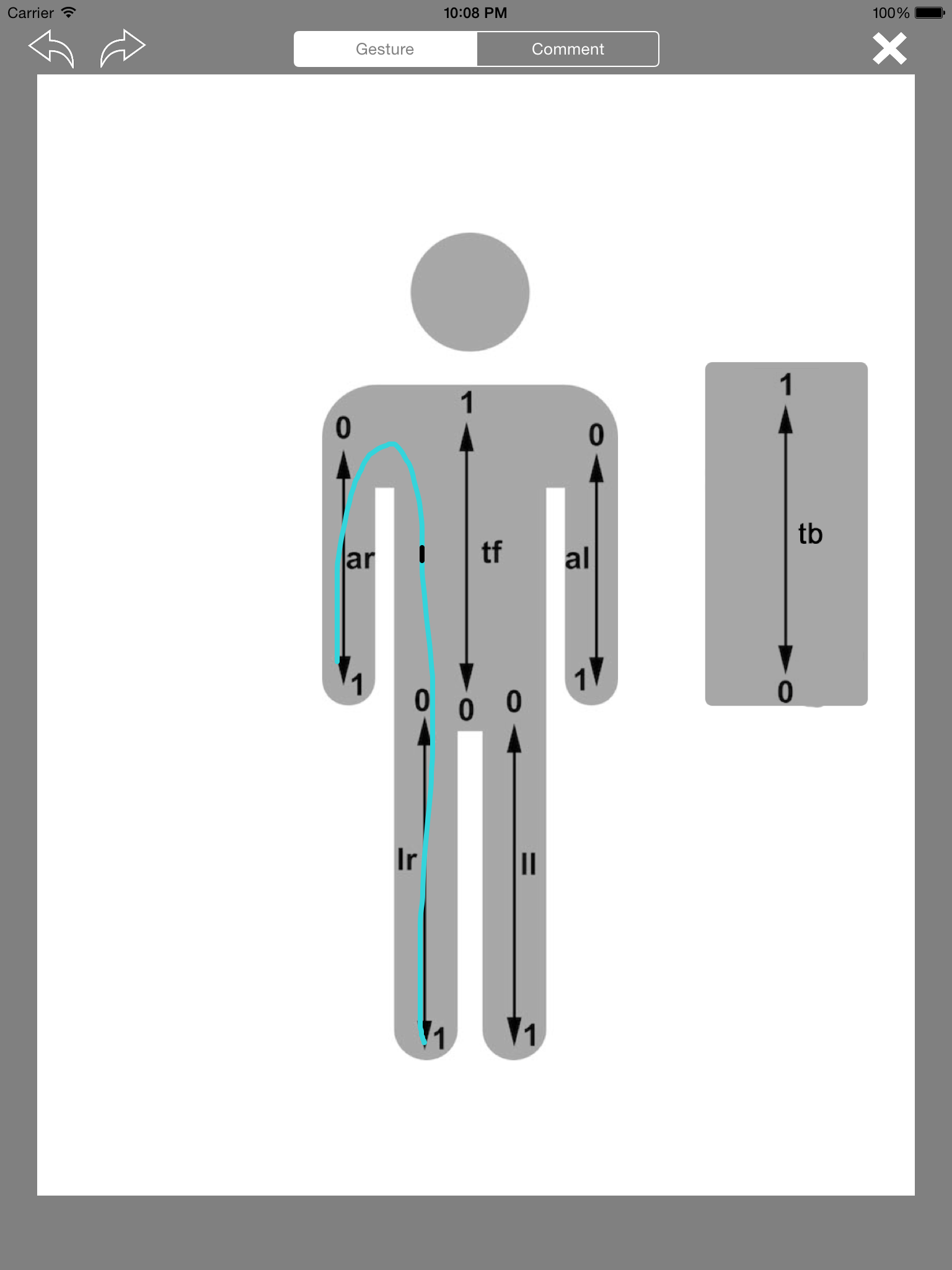

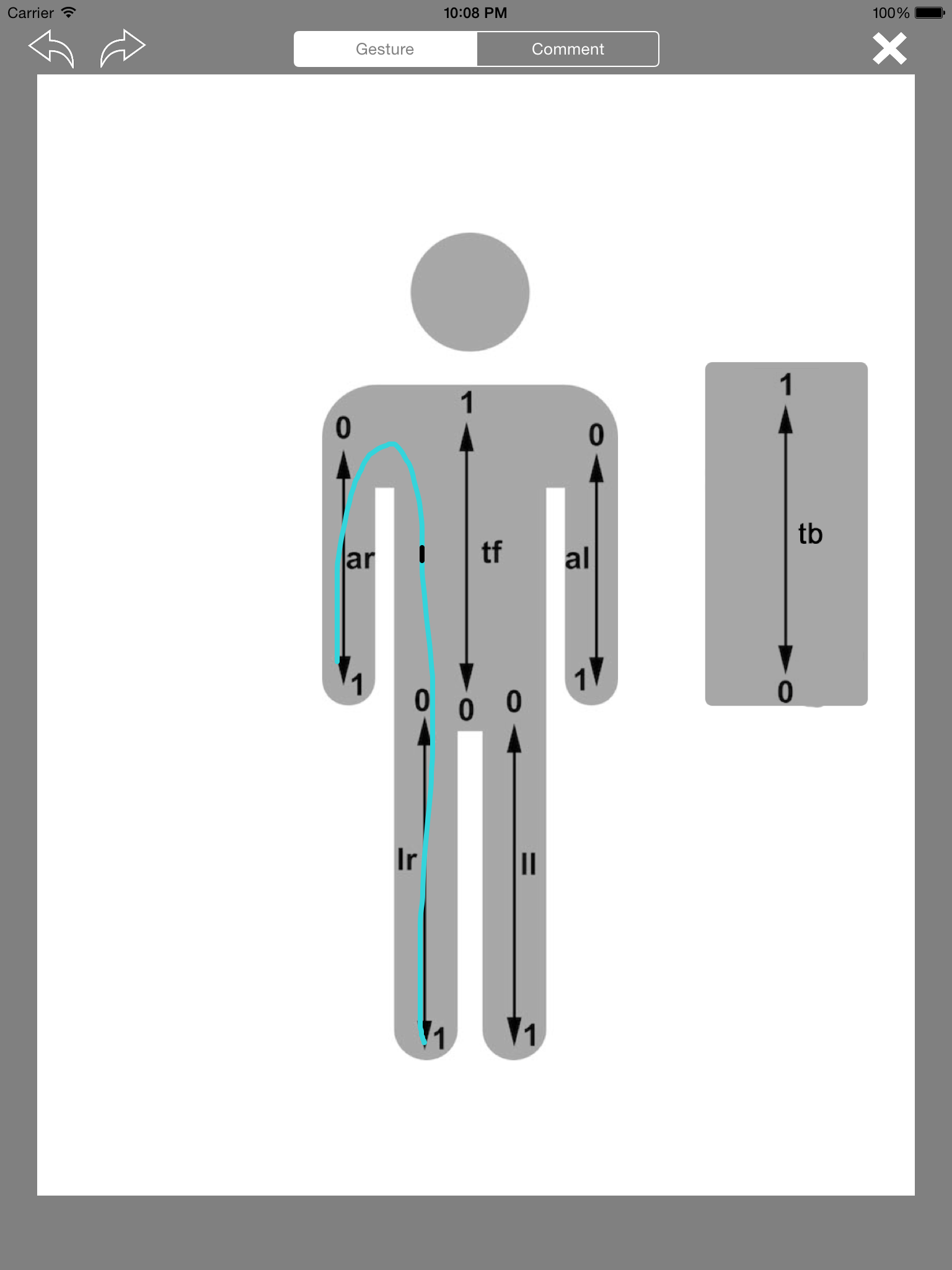

The DrawOSC and Pattern Player tools were used to compose tactile effects with the eccentric rotating mass (ERM) motors present on the arms, legs, and torso of the Ilinx garment. DrawOSC provides a visual representation of the body and allows the user to draw vibration trajectories that are played on the garment and to adjust a global vibration intensity parameter. The Pattern Player tool additionally allows for intensity to be controlled independently over these trajectories.
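One way to picture how a drawn trajectory could become motor commands is a distance-based falloff: each motor vibrates more strongly the closer the current trajectory point is to it. This mapping, the actuator layout, and every name in the sketch below are assumptions made for illustration and are not the method described in the ICMC paper.

```python
import math

# Hypothetical actuator positions on a normalized 2D body map.
ACTUATORS = {
    "left_arm": (0.0, 1.0),
    "torso": (0.5, 0.5),
    "right_arm": (1.0, 1.0),
}


def intensities_at(point, global_intensity, radius=0.5):
    """Return a per-actuator intensity for one trajectory point.

    Intensity falls off linearly with distance and reaches zero at `radius`.
    """
    result = {}
    for name, (ax, ay) in ACTUATORS.items():
        distance = math.hypot(point[0] - ax, point[1] - ay)
        result[name] = global_intensity * max(0.0, 1.0 - distance / radius)
    return result


def play_trajectory(points, global_intensity):
    """Turn a drawn trajectory into a sequence of per-actuator intensity frames."""
    return [intensities_at(p, global_intensity) for p in points]


# A short stroke from the torso toward the right arm.
frames = play_trajectory([(0.5, 0.5), (0.75, 0.75), (1.0, 1.0)], global_intensity=0.8)
```

Controlling intensity independently along the trajectory, as the Pattern Player does, would correspond to passing a different intensity value for each point instead of one global value.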

For more information about DrawOSC and the Pattern Player, consult the ICMC 2015 paper titled “Composition Techniques for the Ilinx Vibrotactile Garment”.

Feel Messenger

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2015 |

| Platformⓘ The OS or software framework needed to run the tool. | Android |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | ACM CHI |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Communication |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Vibrotactile |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Android |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Device-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Additive, Library |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | None |

| Storageⓘ How data is stored for import/export or internally to the software. | Unknown |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | None |

Additional Information

Feel Messenger is a haptically augmented text messaging application structured around families of “feel effects”. Feel widgets or “feelgits” are types of effects that can be varied by a set of parameters, or “feelbits”. These parameters are made available to users of Feel Messenger through a set of menus. New effects can also be created by playing pre-existing effects one after the other.
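The relationship between feelgits, feelbits, and sequenced effects can be sketched as a small data model. The class, the parameter names (`pulses`, `pulse_ms`, `gap_ms`, `intensity`), and the rendering into on/off segments are hypothetical; the paper defines its own effect families and parameters.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Segment = Tuple[int, float]  # (duration in ms, vibration amplitude from 0 to 1)


@dataclass
class Feelgit:
    """Hypothetical effect type whose behaviour is set by its feelbits."""
    name: str
    feelbits: Dict[str, float]

    def render(self) -> List[Segment]:
        """Expand the parameters into pulses, each followed by a silent gap."""
        segments: List[Segment] = []
        for _ in range(int(self.feelbits["pulses"])):
            segments.append((int(self.feelbits["pulse_ms"]), self.feelbits["intensity"]))
            segments.append((int(self.feelbits["gap_ms"]), 0.0))
        return segments


def play_in_sequence(*effects: Feelgit) -> List[Segment]:
    """Create a new effect by playing existing effects one after the other."""
    segments: List[Segment] = []
    for effect in effects:
        segments.extend(effect.render())
    return segments


heartbeat = Feelgit("heartbeat", {"pulses": 2, "pulse_ms": 80, "gap_ms": 120, "intensity": 0.9})
tap = Feelgit("tap", {"pulses": 1, "pulse_ms": 30, "gap_ms": 50, "intensity": 0.5})
combined = play_in_sequence(heartbeat, tap)
```

Changing a single feelbit, such as `intensity`, produces a variation within the same family without defining a new effect type.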

For more information, consult the CHI’15 WIP paper.

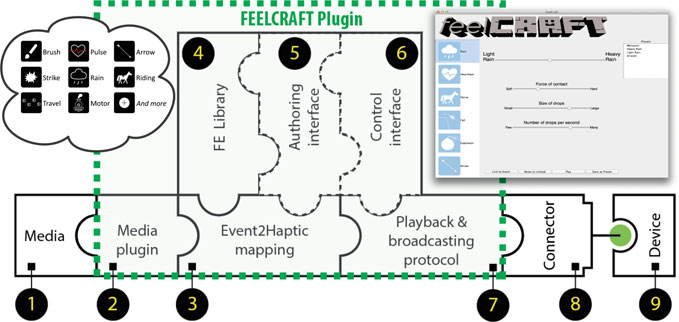

FeelCraft

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2014 |

| Platformⓘ The OS or software framework needed to run the tool. | Java, Python |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | ACM UIST, AsiaHaptics |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Collaboration, Haptic Augmentation |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Vibrotactile |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Vybe Haptic Gaming Pad |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Device-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Library, Description |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | None |

| Storageⓘ How data is stored for import/export or internally to the software. | Custom JSON |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | API |

Additional Information

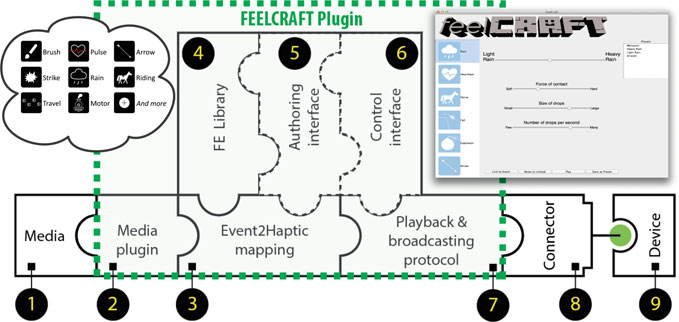

FeelCraft is a technical architecture in which libraries of haptic effects (feel effects) are triggered by events in pre-existing media applications through the FeelCraft plugin. The implemented example connects to the event system of a Minecraft server. Families of feel effects are expressed in software and controlled through sets of parameters that are made available to users through a menu interface. In this example, as in-game events occur (e.g., it begins to rain), an associated feel effect with the selected parameters is displayed to the person playing the game.
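The event-to-effect binding described here can be sketched as a lookup in a JSON library. The schema, the event names, and the effect names below are invented for illustration; FeelCraft's custom JSON format is not reproduced in this entry and may look quite different.

```python
import json

# Hypothetical library: each game event is bound to an effect and its parameters.
LIBRARY_JSON = """
{
  "rain":      {"effect": "raindrops", "parameters": {"intensity": 0.4, "rate_hz": 6}},
  "explosion": {"effect": "rumble",    "parameters": {"intensity": 1.0, "duration_ms": 800}}
}
"""


def load_bindings(text):
    """Parse the event-to-effect bindings from JSON text."""
    return json.loads(text)


def on_event(bindings, event_name, overrides=None):
    """Return the effect to play for an event, or None if the event is unbound.

    `overrides` stands in for parameter values a user selected in a menu.
    """
    binding = bindings.get(event_name)
    if binding is None:
        return None
    parameters = {**binding["parameters"], **(overrides or {})}
    return {"effect": binding["effect"], "parameters": parameters}


bindings = load_bindings(LIBRARY_JSON)
rain_effect = on_event(bindings, "rain", overrides={"intensity": 0.7})
```

Keeping the bindings in data rather than code is what would let the same plugin serve different media applications with different effect libraries.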

For more information on FeelCraft, consult the UIST’14 demo and the relevant chapter of Haptic Interaction.

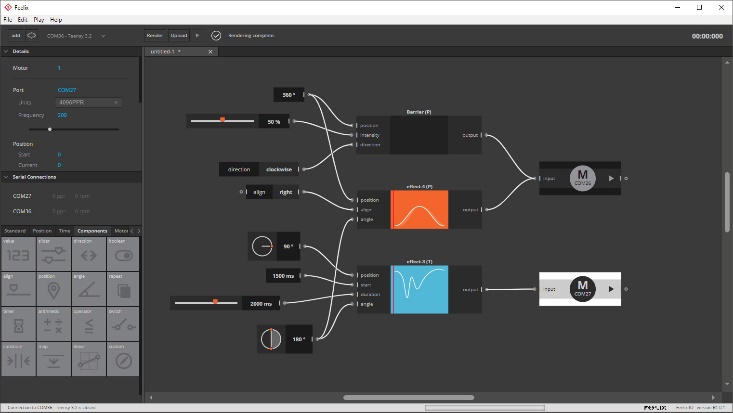

Feelix

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2020 |

| Platformⓘ The OS or software framework needed to run the tool. | Windows, macOS |

| Availabilityⓘ If the tool can be obtained by the public. | Available |

| Licenseⓘ The type of license applied to the tool. | Open Source (MIT) |

| Venueⓘ The venue(s) for publications. | ACM ICMI, ACM NordiCHI, ACM TEI |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Prototyping |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Class |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Brushless Motors |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time, Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Device-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural, Additive, Library |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Track, Demonstration, Dataflow |

| Storageⓘ How data is stored for import/export or internally to the software. | Feelix Effect File |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | API |

Additional Information

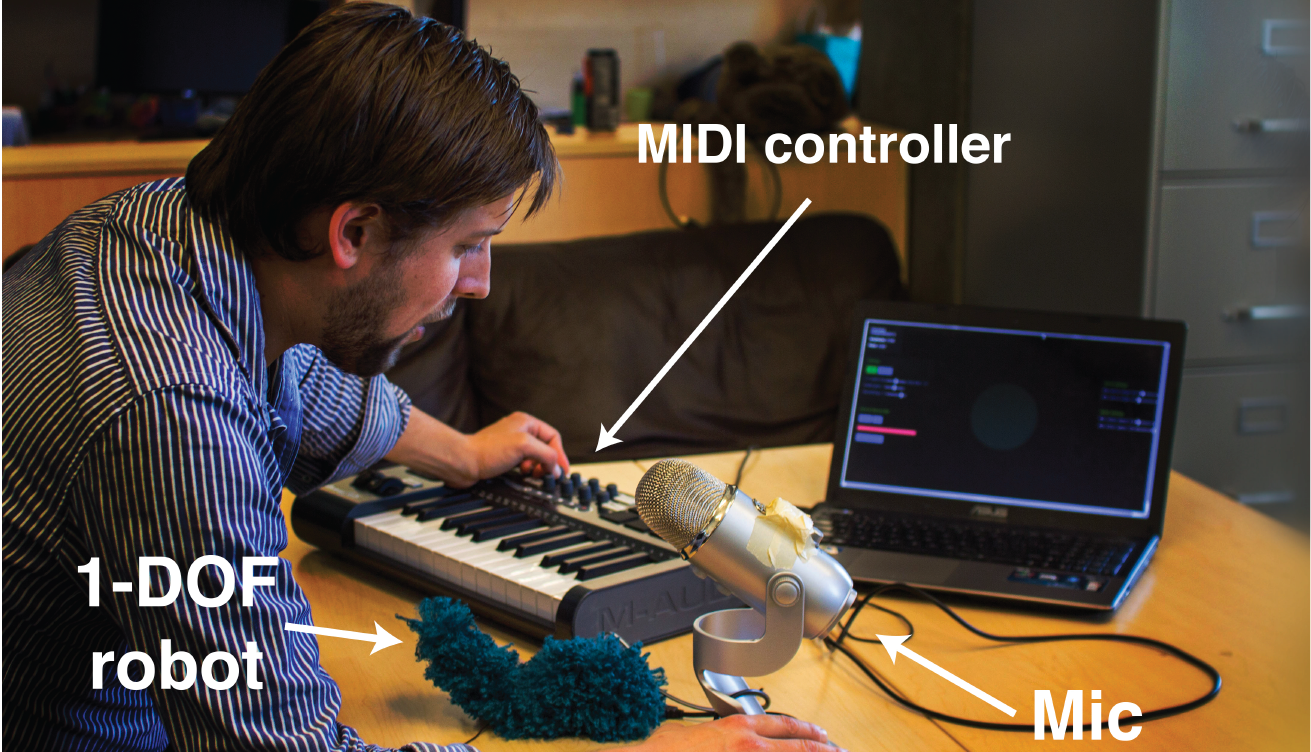

Feelix supports the creation of effects on a 1 DoF motor through two main interfaces. The first allows for force-feedback effects to be sketched out over either motor position or time. For time-based effects, user-created and pre-existing effects can be sequenced in a timeline. The second interface provides a dataflow programming environment to directly control the connected motor. Parameters of these effects can be connected to different inputs to support real-time adjustment of the haptic interaction.
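An effect sketched over motor position can be thought of as a curve that is looked up each time the motor angle changes. The sketch below assumes the curve is stored as control points and interpolated linearly; the function name, the units, and the representation are illustrative assumptions and do not describe the Feelix effect file format.

```python
from bisect import bisect_right


def torque_at(curve, angle_deg):
    """Look up torque on a sketched curve using linear interpolation.

    `curve` is a list of (angle_deg, torque) control points sorted by angle.
    Angles outside the sketched range are clamped to the nearest end point.
    """
    angles = [angle for angle, _ in curve]
    if angle_deg <= angles[0]:
        return curve[0][1]
    if angle_deg >= angles[-1]:
        return curve[-1][1]
    i = bisect_right(angles, angle_deg)  # first control point beyond angle_deg
    (a0, t0), (a1, t1) = curve[i - 1], curve[i]
    return t0 + (t1 - t0) * (angle_deg - a0) / (a1 - a0)


# A single bump: resistance rises toward 90 degrees, then falls away.
bump = [(0.0, 0.0), (90.0, 1.0), (180.0, 0.0)]
```

For example, `torque_at(bump, 45.0)` evaluates to `0.5`, halfway up the rising edge of the bump.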

For more information, consult the 2020 ICMI paper, the NordiCHI’22 tutorial, the TEI’23 studio, and the Feelix documentation.

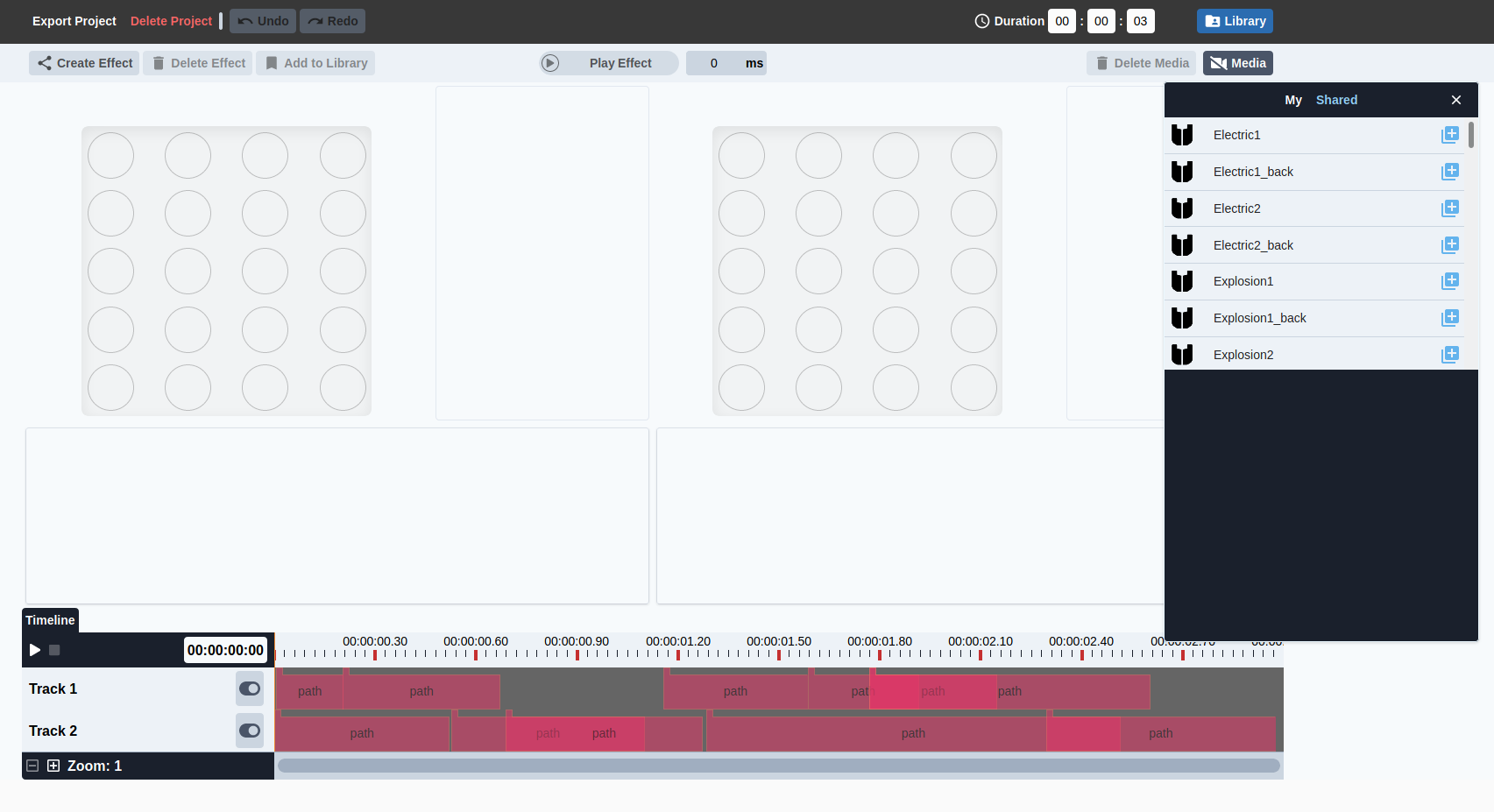

Feellustrator

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2023 |

| Platformⓘ The OS or software framework needed to run the tool. | Unknown |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | ACM CHI |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Hardware Control, Collaboration |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Vibrotactile |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Ultraleap STRATOS Explore |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | Hand |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time, Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Target-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | Audio, Visual |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural, Library, Additive |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Track, Keyframe, Demonstration |

| Storageⓘ How data is stored for import/export or internally to the software. | Custom JSON, CSV |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | None |

Additional Information

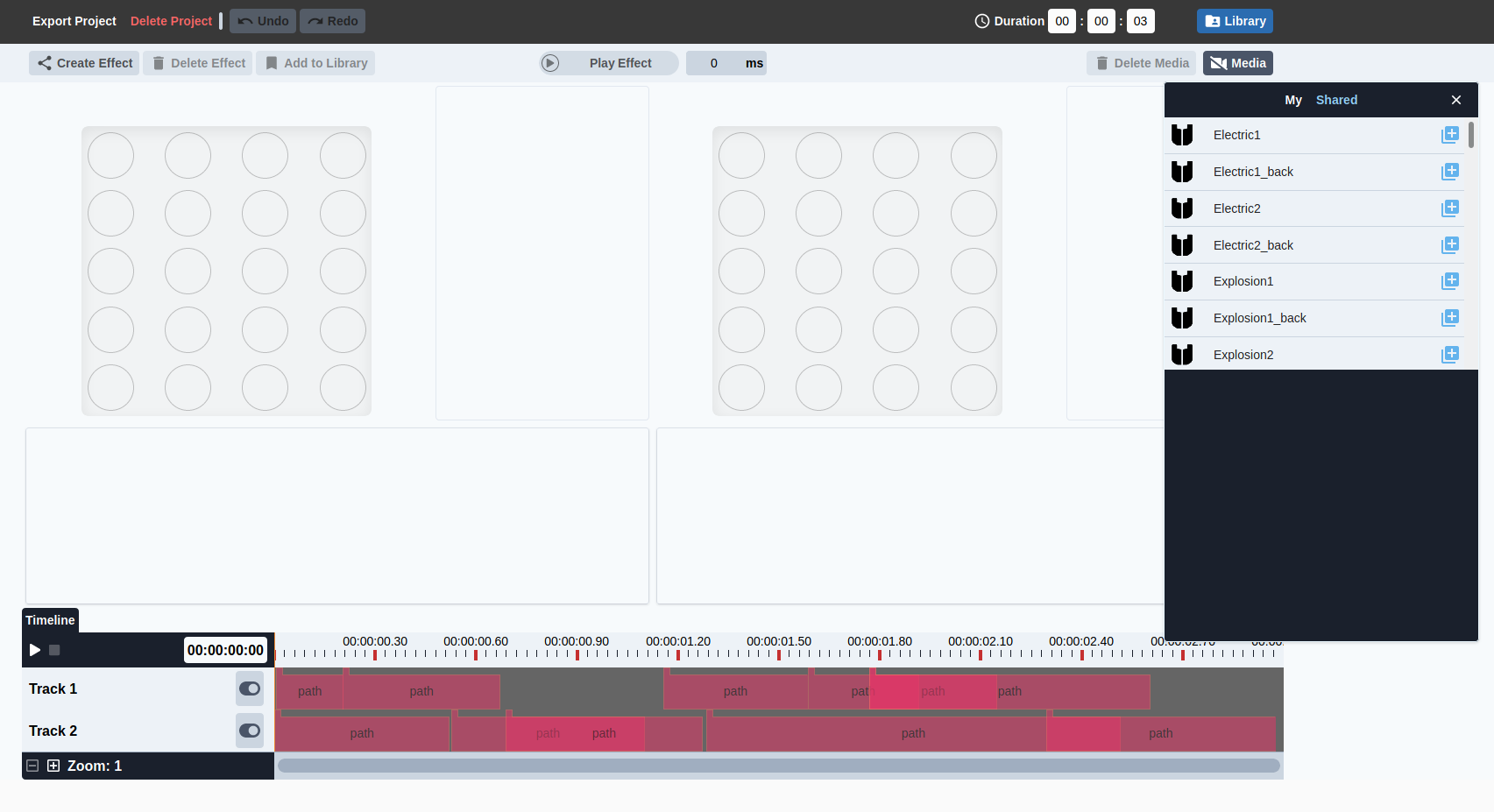

Feellustrator is a design tool for ultrasound mid-air haptics that allows users to sketch paths for sensations to follow, control how the focal point moves along each path, and combine these paths over time to create more complex experiences. Audio and visual reference materials can be loaded into the tool, but cannot be modified once added. Hand tracking support is present so that effects can be played relative to the user’s hand rather than floating freely in space.

For more information, please consult the CHI’23 paper.

ForceHost

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2022 |

| Platformⓘ The OS or software framework needed to run the tool. | Web, Faust |

| Availabilityⓘ If the tool can be obtained by the public. | Available |

| Licenseⓘ The type of license applied to the tool. | Open Source (GPL 3) |

| Venueⓘ The venue(s) for publications. | NIME |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Music |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Bespoke |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | TorqueTuner |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Location-aware |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | Audio |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Procedural |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Demonstration |

| Storageⓘ How data is stored for import/export or internally to the software. | None |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | Open Sound Control |

Additional Information

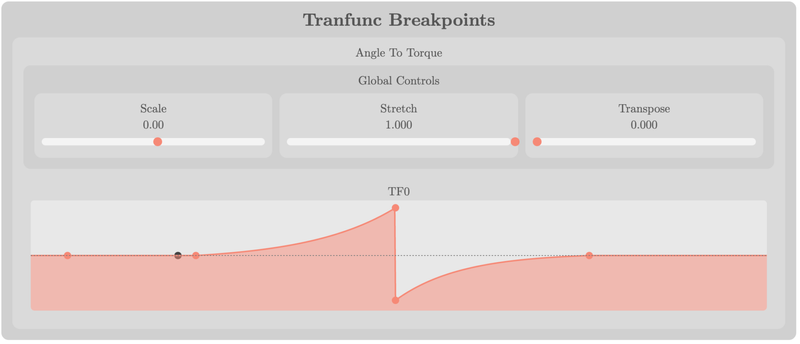

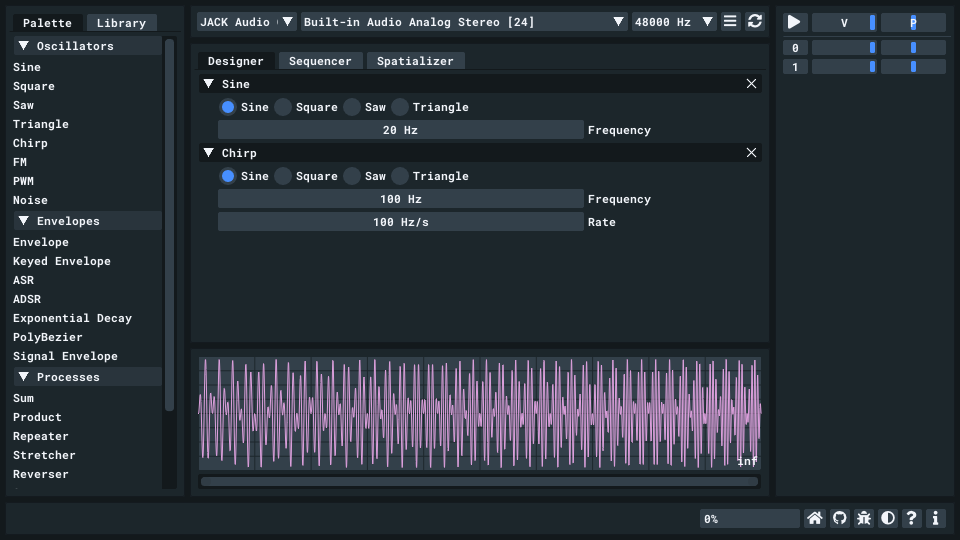

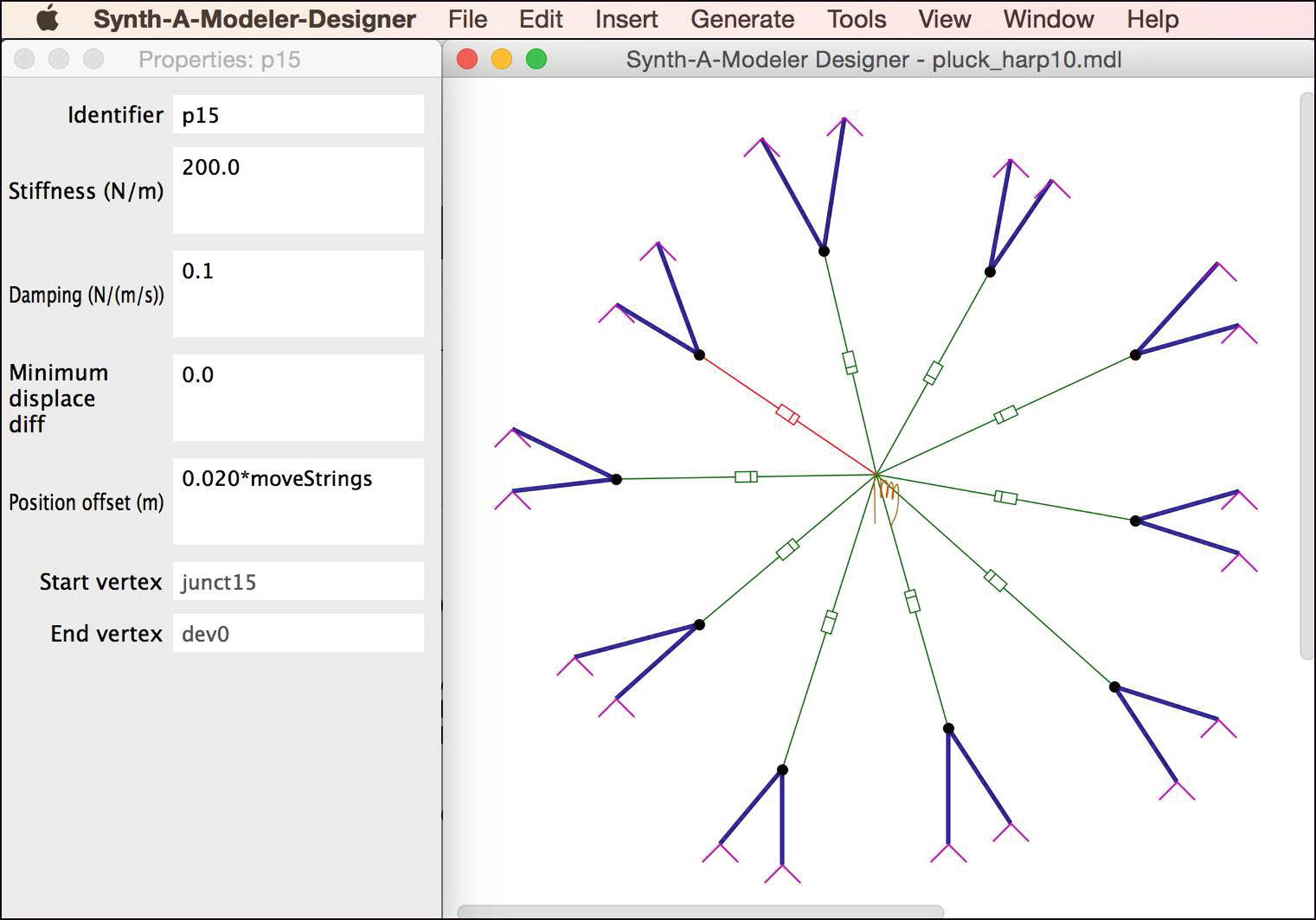

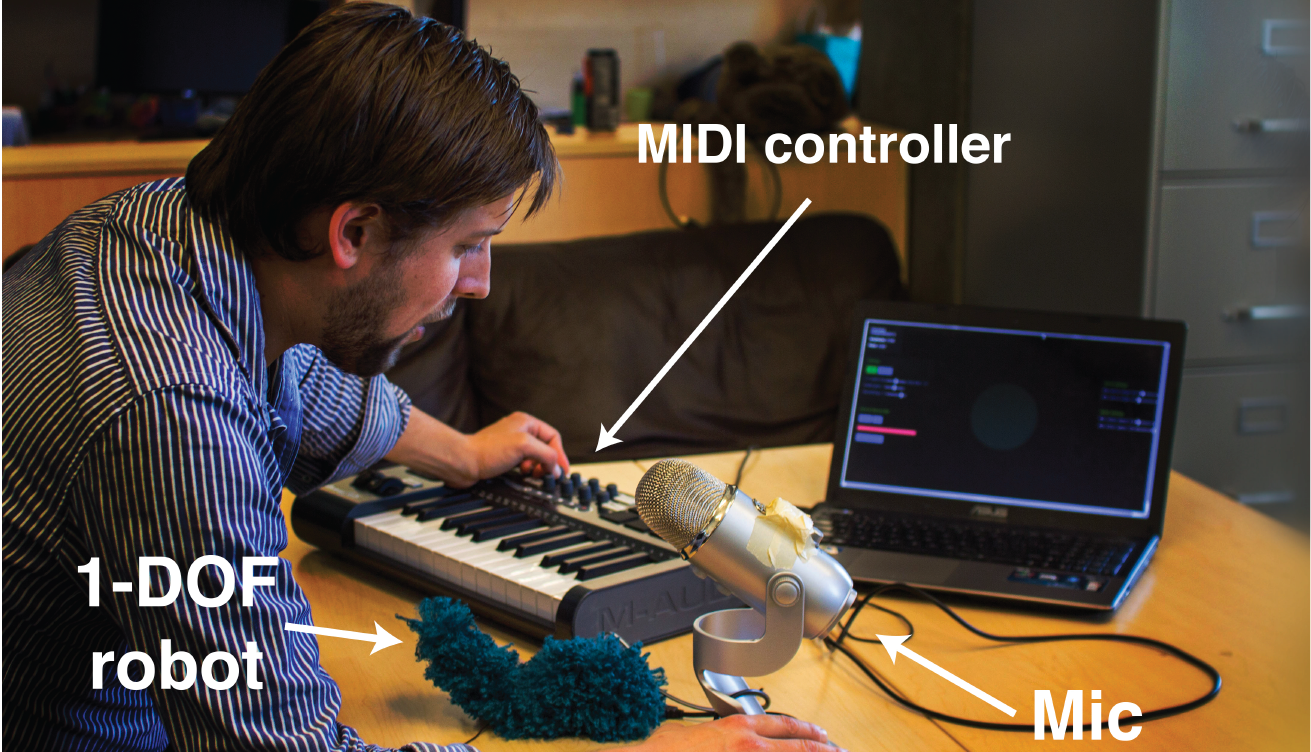

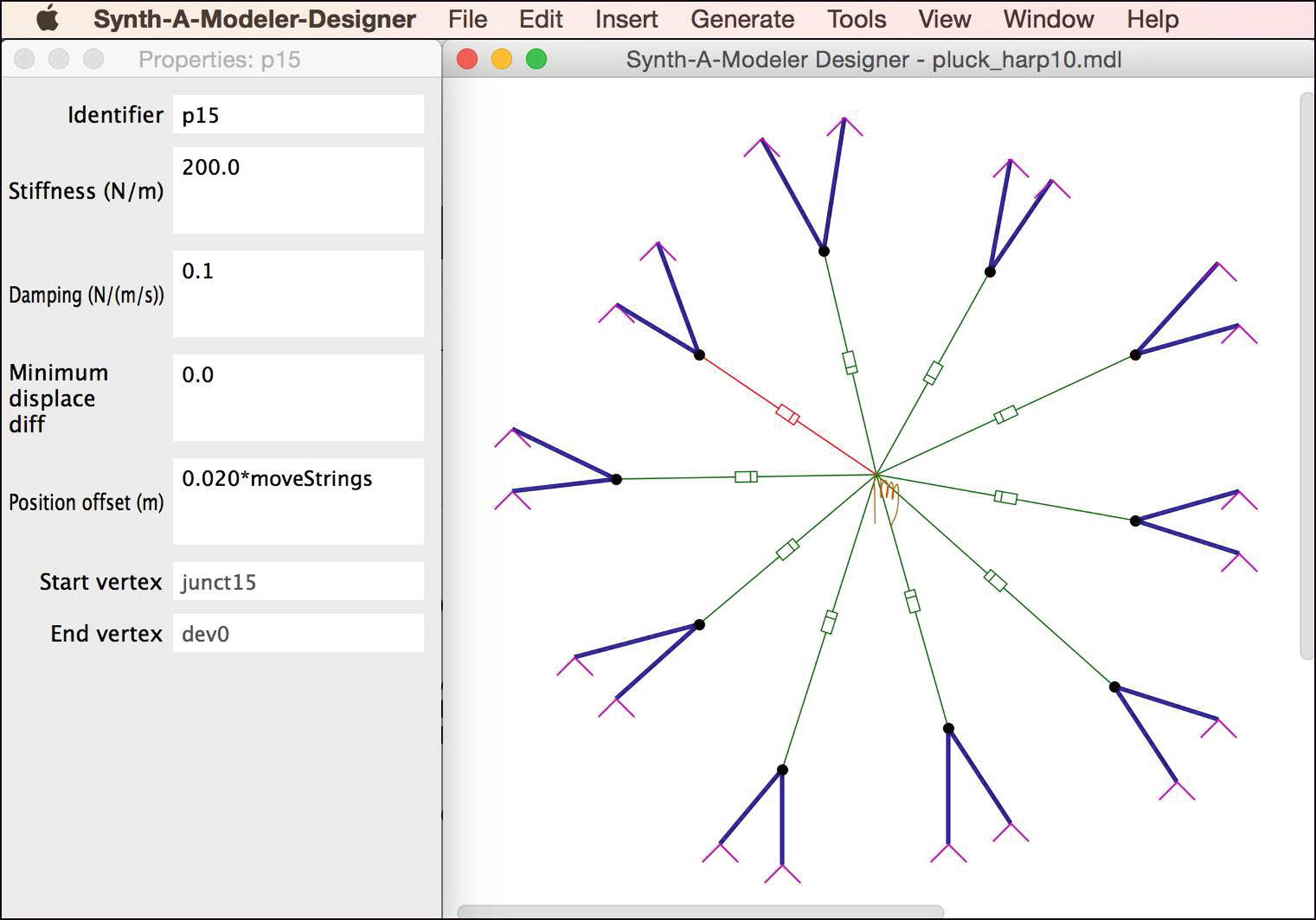

ForceHost is a toolchain for embedded physical modelling of audio-haptic effects for digital musical instruments. It primarily supports the TorqueTuner device, but can optionally be used with ESP32 boards supporting audio I/O, a 1-DoF servo, and network connectivity. Network connectivity is necessary so that it can be connected to other audio synthesis programs and so users can access the editor GUI through a web application. This application allows users to create haptic effects at runtime by sketching and manipulating curves representing transfer functions. Based on Faust, ForceHost is also supported by a fork of Synth-a-Modeler and can be controlled with a lower-level API called haptic1D.
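A transfer function in this setting maps the knob's measured angle to an output torque. As one common example of such a curve, the sketch below defines a sinusoidal detent profile and samples it into a lookup table. The function, its parameters, and the table resolution are assumptions for illustration; ForceHost itself generates its effects from Faust code, which is not shown here.

```python
import math


def detent_torque(angle_rad, detents_per_rev=12, strength=1.0):
    """Restoring torque that pulls a rotary knob toward the nearest detent.

    Detent centres sit at multiples of 2*pi / detents_per_rev. The sign is
    chosen so that the torque opposes displacement away from a centre.
    """
    return -strength * math.sin(detents_per_rev * angle_rad)


def sample_curve(transfer_function, n_samples=360):
    """Sample a transfer function over one revolution into a lookup table."""
    return [transfer_function(2.0 * math.pi * i / n_samples) for i in range(n_samples)]


table = sample_curve(detent_torque)
```

Editing the curve in a GUI would amount to changing the values in such a table, or the parameters that generate it, while the device keeps running.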

For more information, please consult the NIME’22 paper or visit the GitLab repositories.

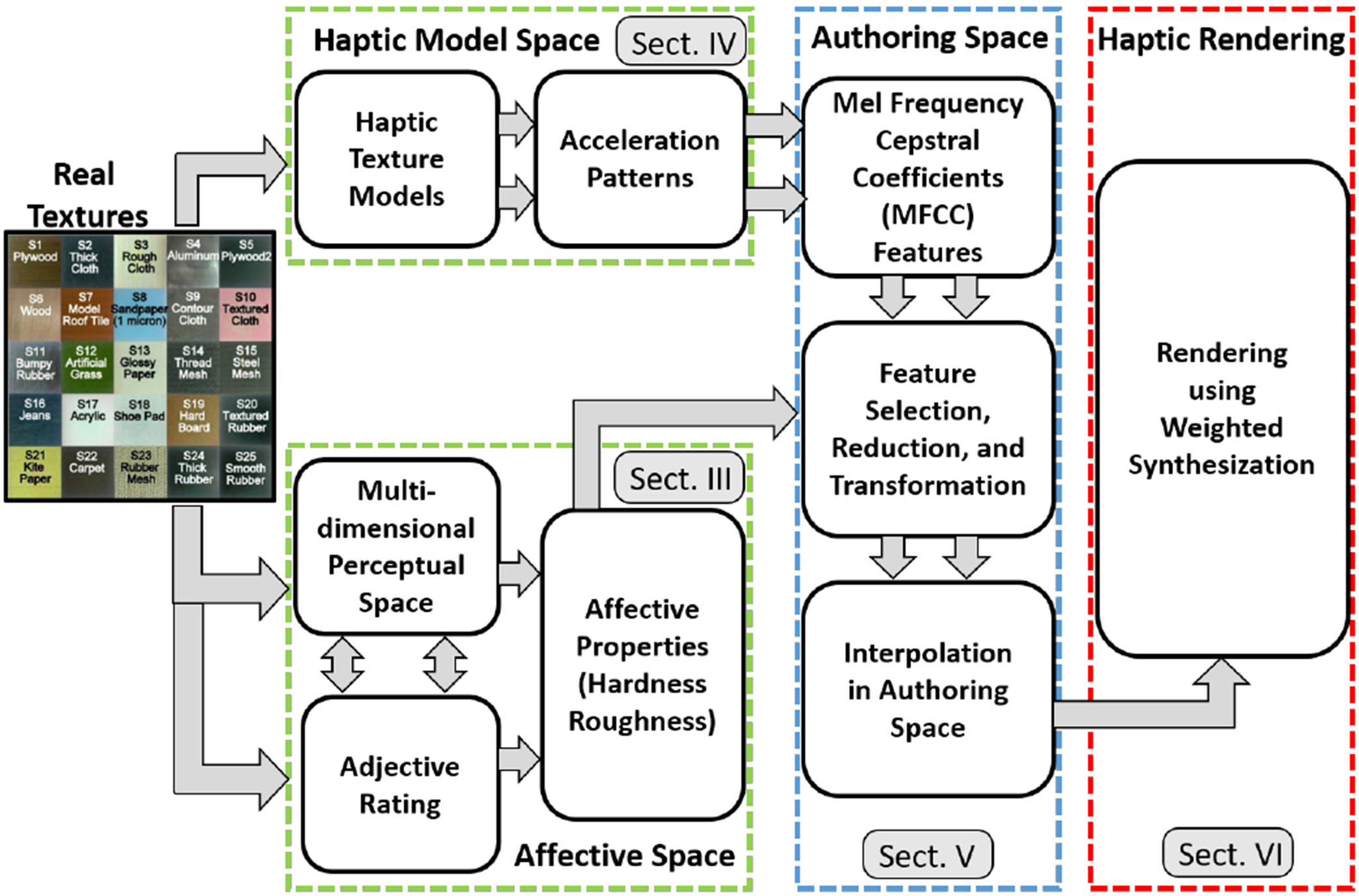

GAN-based Material Tuning

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2020 |

| Platformⓘ The OS or software framework needed to run the tool. | iPad |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | IEEE ACCESS |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Prototyping |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Vibrotactile |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Class |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Voice Coil |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | Hand |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Location-aware |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Procedural |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | N/A |

| Storageⓘ How data is stored for import/export or internally to the software. | None |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | None |

Additional Information

The tuning method developed here uses a generative adversarial network (GAN) to produce vibrations corresponding to materials with properties in between those provided in the dataset used to train the GAN. The application described in the paper was intended to test this method of haptic tuning with users.

For more information about this method, consult the 2020 IEEE Access paper.

GENESIS

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 1995 |

| Platformⓘ The OS or software framework needed to run the tool. | Windows, macOS, Linux |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | ICMC, Symposium on Computer Music Multidisciplinary Research |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Music, Simulation |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Bespoke |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Transducteur gestuel rétroactif |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Device-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | Audio |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Procedural |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Dataflow |

| Storageⓘ How data is stored for import/export or internally to the software. | Unknown |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | None |

Additional Information

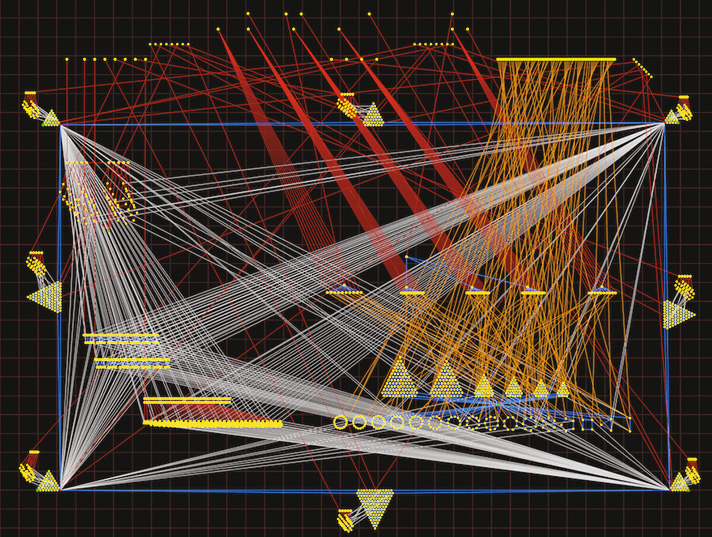

GENESIS is a physical modelling system that uses the CORDIS-ANIMA framework to create virtual musical instruments. By creating networks of different modules in a one-dimensional space and adjusting their parameters, simulations can be constructed and interacted with via a connected haptic device. Sounds produced by the model are played back. While earlier versions of GENESIS required non-realtime simulation of instruments, multiple simulation engines are now supported, including those that run in real time (e.g., GENESIS-RT).
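To give a flavour of one-dimensional mass-interaction modelling, the sketch below simulates a single mass tied to a fixed point by a spring and damper, using an explicit finite-difference update with a unit time step. It is a generic illustration written for this entry, not GENESIS or CORDIS-ANIMA code, and the parameter values are arbitrary.

```python
def simulate(mass, stiffness, damping, x0, steps):
    """Simulate a 1D mass attached to a fixed point by a spring-damper.

    Uses the explicit update x[n+1] = 2*x[n] - x[n-1] + F[n] / mass, where
    F[n] combines the spring force and a damping force based on the
    displacement change over the previous step (time step normalized to 1).
    """
    previous, current = x0, x0  # identical samples give zero initial velocity
    trajectory = []
    for _ in range(steps):
        force = -stiffness * current - damping * (current - previous)
        following = 2.0 * current - previous + force / mass
        previous, current = current, following
        trajectory.append(current)
    return trajectory


# A lightly damped oscillator released from a displacement of 1.0.
trajectory = simulate(mass=1.0, stiffness=0.1, damping=0.01, x0=1.0, steps=2000)
```

A network of such masses and interactions, with one mass driven by the haptic device and another read out as audio, is the general shape of the instruments this kind of modelling produces.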

GENESIS is described in several publications, including the 1995 International Computer Music Conference (ICMC) paper, the 2002 ICMC paper, the 2009 ICMC paper, and the 2013 International Symposium on Computer Music Multidisciplinary Research paper.

H-Studio

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2013 |

| Platformⓘ The OS or software framework needed to run the tool. | Unknown |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | ACM UIST |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Haptic Augmentation |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback, Vibrotactile |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Novint Falcon |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Location-aware |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | Audio, Visual |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural, Additive |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Track, Keyframe, Demonstration |

| Storageⓘ How data is stored for import/export or internally to the software. | Unknown |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | IMU Sensor |

Additional Information

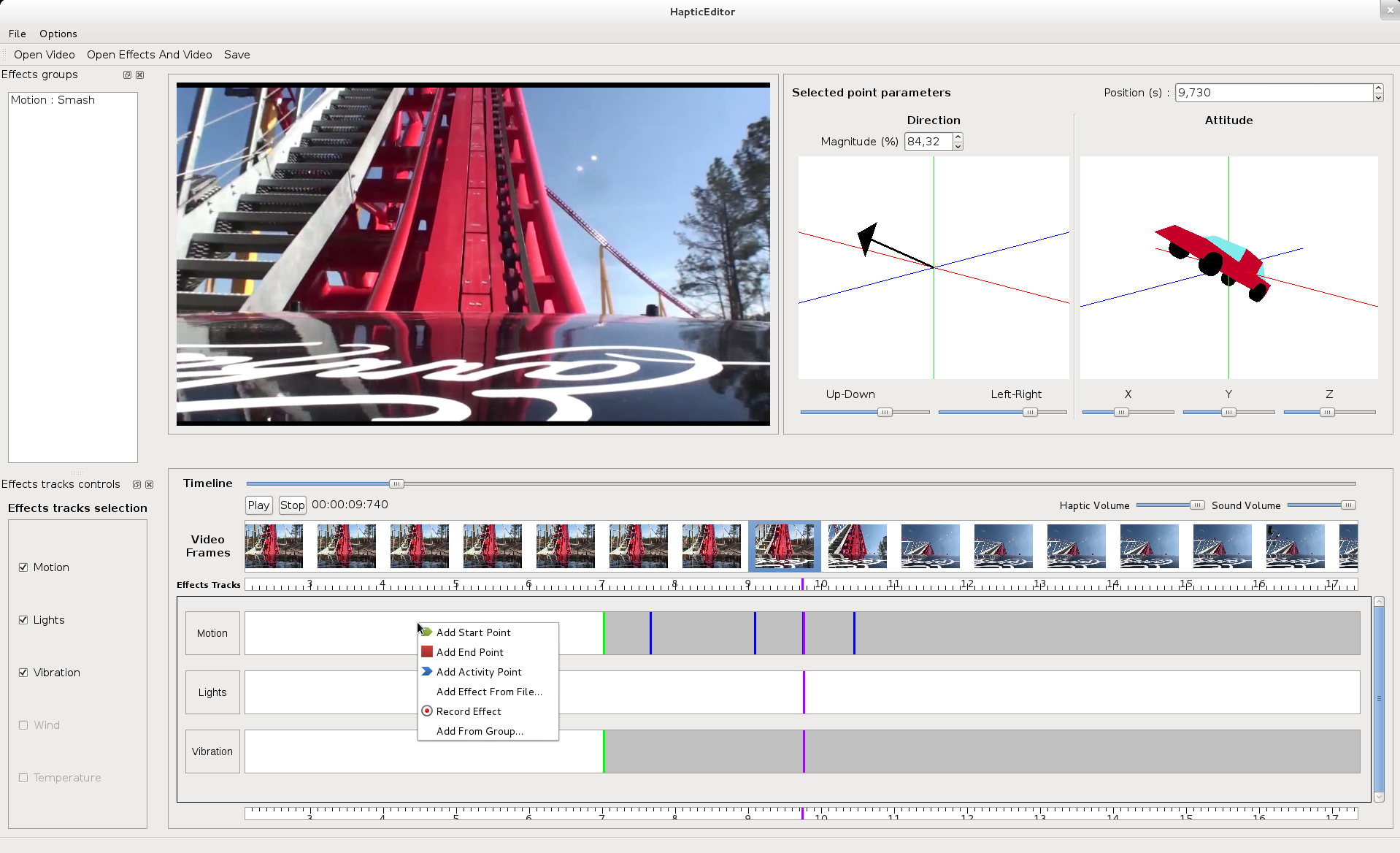

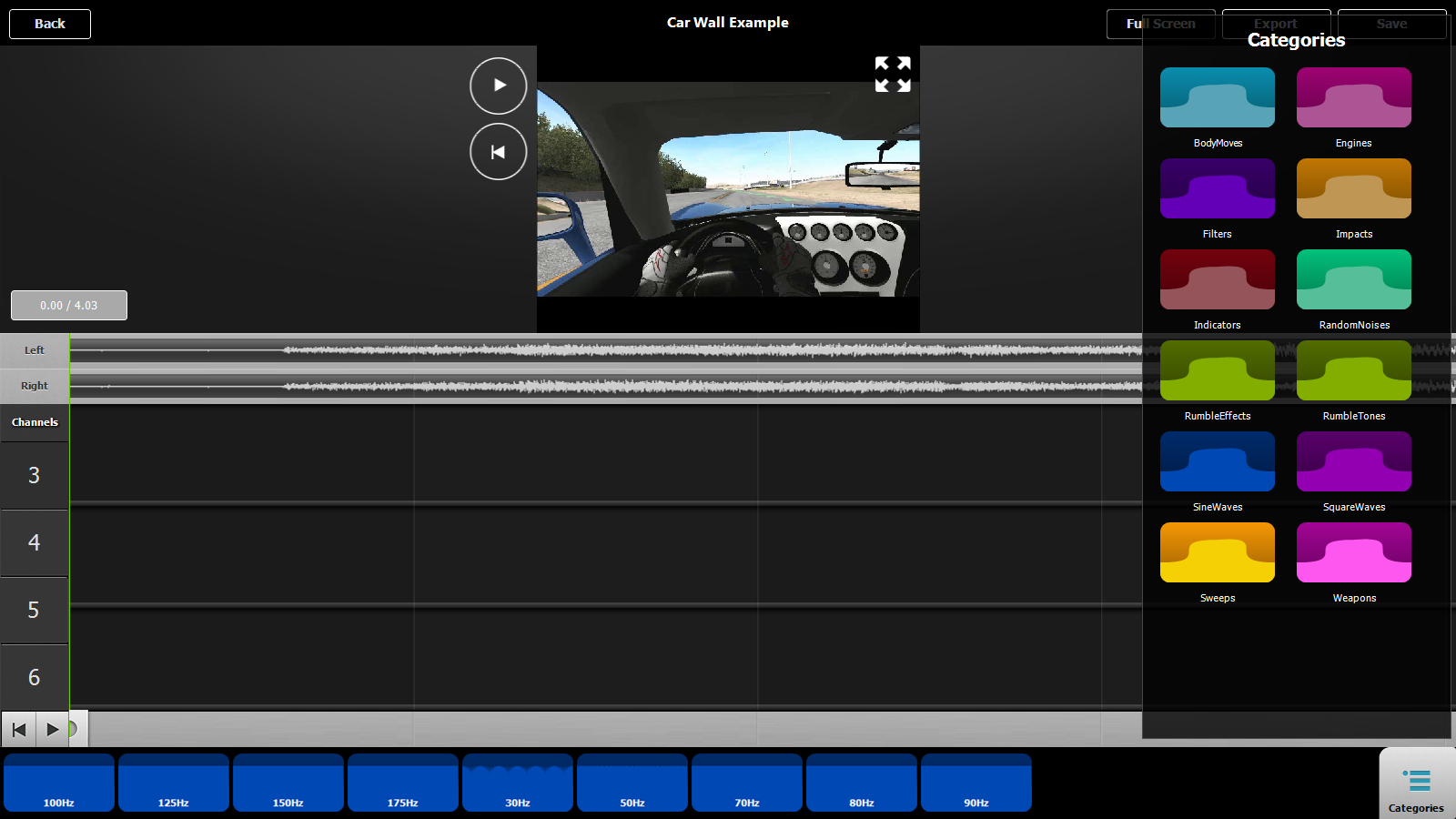

H-Studio is a tool meant to add haptic effects, primarily motion effects, to a pre-existing video file. Its primary interface provides a preview of the original audio-visual content, tracks of the different parameters that can be edited in H-Studio, and a visual preview of a selected motion effect. Data to drive a motion effect can be input from a force-feedback device directly or from another source (e.g., an IMU), and motion effects can be played back in the tool to aid in further refinement.

For more information, consult the UIST’13 poster.

H3D API

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2004 |

| Platformⓘ The OS or software framework needed to run the tool. | Windows, macOS, Linux |

| Availabilityⓘ If the tool can be obtained by the public. | Available |

| Licenseⓘ The type of license applied to the tool. | Open Source (GPL 2) |

| Venueⓘ The venue(s) for publications. | N/A |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Simulation |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Phantom, Novint Falcon, omega.x, delta.x, sigma.x |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Target-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | Visual |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | N/A |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | N/A |

| Storageⓘ How data is stored for import/export or internally to the software. | None |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | API |

Additional Information

H3D API is a framework that lets users design haptic scenes using X3D. Virtual objects, effects in space, and haptic devices can be specified using X3D and added into the scene-graph. Visual properties are rendered using OpenGL and haptic properties are rendered using the included HAPI engine. Users also have the option of adding new features or creating more complex scenes by directly programming in C++ or Python.

For more information on H3D API, please consult the H3D API website and its documentation page.

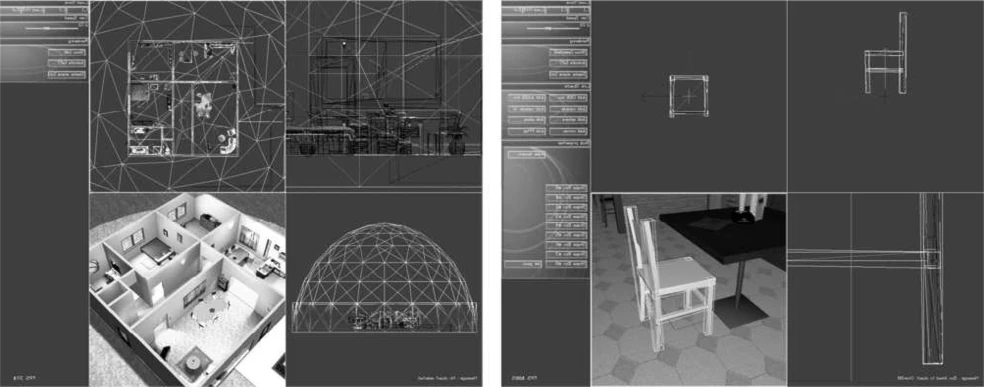

HAMLAT

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2008 |

| Platformⓘ The OS or software framework needed to run the tool. | Blender |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | EuroHaptics |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Haptic Augmentation |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Phantom |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Target-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | Visual |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | None |

| Storageⓘ How data is stored for import/export or internally to the software. | HAML |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | None |

Additional Information

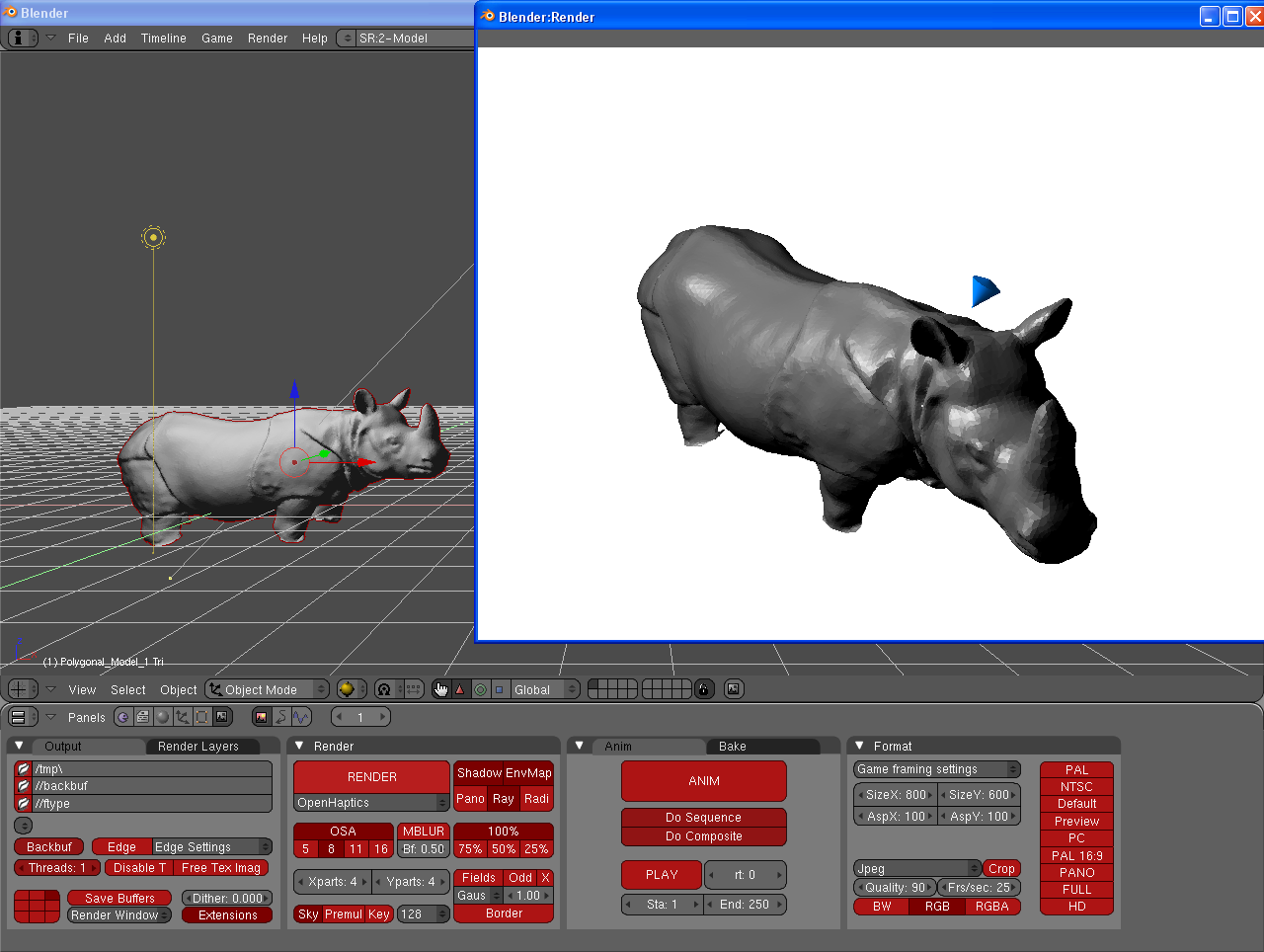

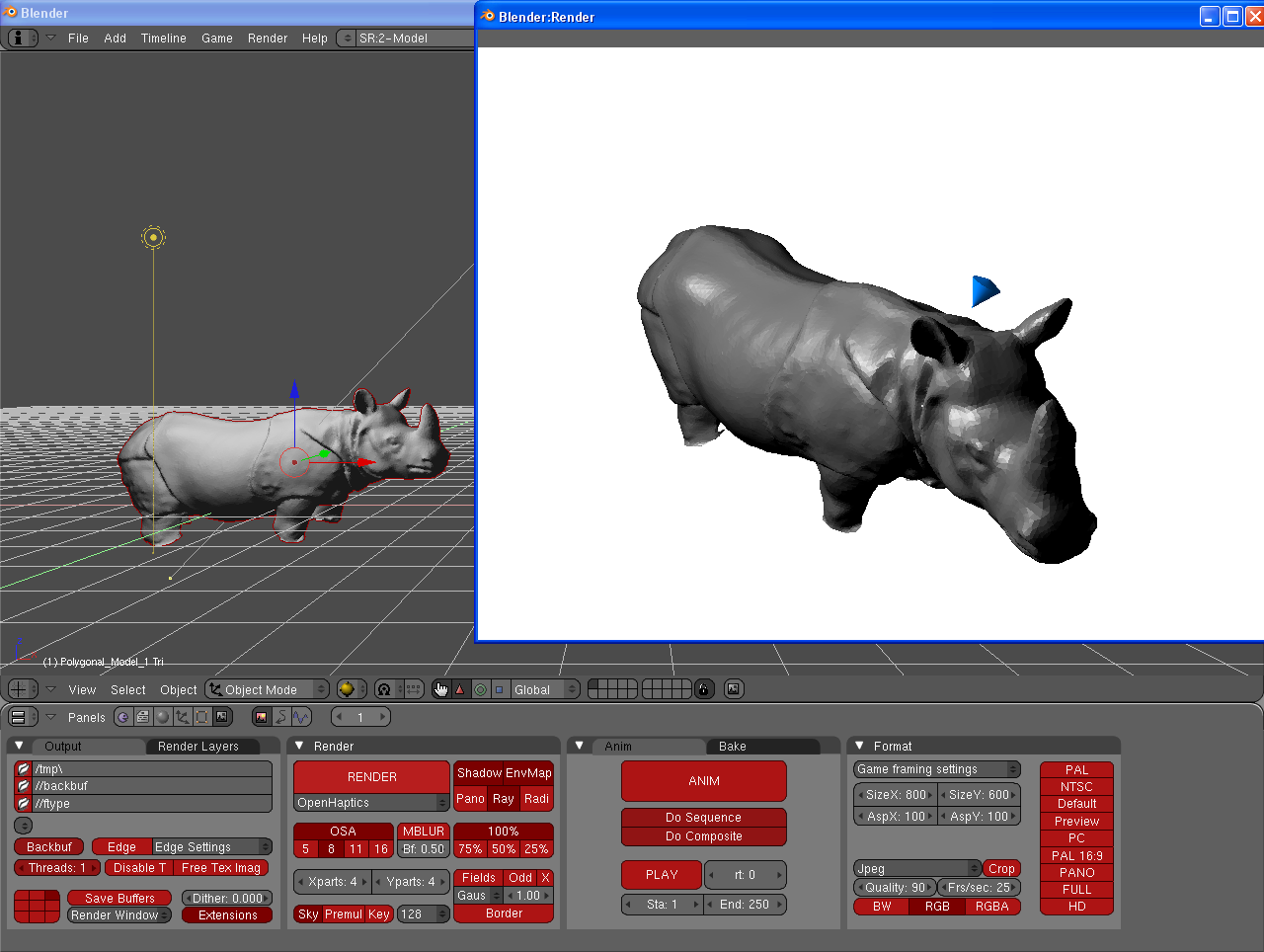

HAMLAT is an extension to Blender that adds menu items for controlling the static physical properties of modeled objects. These properties can be felt using a force feedback device in the environment itself, and they can be imported to and exported from HAMLAT using the Haptic Applications Meta Language (HAML).

For more information, consult the EuroHaptics 2008 paper.

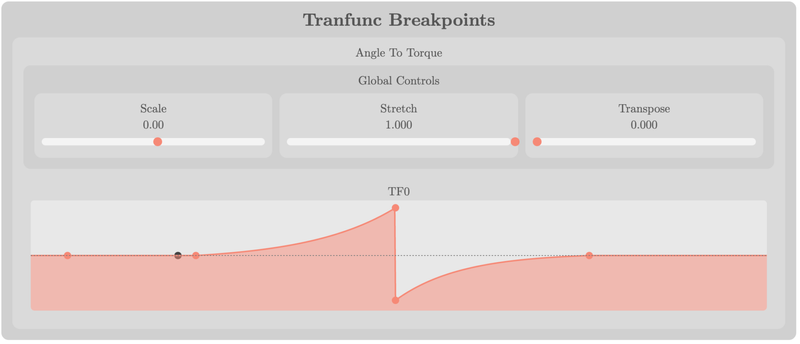

hAPI

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2018 |

| Platformⓘ The OS or software framework needed to run the tool. | Java, C#, Python |

| Availabilityⓘ If the tool can be obtained by the public. | Available |

| Licenseⓘ The type of license applied to the tool. | Open Source (GPL 3) |

| Venueⓘ The venue(s) for publications. | N/A |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Simulation, Education |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Haply 2DIY |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Location-aware |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | N/A |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | N/A |

| Storageⓘ How data is stored for import/export or internally to the software. | None |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | API |

Additional Information

hAPI is a low-level API for controlling the Haply 2DIY device. In its basic form, it handles communication between the host and the device, and it maps between the positions and forces in the device’s workspace and the angles and torques used by the hardware itself. hAPI can also be combined with physics engines such as Fisica for convenience.
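The mapping between workspace quantities and joint quantities can be sketched with standard robot kinematics. The example below is an assumption-laden illustration, not hAPI code: it uses a simple two-link planar serial arm rather than the actual 2DIY linkage, and the function names and link lengths are hypothetical. It shows the general technique of converting joint angles to an end-effector position (forward kinematics) and a desired end-effector force to joint torques (Jacobian transpose).

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Return the (x, y) end-effector position of a two-link planar arm.

    theta1 is the shoulder angle and theta2 the elbow angle relative to
    the first link, both in radians. Link lengths are illustrative.
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def force_to_torques(theta1, theta2, fx, fy, l1=1.0, l2=1.0):
    """Map an end-effector force (fx, fy) to joint torques via tau = J^T F."""
    s1, c1 = math.sin(theta1), math.cos(theta1)
    s12, c12 = math.sin(theta1 + theta2), math.cos(theta1 + theta2)

    # Jacobian of the end-effector position with respect to joint angles.
    j11 = -l1 * s1 - l2 * s12   # dx/dtheta1
    j12 = -l2 * s12             # dx/dtheta2
    j21 = l1 * c1 + l2 * c12    # dy/dtheta1
    j22 = l2 * c12              # dy/dtheta2

    tau1 = j11 * fx + j21 * fy
    tau2 = j12 * fx + j22 * fy
    return tau1, tau2


if __name__ == "__main__":
    angles = (0.0, math.pi / 2)
    print(forward_kinematics(*angles))
    print(force_to_torques(*angles, fx=0.0, fy=1.0))
```

A real device driver would also convert encoder counts to angles and torques to motor commands; those steps depend on the hardware and are left out of this sketch.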

For more information on hAPI, please consult the GitLab repository, the 2DIY development kit page, and the hAPI/Fisica repository.

Haptic Icon Prototyper

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2006 |

| Platformⓘ The OS or software framework needed to run the tool. | Linux |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | IEEE Haptics Symposium |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Prototyping |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Force Feedback |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Class |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | 1 DoF Knob |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time, Action |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Location-aware |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural, Additive |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Track, Keyframe |

| Storageⓘ How data is stored for import/export or internally to the software. | Unknown |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | None |

Additional Information

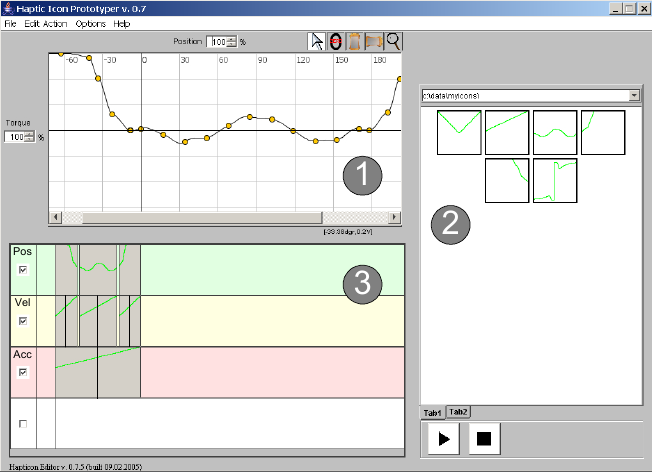

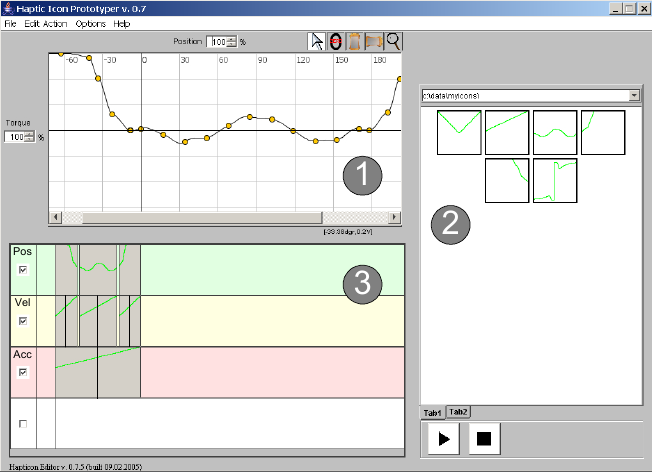

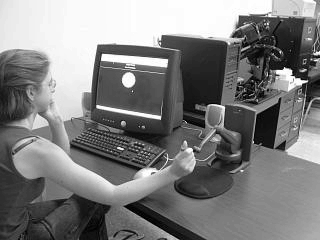

The Haptic Icon Prototyper consists of a waveform editor used to adjust the magnitude of a force-feedback effect over position or time. Waveforms are refined by manipulating keyframes on their visualizations until the desired result is achieved. These waveforms can then be combined in a timeline, either by superimposing them to create a new tile or by sequencing them. Together, these features are meant to support the user in rapidly brainstorming new ideas.
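The keyframe, superimpose, and sequence operations described above can be sketched in a few lines. This is an illustrative model only, not the tool's implementation: it assumes that a waveform is a list of magnitude samples, that keyframes are `(position, magnitude)` pairs with positions spanning 0 to 1, and that values between keyframes are linearly interpolated. All function names are hypothetical.

```python
def sample_keyframes(keyframes, n_samples):
    """Turn sorted (position, magnitude) keyframes into evenly spaced samples.

    Positions must run from 0.0 to 1.0 with no duplicates, and n_samples
    must be at least 2. Linear interpolation is an assumption made here.
    """
    samples = []
    for i in range(n_samples):
        t = i / (n_samples - 1)
        # Find the pair of keyframes surrounding t and interpolate.
        for (t0, m0), (t1, m1) in zip(keyframes, keyframes[1:]):
            if t0 <= t <= t1:
                w = (t - t0) / (t1 - t0)
                samples.append(m0 + w * (m1 - m0))
                break
    return samples


def superimpose(a, b):
    """Combine two equal-length waveforms by adding them sample by sample."""
    return [x + y for x, y in zip(a, b)]


def sequence(a, b):
    """Combine two waveforms by playing one after the other."""
    return a + b


if __name__ == "__main__":
    bump = sample_keyframes([(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)], 5)
    ramp = sample_keyframes([(0.0, 0.0), (1.0, 1.0)], 5)
    print(superimpose(bump, ramp))
    print(sequence(bump, ramp))
```

The sample index can stand for either time or knob position, which matches the idea of editing magnitude over position or time.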

For more information, consult the 2006 Haptics Symposium paper.

Haptic Studio (Immersion)

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2003 |

| Platformⓘ The OS or software framework needed to run the tool. | Windows |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Proprietary |

| Venueⓘ The venue(s) for publications. | N/A |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Haptic Augmentation |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Vibrotactile |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | TouchSense Devices |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Device-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | Audio |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

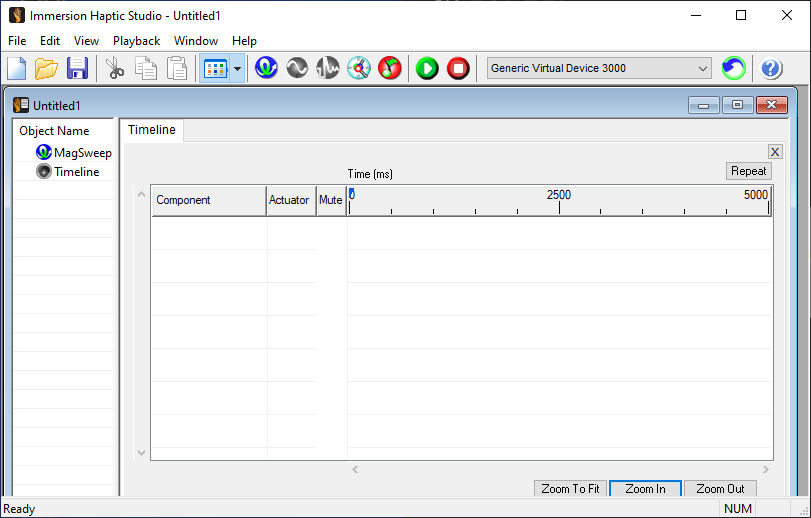

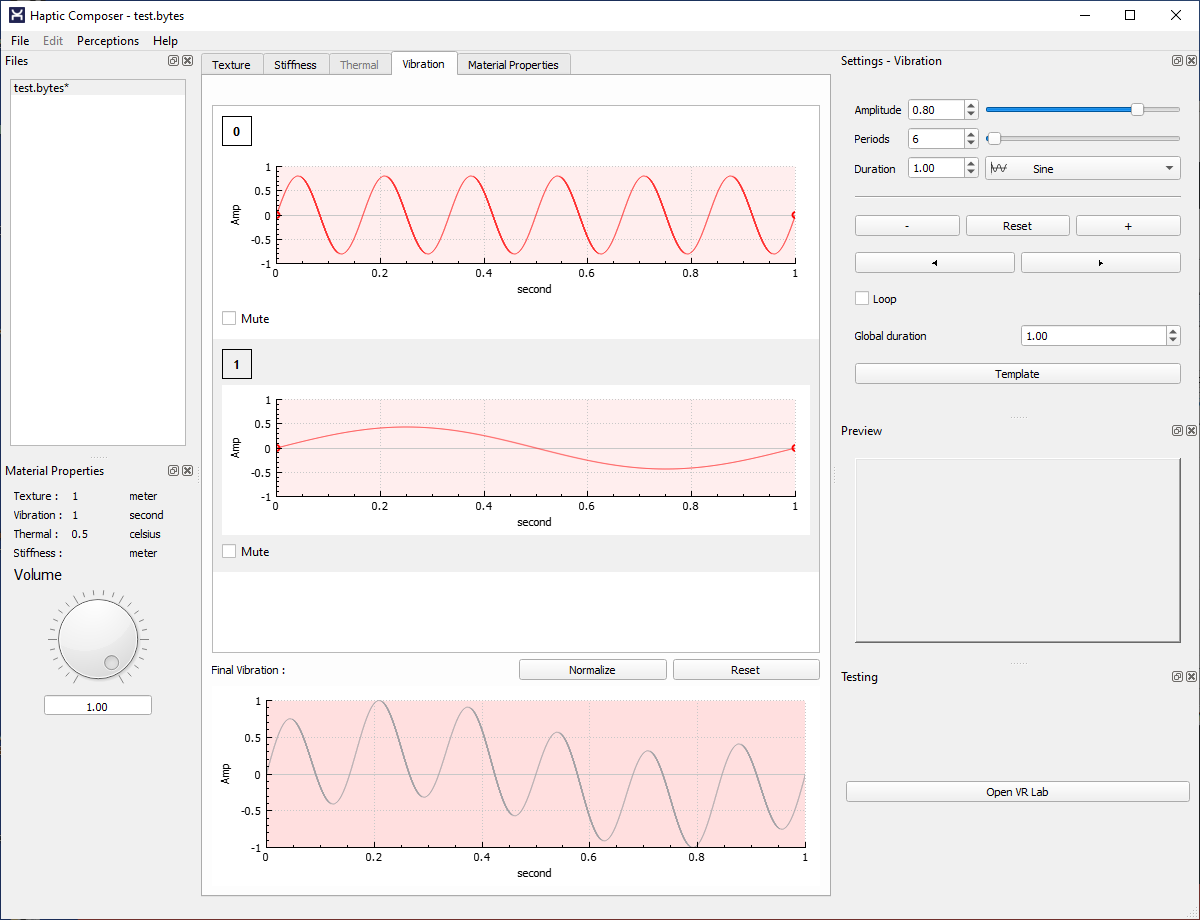

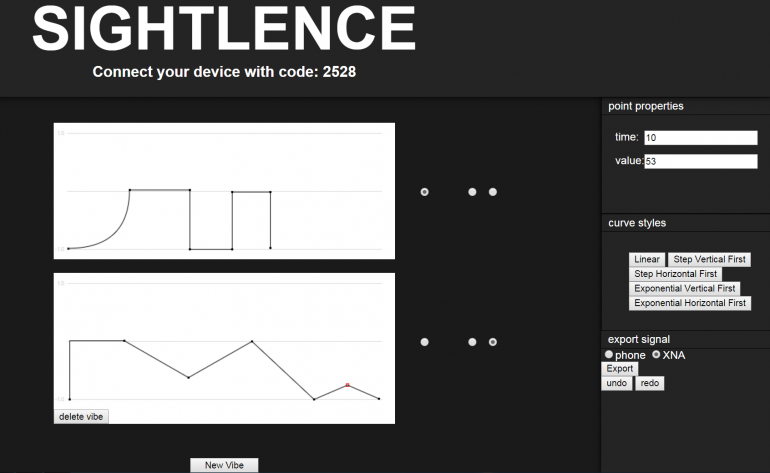

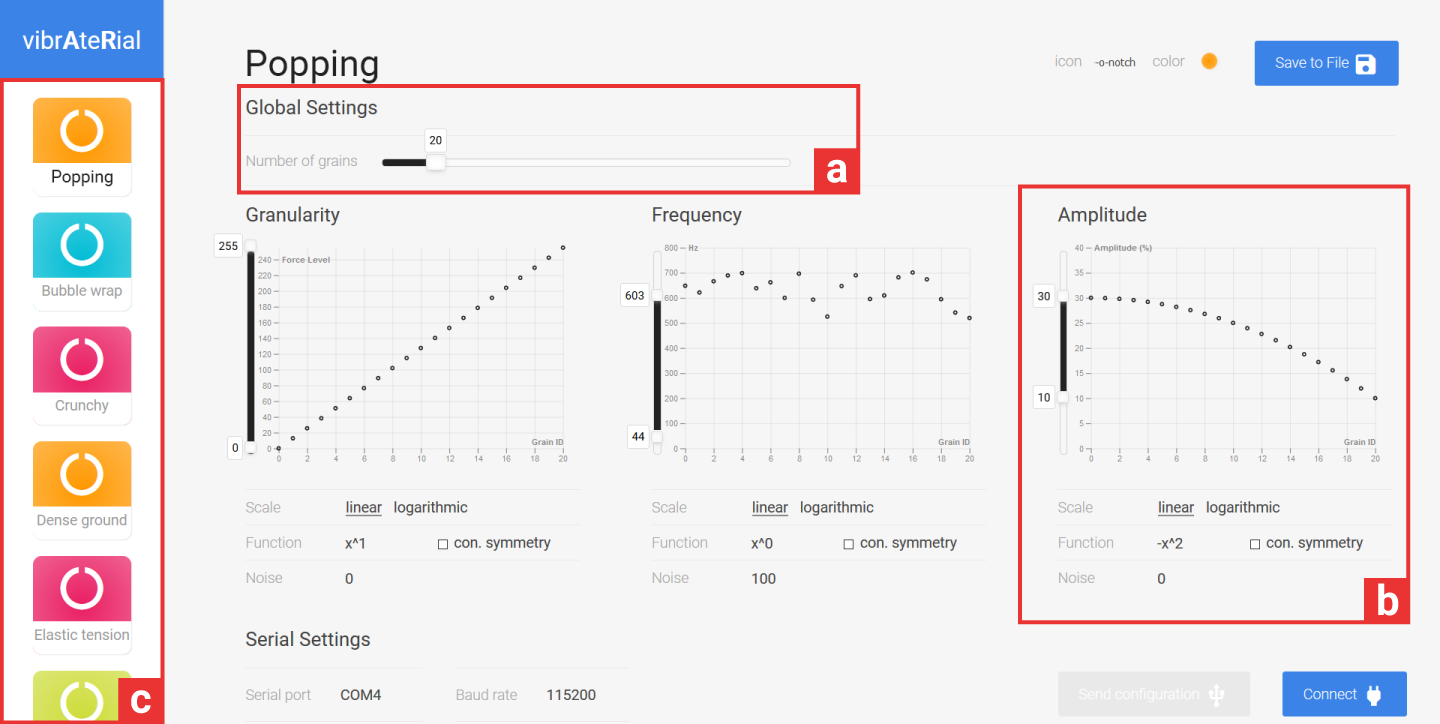

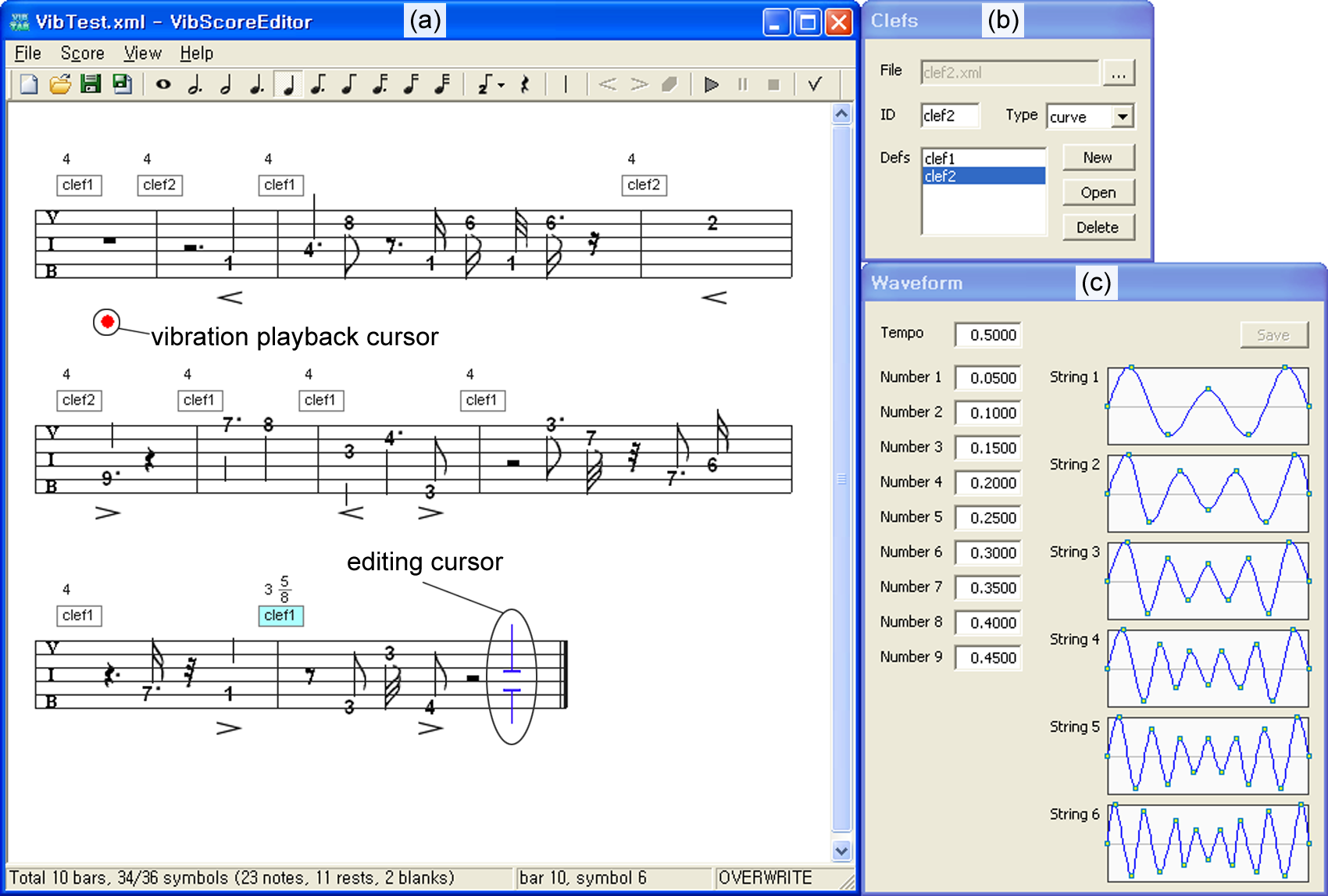

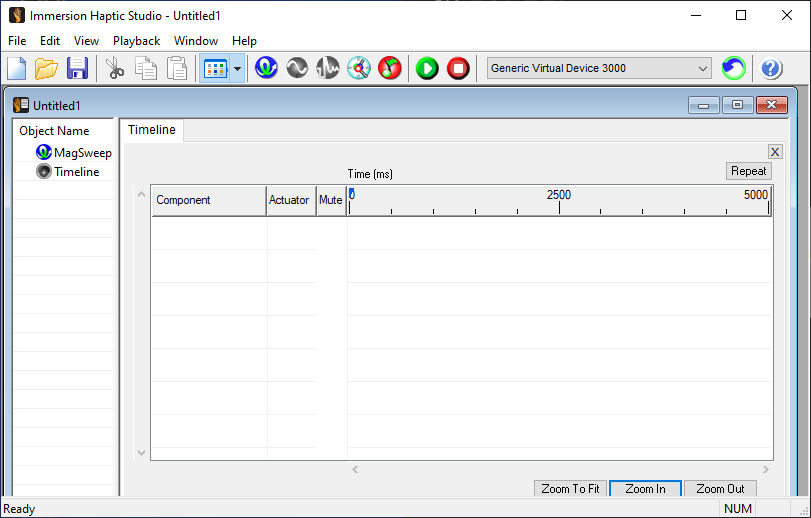

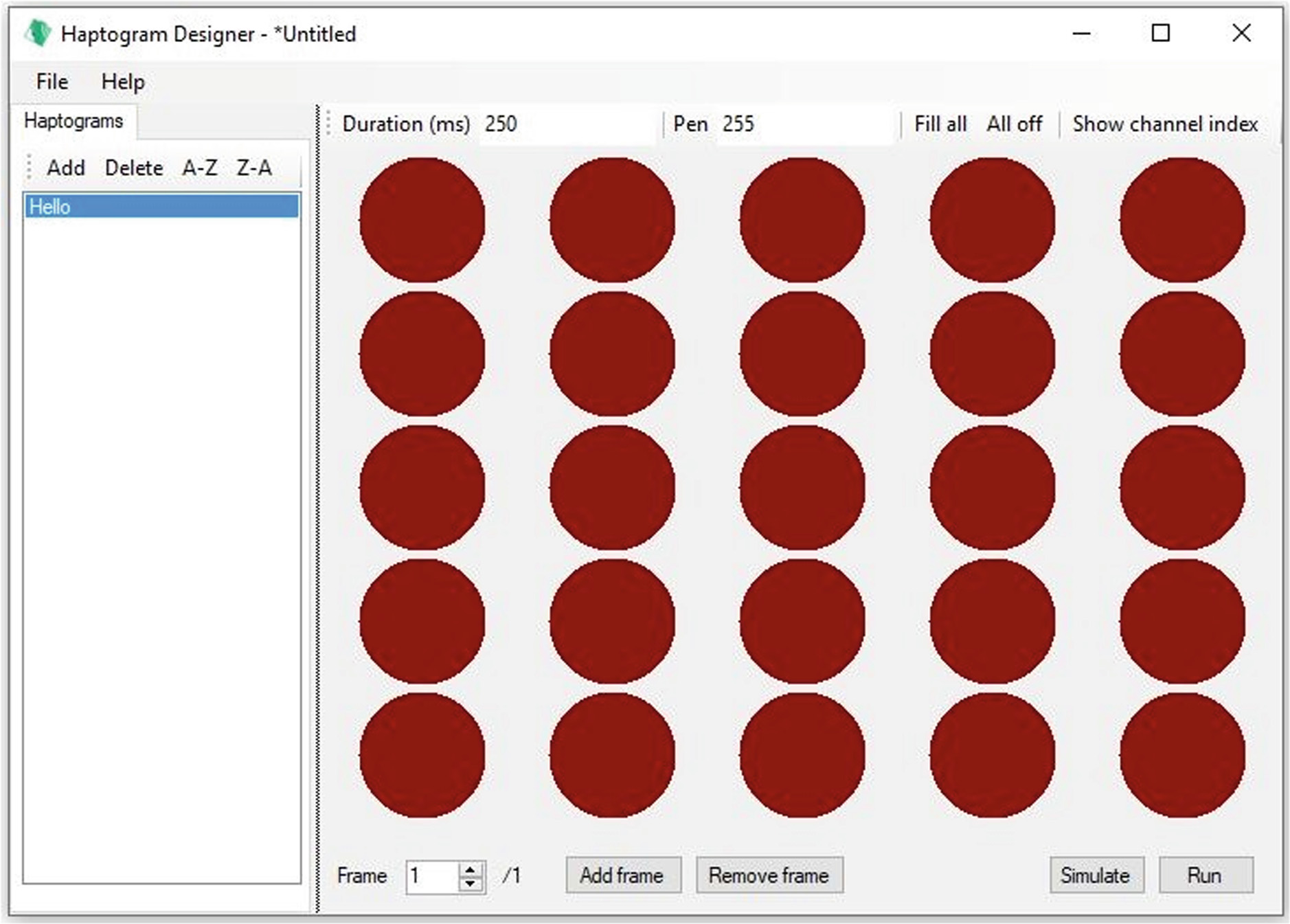

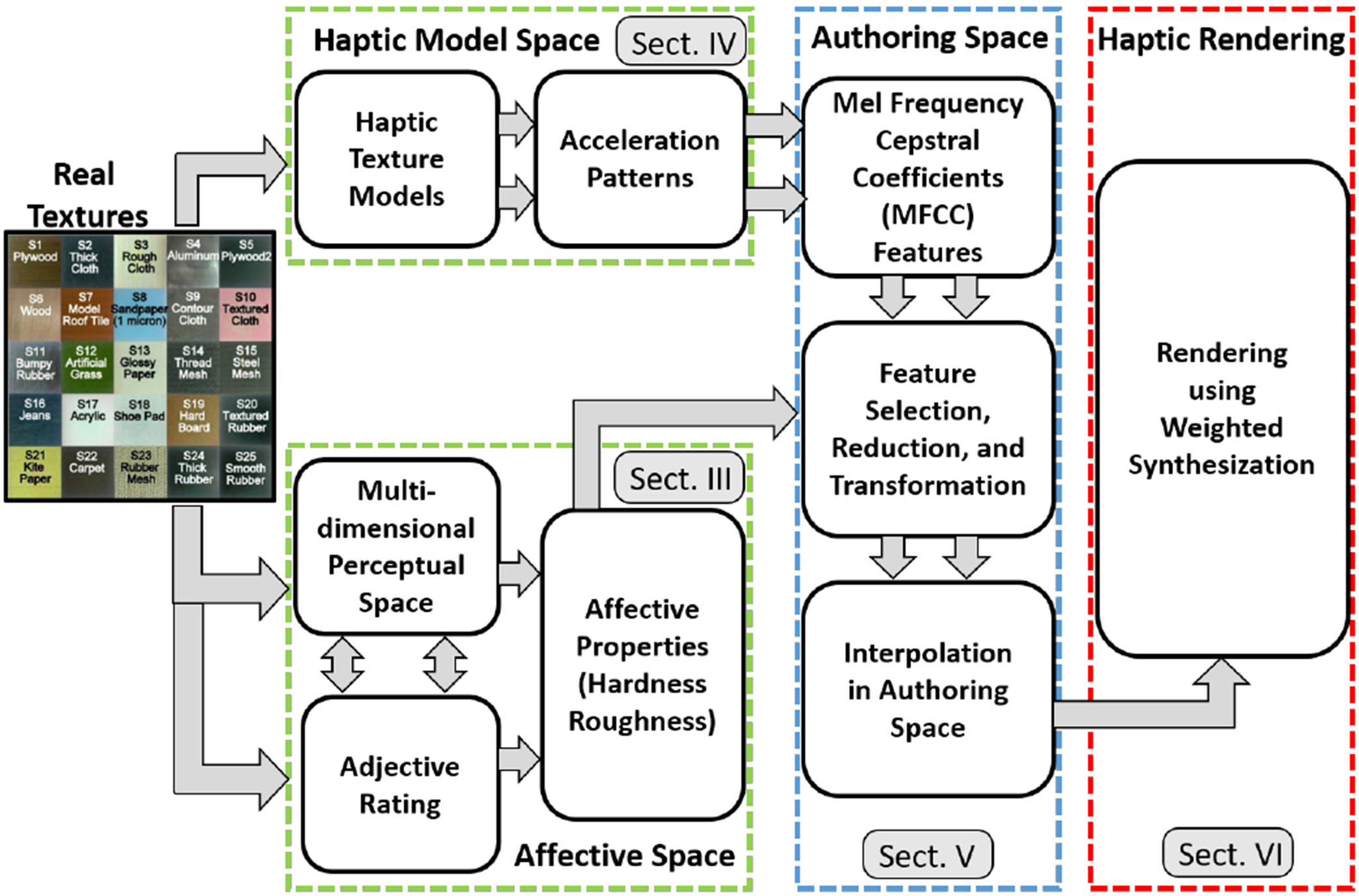

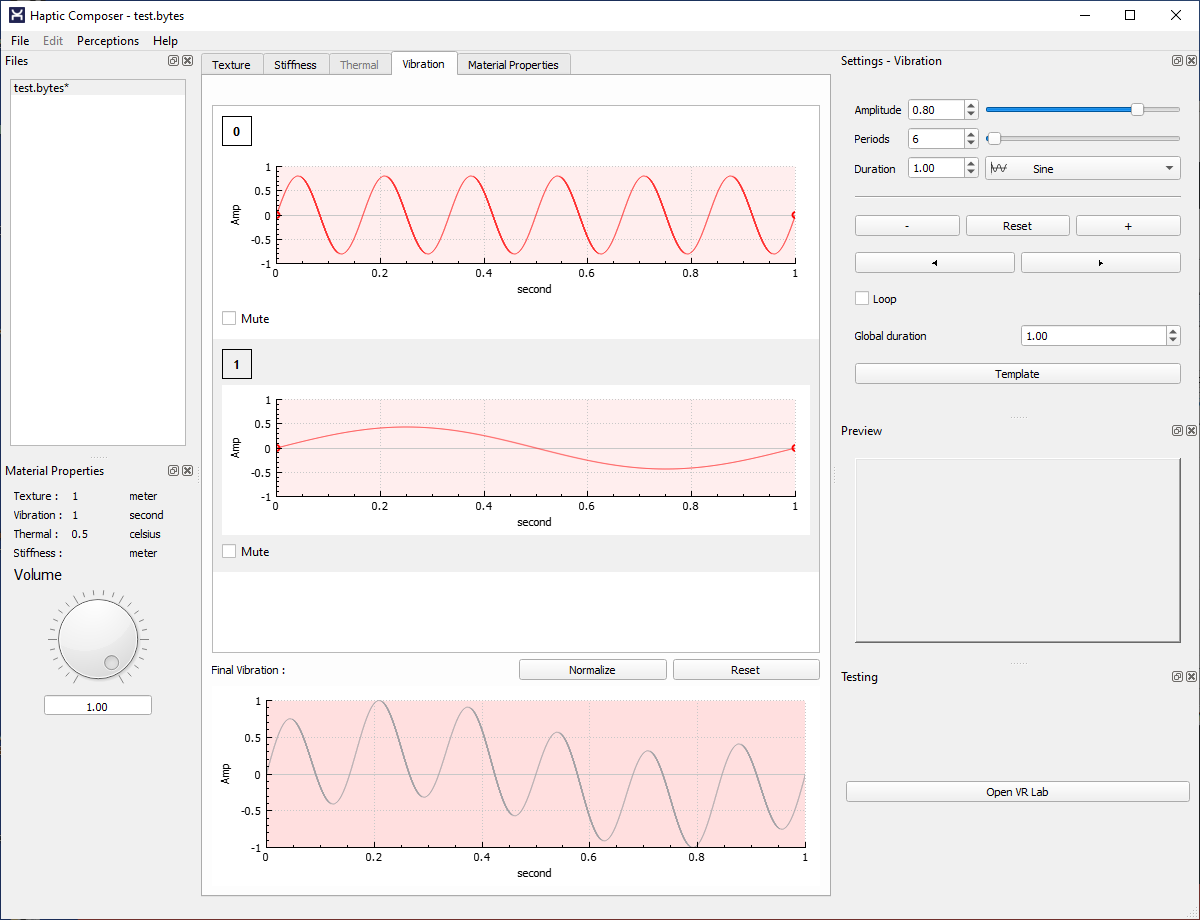

| Design Approachesⓘ Broadly, the methods available to create a desired effect.