AdapTics

Tool Summary

| Metadata | |
|---|---|
| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2024 |
| Platformⓘ The OS or software framework needed to run the tool. | Unity, Rust |
| Availabilityⓘ If the tool can be obtained by the public. | Available |
| Licenseⓘ The type of license applied to the tool. | Open Source (GPL-3.0 and MPL-2.0) |
| Venueⓘ The venue(s) for publications. | ACM CHI |
| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Prototyping, Haptic Augmentation |

| Hardware Information | |
|---|---|
| Categoryⓘ The general types of haptic output devices controlled by the tool. | Vibrotactile |
| Abstractionⓘ How broad the type of hardware support is for a tool. | Consumer |
| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | Ultraleap STRATOS Explore |
| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |
| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | Hand |

| Interaction Information | |
|---|---|
| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time, Action |
| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Target-centric |
| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |
| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |
| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural, Library |
| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Keyframe, Demonstration, Track |
| Storageⓘ How data is stored for import/export or internally to the software. | Custom JSON |
| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | API, WebSockets |

Additional Information

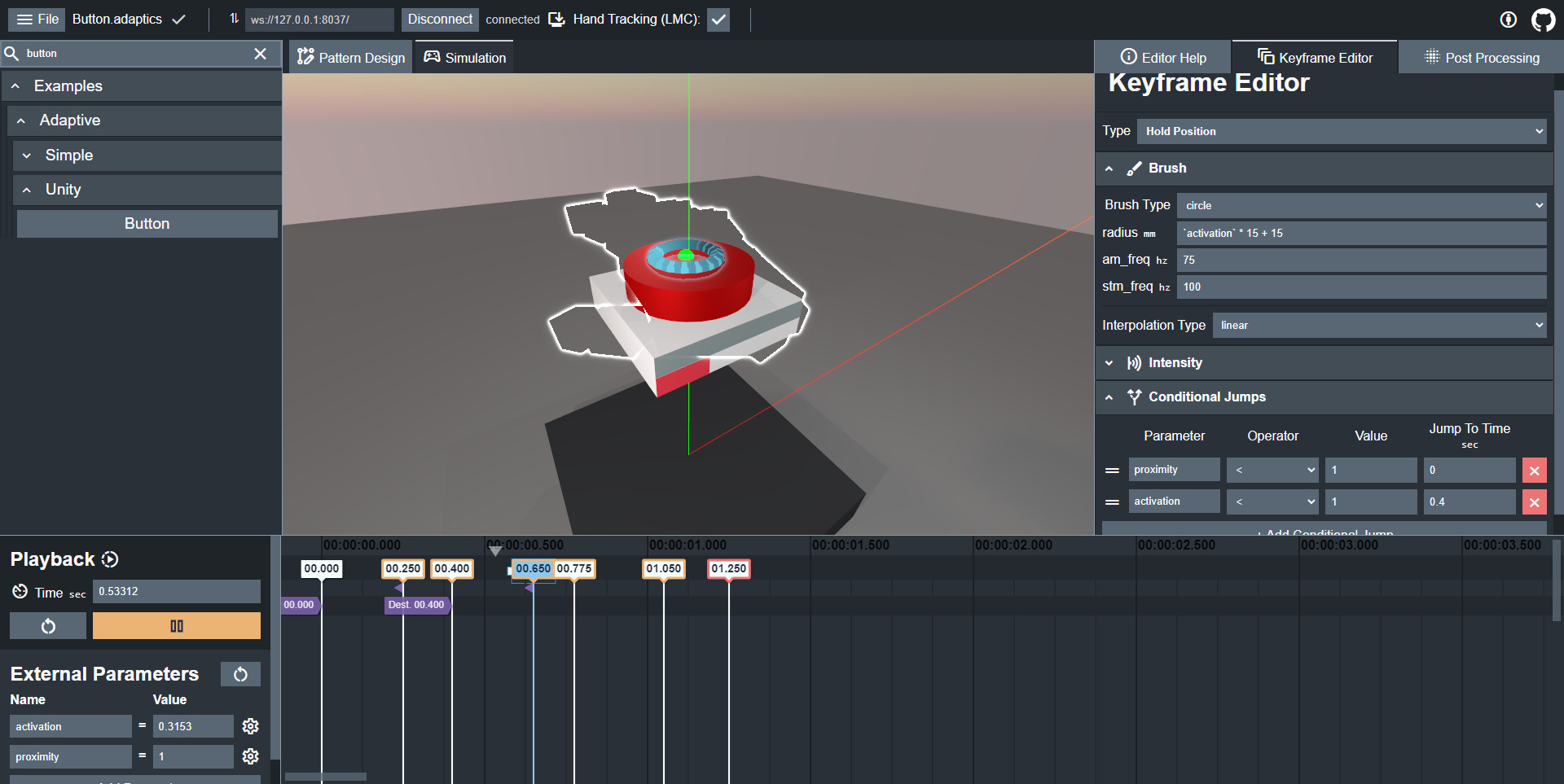

AdapTics is a toolkit for creating adaptive ultrasound tactons, that is, tactons whose parameters change in response to other parameters or events. It consists of two components: the AdapTics Engine and the AdapTics Designer. The Designer, built on Unity, allows for the creation of adaptive tactons using elements commonly found in audio-video editors, and in adaptive audio editors in particular. Tactons can be created freely or in relation to a simulated hand. The Designer communicates with the Engine over WebSockets, and the Engine is responsible for rendering on the connected hardware. While only Ultraleap devices are supported as of writing, the Engine is designed to support future hardware. The Engine can also be used directly through API calls in Rust or C/C++.
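To make the idea of an adaptive tacton more concrete, the following is a minimal Python sketch of one way a keyframed parameter could be modulated by an external value. It does not use the AdapTics API or its JSON format. The names `Keyframe`, `intensity_at`, `adaptive_intensity`, and the `proximity` parameter are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Keyframe:
    """Hypothetical keyframe: an intensity value (0.0 to 1.0) at a point in time."""
    time_ms: float
    intensity: float


def intensity_at(keyframes, t_ms):
    """Linearly interpolate intensity at t_ms from keyframes sorted by time."""
    if t_ms <= keyframes[0].time_ms:
        return keyframes[0].intensity
    if t_ms >= keyframes[-1].time_ms:
        return keyframes[-1].intensity
    for a, b in zip(keyframes, keyframes[1:]):
        if a.time_ms <= t_ms <= b.time_ms:
            if b.time_ms == a.time_ms:  # guard against duplicate timestamps
                return b.intensity
            frac = (t_ms - a.time_ms) / (b.time_ms - a.time_ms)
            return a.intensity + frac * (b.intensity - a.intensity)


def adaptive_intensity(keyframes, t_ms, params, param_name="proximity"):
    """Scale the keyframed intensity by an external parameter clamped to [0, 1].

    `params` stands in for values an application might update at runtime
    (an assumption for this sketch); a missing parameter leaves the
    keyframed intensity unchanged.
    """
    scale = min(max(params.get(param_name, 1.0), 0.0), 1.0)
    return intensity_at(keyframes, t_ms) * scale


# Usage: a ramp from 0.0 to 1.0 over 100 ms, attenuated by a runtime value.
ramp = [Keyframe(0.0, 0.0), Keyframe(100.0, 1.0)]
half_strength = adaptive_intensity(ramp, 50.0, {"proximity": 0.5})
```

In this sketch the time-driven part (the keyframes) and the action-driven part (the runtime parameter) are kept separate, which mirrors the "Time, Action" driving features listed above. How AdapTics combines them internally is not specified here.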

To learn more about AdapTics, read the CHI 2024 paper or consult the AdapTics Engine and AdapTics Designer GitHub repositories.