Feelix

Tool Summary

| Metadata | Description | Value |
|---|---|---|
| Release Year | The year a tool was first publicly released or discussed in an academic paper. | 2020 |
| Platform | The OS or software framework needed to run the tool. | Windows, macOS |
| Availability | Whether the tool can be obtained by the public. | Available |
| License | The type of license applied to the tool. | Open Source (MIT) |
| Venue | The venue(s) for publications. | ACM ICMI, ACM NordiCHI, ACM TEI |
| Intended Use Case | The primary purposes for which the tool was developed. | Prototyping |

| Hardware Information | Description | Value |
|---|---|---|
| Category | The general types of haptic output devices controlled by the tool. | Force Feedback |
| Abstraction | How broad the type of hardware support is for a tool. | Class |
| Device Names | The hardware supported by the tool. This may be incomplete. | Brushless Motors |
| Device Template | Whether support can be easily extended to new types of devices. | No |
| Body Position | Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | Description | Value |
|---|---|---|
| Driving Feature | Whether haptic content is controlled over time, by other actions, or both. | Time, Action |
| Effect Localization | How the desired location of stimuli is mapped to the device. | Device-centric |
| Non-Haptic Media | Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |
| Iterative Playback | Whether haptic effects can be played back from the tool to aid in the design process. | Yes |
| Design Approaches | Broadly, the methods available to create a desired effect. | Direct, Procedural, Additive, Library |
| UI Metaphors | Common UI metaphors that define how a user interacts with a tool. | Track, Demonstration, Dataflow |
| Storage | How data is stored for import/export or internally to the software. | Feelix Effect File |
| Connectivity | How the tool can be extended to support new data, devices, and software. | API |

Additional Information

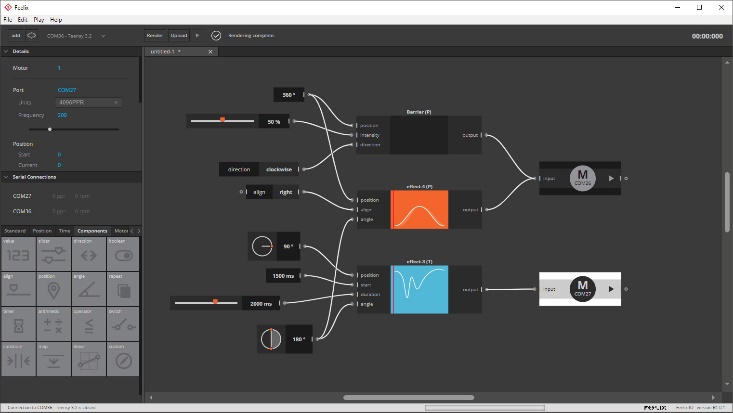

Feelix supports the creation of force-feedback effects on a one-degree-of-freedom (1-DoF) motor through two main interfaces. The first lets users sketch effects over either motor position or time; time-based effects, whether user-created or pre-existing, can then be sequenced in a timeline. The second interface provides a dataflow programming environment for directly controlling the connected motor. Parameters of these effects can be connected to different inputs to support real-time adjustment of the haptic interaction.
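To make the idea of sketching an effect "over position or time" more concrete, here is a minimal sketch that stores a sketched effect as (x, torque) breakpoints and evaluates it by linear interpolation, where x is motor position (degrees) for a position-based effect or local time (ms) for a time-based one. A small timeline then plays time-based clips at given start times. All names (`SketchedEffect`, `Timeline`), the normalized torque range of [-1, 1], piecewise-linear interpolation, and summing then clamping overlapping clips are assumptions for illustration only; this is not Feelix's API, its effect file format, or its internal algorithm, and the dataflow interface is not modeled.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class SketchedEffect:
    """Hypothetical sketched force curve stored as (x, torque) breakpoints.

    x is motor position (degrees) for a position-based effect, or local time
    (ms) for a time-based effect. Torque is normalized to [-1, 1].
    Illustration only; not Feelix's data model.
    """
    points: list[tuple[float, float]]

    def __post_init__(self) -> None:
        if len(self.points) < 2:
            raise ValueError("a sketched effect needs at least two breakpoints")
        self.points = sorted(self.points)

    def value(self, x: float) -> float:
        """Linearly interpolate the curve at x, holding the end values outside it."""
        xs = [px for px, _ in self.points]
        if x <= xs[0]:
            return self.points[0][1]
        if x >= xs[-1]:
            return self.points[-1][1]
        i = bisect_right(xs, x)  # guarantees xs[i - 1] <= x < xs[i]
        (x0, y0), (x1, y1) = self.points[i - 1], self.points[i]
        return y0 + (x - x0) / (x1 - x0) * (y1 - y0)


@dataclass
class Timeline:
    """Hypothetical timeline: overlapping time-based clips are summed, then clamped."""
    clips: list[tuple[float, float, SketchedEffect]] = field(default_factory=list)

    def add(self, start_ms: float, duration_ms: float, effect: SketchedEffect) -> None:
        self.clips.append((start_ms, duration_ms, effect))

    def torque_at(self, t_ms: float) -> float:
        total = 0.0
        for start, duration, effect in self.clips:
            if start <= t_ms < start + duration:
                # Evaluate each clip in its own local time, starting at 0 ms.
                total += effect.value(t_ms - start)
        return max(-1.0, min(1.0, total))


if __name__ == "__main__":
    # Position-based: a bump felt while rotating the motor from 0 to 20 degrees.
    bump = SketchedEffect([(0, 0.0), (5, 0.6), (10, 0.0), (15, -0.6), (20, 0.0)])
    print(bump.value(2.5))   # 0.3
    print(bump.value(12.5))  # -0.3

    # Time-based: a 100 ms triangular pulse placed twice, overlapping by 50 ms.
    pulse = SketchedEffect([(0, 0.0), (50, 1.0), (100, 0.0)])
    timeline = Timeline()
    timeline.add(0, 100, pulse)
    timeline.add(50, 100, pulse)
    print(timeline.torque_at(75))  # 1.0
```

Clamping the summed torque keeps overlapping clips within the assumed normalized range; a real controller would additionally map normalized torque to motor current and sample the curve at its own control rate.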

For more information, consult the 2020 ICMI paper, the NordiCHI’22 tutorial, the TEI’23 studio, and the Feelix documentation.