posVibEditor

Tool Summary

| Metadata | |

|---|---|

| Release Yearⓘ The year a tool was first publicly released or discussed in an academic paper. | 2008 |

| Platformⓘ The OS or software framework needed to run the tool. | Windows |

| Availabilityⓘ If the tool can be obtained by the public. | Unavailable |

| Licenseⓘ The type of license applied to the tool. | Unknown |

| Venueⓘ The venue(s) for publications. | IEEE HAVE, IEEE WHC |

| Intended Use Caseⓘ The primary purposes for which the tool was developed. | Prototyping, Hardware Control |

| Hardware Information | |

|---|---|

| Categoryⓘ The general types of haptic output devices controlled by the tool. | Vibrotactile |

| Abstractionⓘ How broad the type of hardware support is for a tool. | Class |

| Device Namesⓘ The hardware supported by the tool. This may be incomplete. | ERM |

| Device Templateⓘ Whether support can be easily extended to new types of devices. | No |

| Body Positionⓘ Parts of the body where stimuli are felt, if the tool explicitly shows this. | N/A |

| Interaction Information | |

|---|---|

| Driving Featureⓘ If haptic content is controlled over time, by other actions, or both. | Time |

| Effect Localizationⓘ How the desired location of stimuli is mapped to the device. | Device-centric |

| Non-Haptic Mediaⓘ Support for non-haptic media in the workspace, even if just to aid in manual synchronization. | None |

| Iterative Playbackⓘ If haptic effects can be played back from the tool to aid in the design process. | Yes |

| Design Approachesⓘ Broadly, the methods available to create a desired effect. | Direct, Procedural, Additive, Library |

| UI Metaphorsⓘ Common UI metaphors that define how a user interacts with a tool. | Track, Keyframe |

| Storageⓘ How data is stored for import/export or internally to the software. | Custom XML |

| Connectivityⓘ How the tool can be extended to support new data, devices, and software. | None |

Additional Information

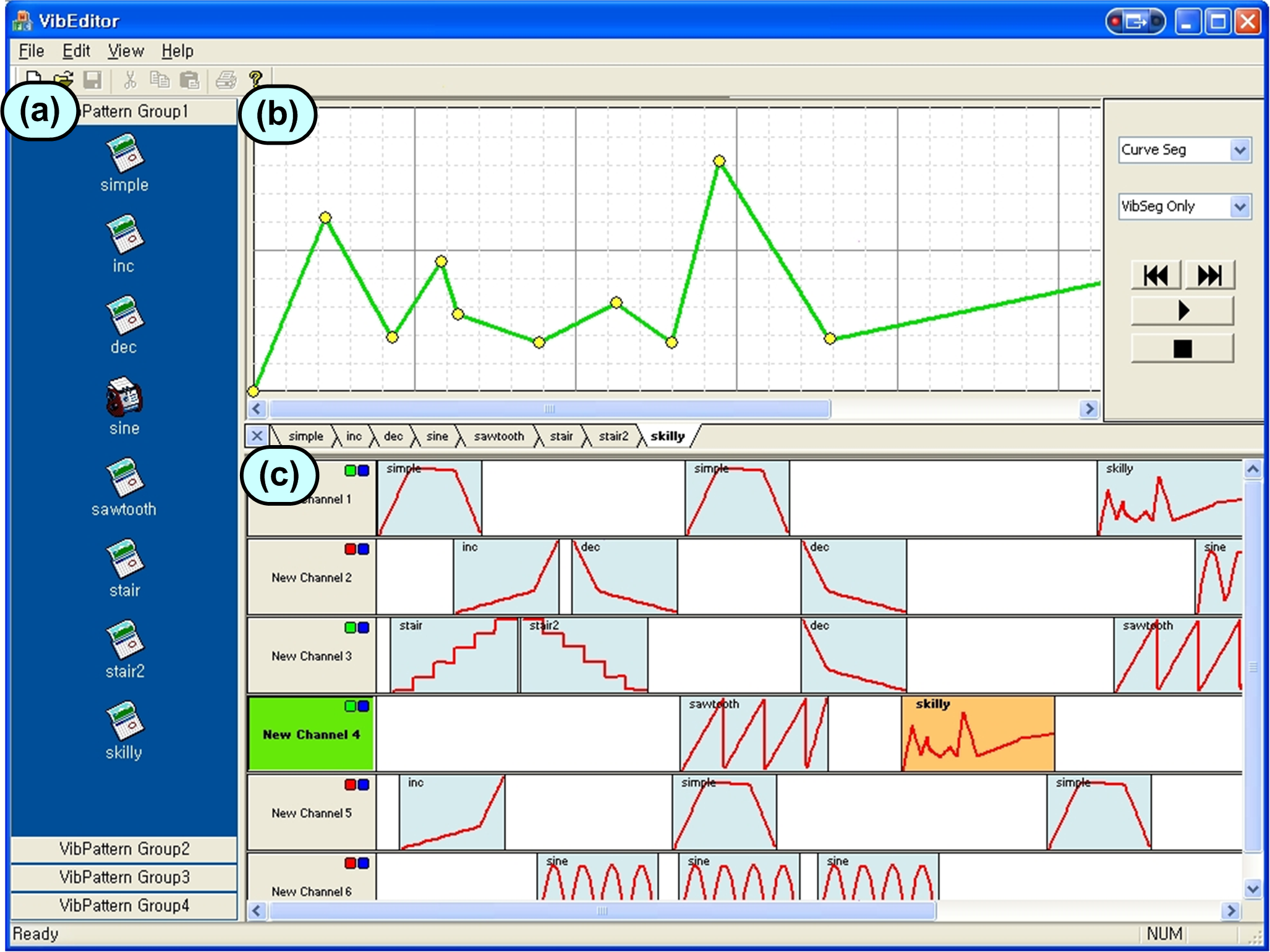

The posVibEditor supports the creation of vibration patterns across multiple ERM motors. Vibration assets are created by manipulating keyframes in a plot of vibration intensity over time. These assets, or provided templates, can then be copied into a track interface, where the user chooses which motor plays each asset and when. A “perceptually transparent rendering” mode adjusts the mapping from asset amplitude values to output voltage values so that the authored effect is felt as intended.
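The workflow described above can be illustrated with a minimal Python sketch. It is not the posVibEditor's implementation or file format. The names `Asset`, `Clip`, `motor_intensity`, `voltage_for`, and the `CALIBRATION` values are all hypothetical, and the choices of linear interpolation between keyframes, summing overlapping clips, and a piecewise-linear calibration curve are assumptions made only for illustration.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Asset:
    """A vibration pattern: (time_s, intensity) keyframes sorted by time."""
    keyframes: list  # e.g. [(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)]

    def intensity_at(self, t):
        """Linearly interpolate intensity between neighbouring keyframes.

        Linear interpolation is an assumption; outside the keyframe range
        the asset is treated as silent.
        """
        times = [k[0] for k in self.keyframes]
        if t < times[0] or t > times[-1]:
            return 0.0
        i = bisect_right(times, t)
        if i == len(times):  # t sits exactly on the last keyframe
            return self.keyframes[-1][1]
        (t0, v0), (t1, v1) = self.keyframes[i - 1], self.keyframes[i]
        return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


@dataclass
class Clip:
    """An asset placed on one motor's track at a given start time."""
    motor: int
    start_s: float
    asset: Asset


def motor_intensity(clips, motor, t):
    """Intensity for one motor at time t.

    Overlapping clips are summed and clamped to 1.0 (an assumption).
    """
    total = sum(c.asset.intensity_at(t - c.start_s)
                for c in clips if c.motor == motor)
    return min(1.0, total)


# Hypothetical calibration data: (drive voltage in V, normalised perceived
# intensity). Real values would come from measurements of the actual motor.
CALIBRATION = [(0.0, 0.0), (1.0, 0.5), (2.0, 0.8), (3.0, 1.0)]


def voltage_for(intensity):
    """Invert the calibration curve by piecewise-linear interpolation."""
    intensity = max(0.0, min(1.0, intensity))
    for (v0, p0), (v1, p1) in zip(CALIBRATION, CALIBRATION[1:]):
        if intensity <= p1:
            return v0 + (v1 - v0) * (intensity - p0) / (p1 - p0)
    return CALIBRATION[-1][0]


# Usage: one triangular asset placed on two motors, the second delayed by 0.5 s.
ramp = Asset([(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)])
clips = [Clip(motor=0, start_s=0.0, asset=ramp),
         Clip(motor=1, start_s=0.5, asset=ramp)]
for motor in (0, 1):
    level = motor_intensity(clips, motor, t=0.75)
    print(motor, level, voltage_for(level))
```

The point of the last step is that the authored value is treated as a target perceived intensity rather than a raw drive level, so the voltage sent to the motor is looked up through the inverse of a measured response curve.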

For more information, consult the 2008 IEEE Workshop on Haptic Audio Visual Environments and Games (HAVE) paper and the 2009 IEEE World Haptics Conference (WHC) paper.